Hacking the Brain with Adversarial Images

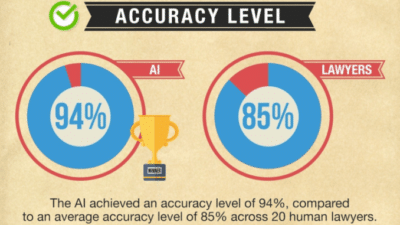

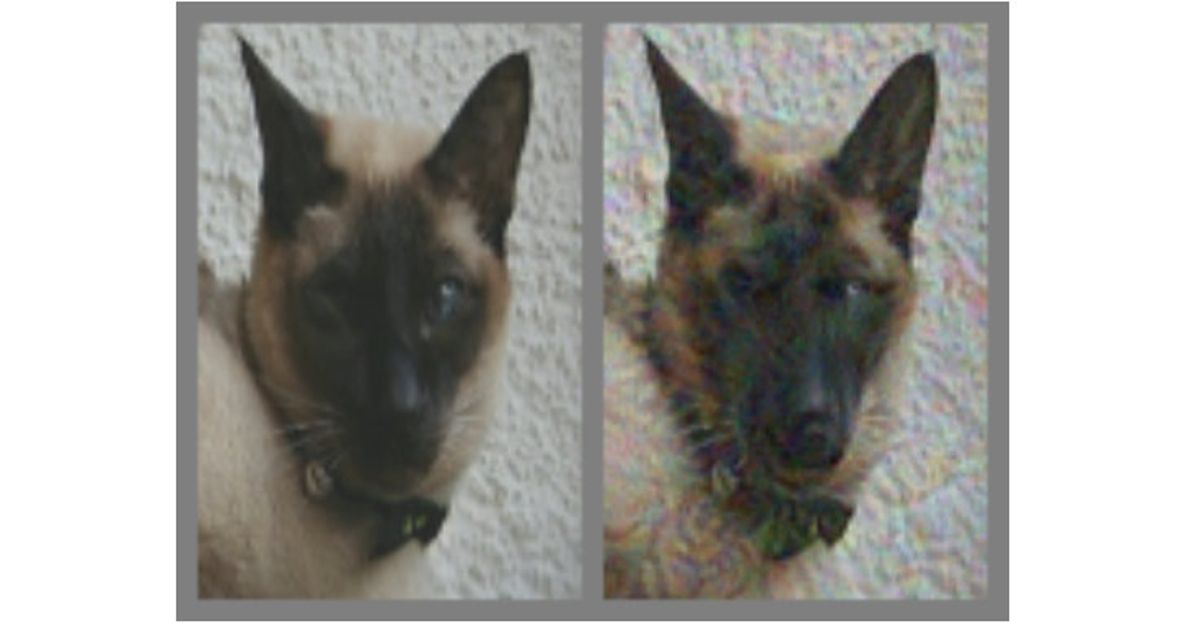

This is an example of what’s called an adversarial image: an image specifically designed to fool neural networks into misidentifying what they’re looking at. Researchers at Google Brain set out to determine whether the same techniques that fool artificial neural networks can also fool the biological neural networks inside our heads. To do this, they developed adversarial images capable of making both computers and humans think they’re looking at something they aren’t.

Source: ieee.org
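One common way to construct an adversarial image is the fast gradient sign method (FGSM). It nudges every pixel by a small amount `eps` in whichever direction most increases the classifier's error.

The sketch below is a minimal illustration under several assumptions:

- It attacks a toy logistic-regression classifier, not a deep network.
- The 8-pixel "image", the weights, and `eps` are hypothetical values chosen by hand.
- It is not necessarily the technique the Google Brain researchers used.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def predict(w, b, x):
    """Return class 1 if the linear score is positive, else class 0."""
    return int(np.dot(w, x) + b > 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method against a (toy) logistic-regression model.

    For binary cross-entropy loss, the gradient with respect to the input x
    is (sigmoid(w.x + b) - y) * w. Stepping eps along its sign increases
    the loss while changing each pixel by at most eps.
    """
    grad = (sigmoid(np.dot(w, x) + b) - y) * w
    x_adv = x + eps * np.sign(grad)
    return np.clip(x_adv, 0.0, 1.0)  # keep pixel values in a valid range


# Hypothetical toy "image" of 8 pixels and hand-picked weights.
w = np.tile([0.5, -0.5], 4)
b = 0.0
x = np.tile([0.6, 0.5], 4)

x_adv = fgsm(w, b, x, y=1, eps=0.1)
print(predict(w, b, x), predict(w, b, x_adv))  # prints: 1 0
print(np.max(np.abs(x_adv - x)))               # largest per-pixel change, about 0.1
```

No pixel moves by more than 0.1, yet the predicted class flips from 1 to 0. A deep network has millions of pixels and a far more complex decision boundary, but the same idea applies: many individually tiny changes, each aligned with the model's gradient, add up to a different answer.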