Paper Repro: “Self-Normalizing Neural Networks”

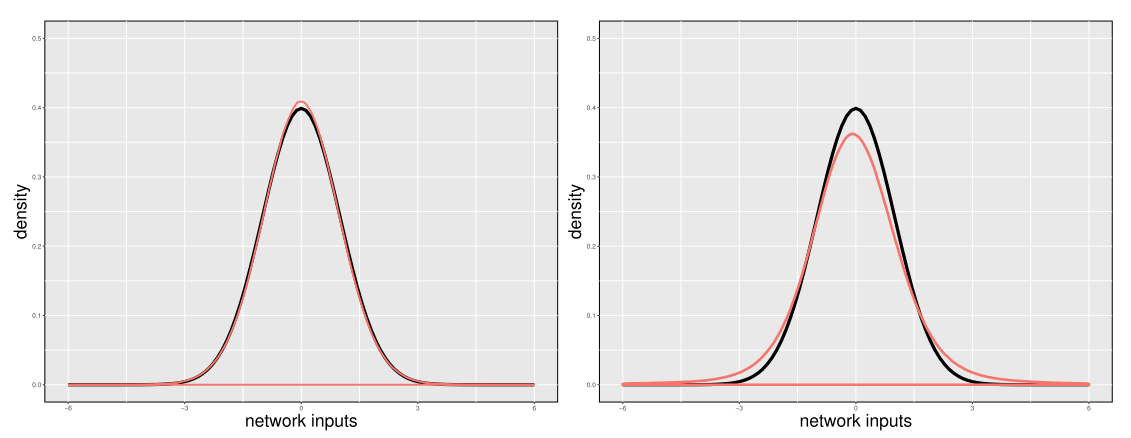

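SNNs are really cool: their goal is to build neural networks in which, if the inputs to a layer are normalized (zero mean and unit variance), the layer's outputs automatically stay normalized too, so the property carries through every layer of the network. This is exciting because normalizing the outputs of layers is known to be a very effective way to improve the training of neural networks. The current ways of doing it (e.g. BatchNorm) bolt an extra normalization step onto the network that relies on mini-batch statistics during training. In SNNs, by contrast, the normalization is an intrinsic part of the mathematics of the network: it comes from the SELU activation function combined with a suitable weight initialization.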
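To make the "self-normalizing" property concrete, here is a minimal NumPy sketch (an illustration, not the paper's code). It assumes fully connected layers without biases, weights drawn from N(0, 1/fan_in) (LeCun-normal initialization, matching the zero-mean, variance-1/n initialization the paper analyzes), and the SELU constants α ≈ 1.6733 and λ ≈ 1.0507 from the paper. The helper names `selu` and `run_stack` and the layer sizes are hypothetical choices for this example. It pushes standard-normal inputs through a deep stack of SELU layers and records the mean and variance after each one.

```python
import numpy as np

# SELU constants from the paper (alpha ~ 1.6733, lambda ~ 1.0507)
ALPHA = 1.6732632423543772
LAMBDA = 1.0507009873554805


def selu(x):
    """Scaled exponential linear unit: lambda * x for x > 0,
    lambda * alpha * (exp(x) - 1) otherwise."""
    # np.minimum keeps expm1 from overflowing on large positive inputs;
    # np.where discards that branch for x > 0 anyway.
    return LAMBDA * np.where(x > 0, x, ALPHA * np.expm1(np.minimum(x, 0.0)))


def run_stack(n_layers=32, width=256, batch=1024, seed=0):
    """Hypothetical helper: feed standard-normal inputs through n_layers
    bias-free dense SELU layers and return (mean, variance) after each."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((batch, width))  # zero-mean, unit-variance input
    stats = []
    for _ in range(n_layers):
        # Assumed LeCun-normal init: weights ~ N(0, 1 / fan_in)
        w = rng.standard_normal((width, width)) / np.sqrt(width)
        x = selu(x @ w)
        stats.append((float(x.mean()), float(x.var())))
    return stats


# Print the statistics every 8 layers
for depth, (mean, var) in enumerate(run_stack(), start=1):
    if depth % 8 == 0:
        print(f"layer {depth:2d}: mean {mean:+.3f}, variance {var:.3f}")
```

The printed statistics should stay close to mean 0 and variance 1 at every depth, up to sampling noise, with no normalization layer anywhere in the stack. As a contrast, swapping `selu` for ReLU (`np.maximum(x, 0)`) under the same initialization makes the activations shrink toward zero layer after layer.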

Source: becominghuman.ai