Automatic Photography With Google Clips

How could we train an algorithm to recognize interesting moments? As with most machine learning problems, we started with a dataset: thousands of videos in diverse scenarios where we imagined Clips being used.

We also made sure our dataset represented a wide range of ethnicities, genders, and ages. We then hired expert photographers and video editors to pore over this footage to select the best short video segments. These early curations gave us examples for our algorithms to emulate.

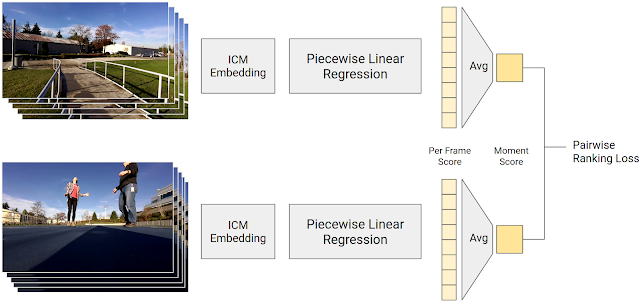

However, it is challenging to train an algorithm solely from the curators' subjective selections. One needs a smooth gradient of labels to teach an algorithm to recognize the quality of content, ranging from ‘perfect’ to ‘terrible.’ To address this problem, we took a second data-collection approach, with the goal of creating a continuous quality score across the length of a video. We split each video into short segments (similar to the content Clips captures), randomly selected pairs of segments, and asked human raters to select the one they preferred.
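
To make the pairwise setup concrete, here is a minimal Python sketch of the sampling step, followed by one simple way the resulting judgments could be turned into a per-segment score. The 5-second segment length, the function names, the simulated rater, and the win-rate aggregation are all assumptions for illustration; they are not the method described above, which only states that pairs were sampled at random and shown to human raters.

```python
import random
from collections import defaultdict

def split_into_segments(duration_s, segment_s):
    """Return (start, end) times for consecutive fixed-length segments."""
    n = int(duration_s // segment_s)
    return [(i * segment_s, (i + 1) * segment_s) for i in range(n)]

def sample_pairs(num_segments, num_pairs, rng):
    """Randomly pick pairs of distinct segment indices for raters to compare."""
    return [tuple(rng.sample(range(num_segments), 2)) for _ in range(num_pairs)]

def win_rate_scores(num_segments, judgments):
    """Turn (winner, loser) judgments into a score in [0, 1] per segment.

    Win rate is an assumed stand-in aggregation, not the original method.
    Segments that were never compared get None.
    """
    wins = defaultdict(int)
    seen = defaultdict(int)
    for winner, loser in judgments:
        wins[winner] += 1
        seen[winner] += 1
        seen[loser] += 1
    return [wins[i] / seen[i] if seen[i] else None for i in range(num_segments)]

# Hypothetical example: a 60-second video cut into 5-second segments.
rng = random.Random(0)
segments = split_into_segments(60, 5)
pairs = sample_pairs(len(segments), 200, rng)

# Simulated rater (assumption): always prefers the later segment,
# standing in for a human's judgment of which clip is better.
judgments = [(max(a, b), min(a, b)) for a, b in pairs]
scores = win_rate_scores(len(segments), judgments)
```

Even with only binary choices as input, the scores spread out along a scale rather than collapsing into "selected" versus "not selected", which is the property the pairwise approach is after. A production system would more likely fit a statistical preference model than use raw win rates.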

Source: googleblog.com