What’s New in Deep Learning Research: Inside Google’s Semantic Experiences

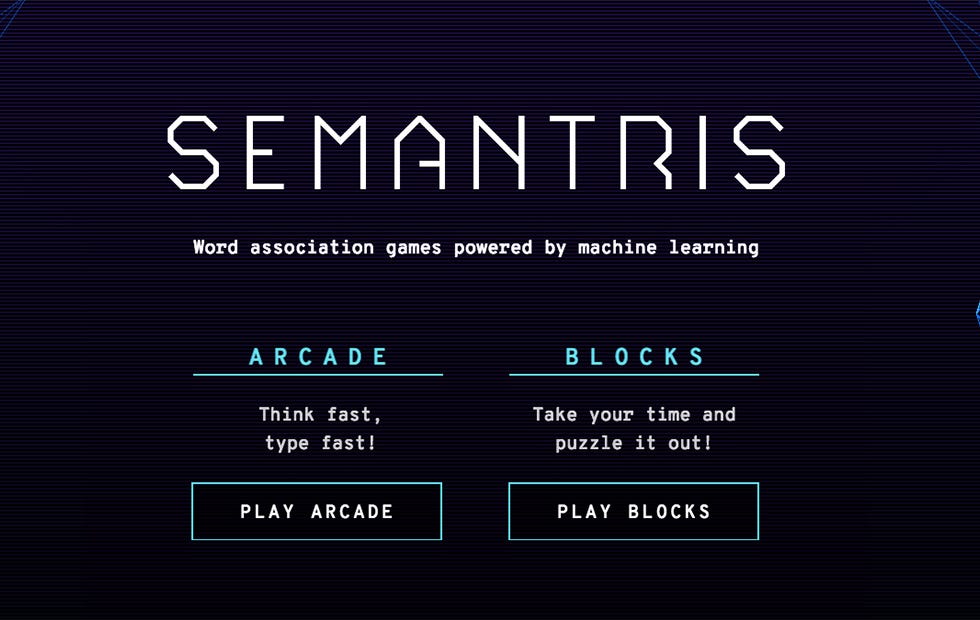

Last week Google Research made news with the release of Semantic Experiences, a website that serves as a playground for evaluating recent advances in natural language understanding (NLU). The initial release included two pseudo-games that illustrate the practical viability of some of Google’s latest NLU research. The first is Talk to Books; the second, Semantris, is a game powered by machine learning in which you type words associated with a given prompt.

The experience might seem trivial but, speaking as an end user, I can tell you it can become addictive. Talk to Books and Semantris are the first practical implementations of the Universal Sentence Encoder technique. This method was recently outlined in a paper published by a team of Google researchers that includes world-renowned author and futurist Ray Kurzweil.

Conceptually, the Universal Sentence Encoder technique represents language in a vector space using vectors that stand for larger units of text, such as sentences or paragraphs, whereas traditional models focused mostly on word vectors.
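
To make the vector-space idea concrete, here is a minimal sketch of how sentence vectors can be compared once they exist. It is not Google's implementation: the three-dimensional vectors in `TOY_EMBEDDINGS` are invented for illustration (a real encoder would produce them, with far more dimensions), and the helper names `cosine_similarity` and `rank_by_similarity` are hypothetical. Cosine similarity is used here as one common way to measure closeness between vectors.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_by_similarity(query_vec, candidates):
    """Return candidate sentences ordered from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text)
              for text, vec in candidates.items()]
    return [text for _, text in sorted(scored, reverse=True)]


# Hypothetical sentence vectors, made up for this example.
# In practice a sentence encoder would compute these from the text.
TOY_EMBEDDINGS = {
    "How do I bake bread?": [0.9, 0.1, 0.0],
    "What is a good recipe for a loaf?": [0.8, 0.2, 0.1],
    "Who won the football match?": [0.0, 0.1, 0.9],
}

query_text = "How do I bake bread?"
query_vec = TOY_EMBEDDINGS[query_text]
others = {t: v for t, v in TOY_EMBEDDINGS.items() if t != query_text}

# The recipe sentence shares no words with the query, yet its vector
# points in nearly the same direction, so it ranks first.
ranking = rank_by_similarity(query_vec, others)
print(ranking)
```

The point of the sketch is that similarity is computed on whole-sentence vectors rather than on individual words, so two sentences with no vocabulary in common can still land close together.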

Source: towardsdatascience.com