AI and Compute

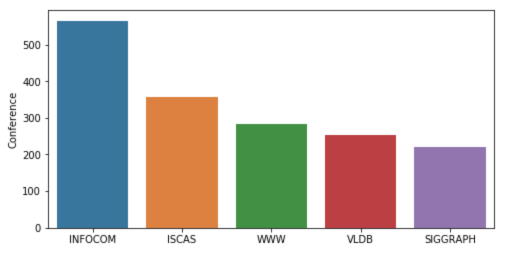

We’re releasing an analysis showing that since 2012, the amount of compute used in the largest AI training runs has been increasing exponentially with a 3.5-month doubling time (by comparison, Moore’s Law had an 18-month doubling period). Since 2012, this metric has grown by more than 300,000x (an 18-month doubling period would yield only a 12x increase). Improvements in compute have been a key component of AI progress, so as long as this trend continues, it’s worth preparing for the implications of systems far outside today’s capabilities.
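As a rough check on how these figures relate, the sketch below converts between a doubling time and a growth factor. The function names are illustrative, and the elapsed period is not stated in the text; it is inferred here from the quoted 300,000x and the 3.5-month doubling time.

```python
import math


def growth_factor(elapsed_months: float, doubling_months: float) -> float:
    """Multiplicative growth after elapsed_months at a fixed doubling time."""
    return 2.0 ** (elapsed_months / doubling_months)


def implied_elapsed_months(factor: float, doubling_months: float) -> float:
    """Months needed to grow by `factor` at a fixed doubling time."""
    return doubling_months * math.log2(factor)


# Assumption: infer the elapsed period from the quoted figures
# (300,000x growth at a 3.5-month doubling time).
elapsed = implied_elapsed_months(300_000, 3.5)

# Growth over the same period with an 18-month doubling time.
moore_growth = growth_factor(elapsed, 18.0)

print(f"implied period: {elapsed:.1f} months")
print(f"18-month doubling over that period: {moore_growth:.1f}x")
```

Under these assumptions the implied period is a little over five years, and an 18-month doubling time over the same period gives roughly the 12x quoted above.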

The chart shows the total amount of compute, in petaflop/s-days, that was used to train selected results that are relatively well known, used a lot of compute for their time, and gave enough information to estimate the compute used. A petaflop/s-day (pfs-day) consists of performing 10^15 neural net operations per second for one day, or a total of about 10^20 operations. The compute-time product serves as a mental convenience, similar to kW-hr for energy.
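The unit arithmetic can be written out as a short sketch. The constant and function names below are illustrative, not taken from the original analysis.

```python
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds
PETA_OPS_PER_SECOND = 10**15     # one petaflop/s, in operations per second

# Operations in one petaflop/s-day: 8.64 * 10^19, i.e. about 10^20.
PFS_DAY_OPS = PETA_OPS_PER_SECOND * SECONDS_PER_DAY


def pfs_days_from_ops(total_ops: float) -> float:
    """Convert a total operation count to petaflop/s-days."""
    return total_ops / PFS_DAY_OPS


def pfs_days_from_rate(ops_per_second: float, days: float) -> float:
    """Petaflop/s-days for a sustained rate held over a number of days."""
    return ops_per_second / PETA_OPS_PER_SECOND * days


print(f"{PFS_DAY_OPS:.3e} operations per pfs-day")
```

For example, a hypothetical run sustaining 10^14 operations per second for ten days comes to one pfs-day, in the same way that a 100 W load running for ten hours comes to one kW-hr.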

We don’t measure peak theoretical FLOPS of the hardware but instead try to estimate the number of actual operations performed. We count adds and multiplies as separate operations, we count any add or multiply as a single operation regardless of numerical precision (making “FLOP” a slight misnomer), and we ignore ensemble models. Example calculations that went into this graph are provided in this appendix.
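To illustrate the counting convention only, here is a minimal sketch for a single hypothetical fully connected layer with a bias term. It is not one of the calculations behind the chart; the function name and layer sizes are assumptions made for the example.

```python
def dense_layer_ops(n_inputs: int, n_outputs: int) -> int:
    """Operations for one forward pass of a hypothetical dense layer.

    Each output sums n_inputs products plus a bias, which is
    n_inputs multiplies and n_inputs adds. Adds and multiplies are
    counted separately, and numerical precision does not affect the
    count, following the convention described above.
    """
    multiplies = n_inputs * n_outputs
    adds = n_inputs * n_outputs
    return multiplies + adds


# Hypothetical layer sizes, chosen only for illustration.
print(dense_layer_ops(1024, 4096))
```

The count is the same whether the layer runs in 16-bit or 32-bit arithmetic, which is why the text calls "FLOP" a slight misnomer.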

Doubling time for line of best fit shown is 3.43 months.

Source: openai.com