A Deep Dive into Monte Carlo Tree Search

The very first Go AIs used multiple modules to handle each aspect of playing Go: life and death, capturing races, opening theory, endgame theory, and so on. The idea was that if experts programmed each module with heuristics, the AI would become an expert in every area of the game. That approach came to a grinding halt with the introduction of Monte Carlo Tree Search (MCTS) around 2006.

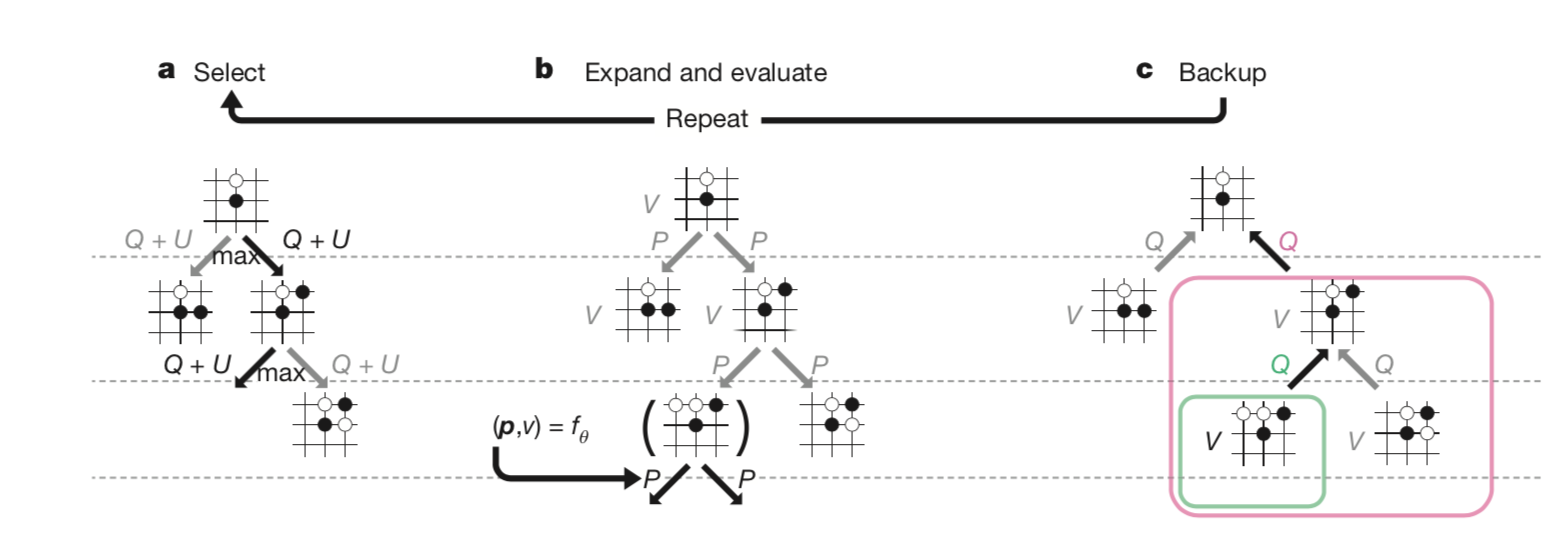

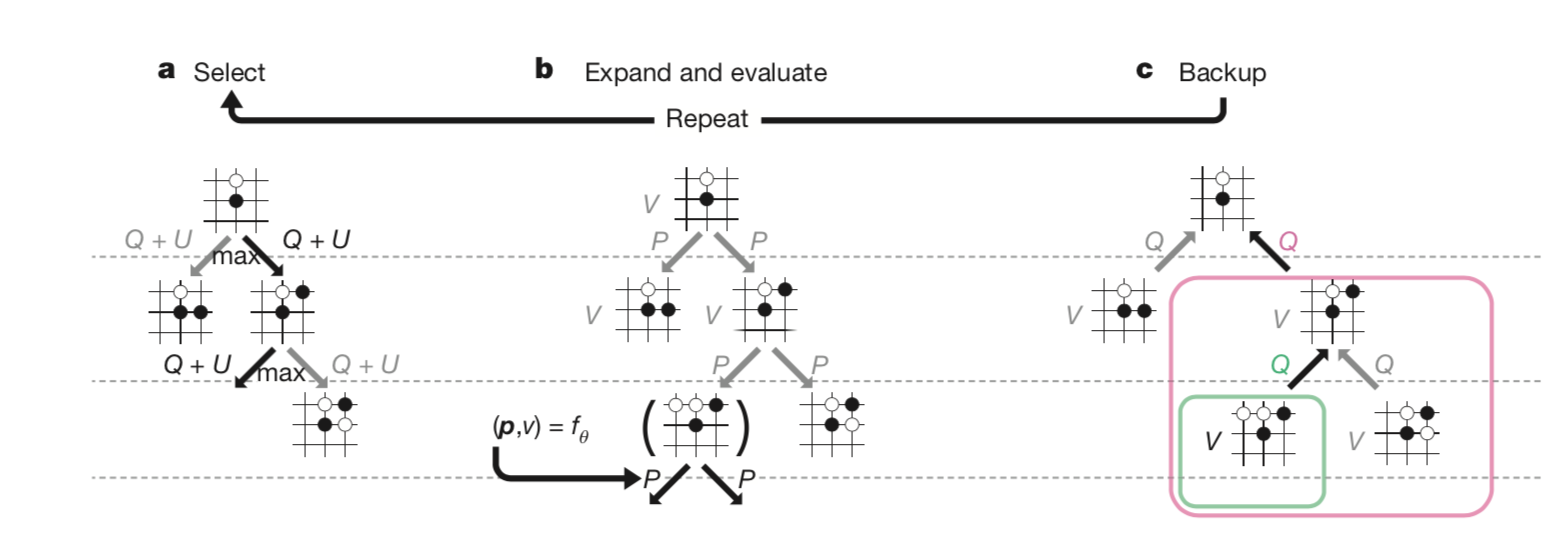

MCTS dumped the idea of modules in favor of a single, generic tree search algorithm that operates in all stages of the game. MCTS AIs still used hand-crafted heuristics to make the tree search more efficient and accurate, but they far outperformed non-MCTS AIs. Go AIs then continued to improve through a mix of algorithmic improvements and better heuristics.

In 2016, AlphaGo leapfrogged the best MCTS AIs by replacing some heuristics with deep learning models, and AlphaGo Zero in 2017 replaced all heuristics with learned models.

Source: moderndescartes.com