The Billion Data Point Challenge: Building a Query Engine for High Cardinality Time Series Data

Uber, like most large technology companies, relies extensively on metrics to effectively monitor its entire stack. From low-level system metrics, such as the memory utilization of a host, to high-level business metrics, such as the number of Uber Eats orders in a particular city, metrics allow our engineers to gain insight into how our services are operating on a daily basis. As the dimensionality and usage of our metrics increase, common solutions like Prometheus and Graphite become difficult to manage and sometimes cease to work.

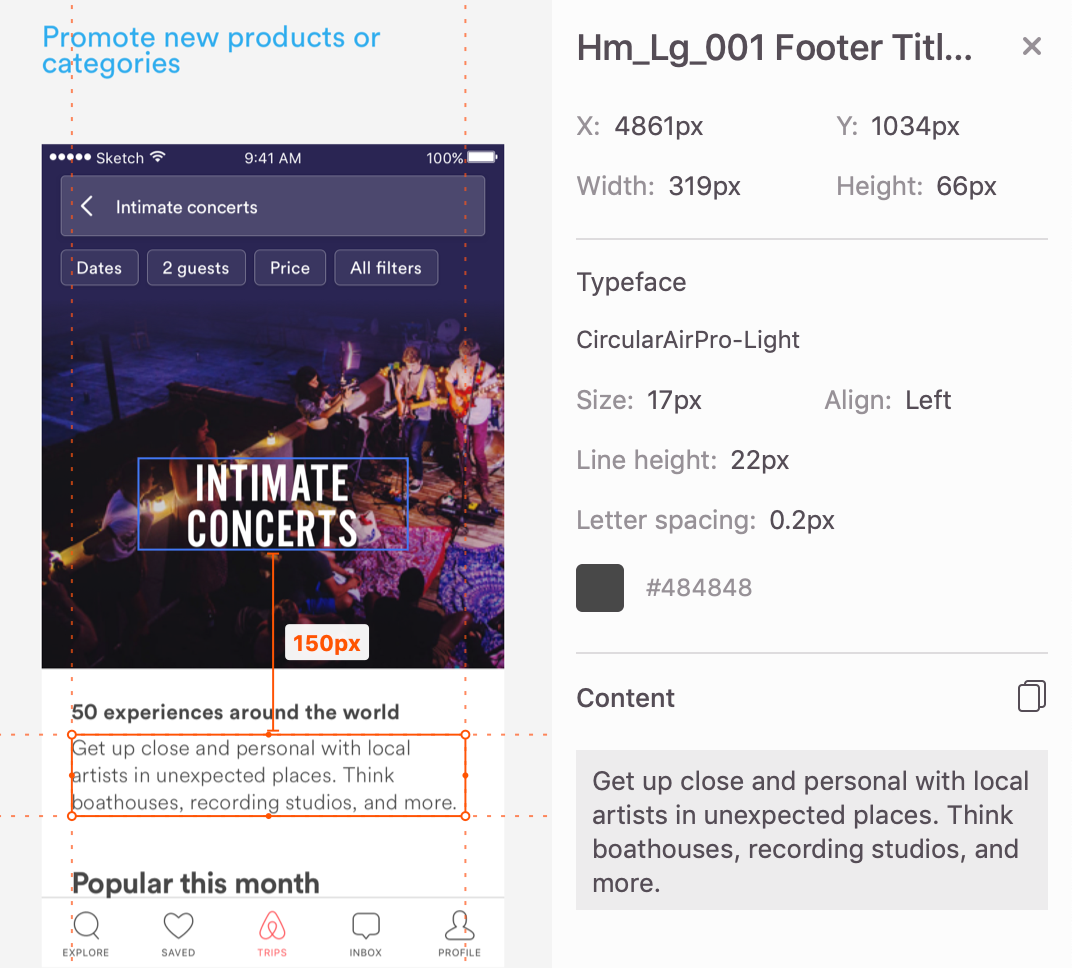

Due to a lack of available solutions, we decided to build an in-house, open source metrics platform, named M3, that could handle the scale of our metrics. A major component of the M3 platform is its query engine, which we built from the ground up and have been using internally for several years. As of November 2018, our metrics query engine handles around 2,500 queries per second (Figure 1), about 8.5 billion data points per second (Figure 2), and approximately 35 Gbps (Figure 3).

These numbers have been trending upward at a rate much higher than Uber’s organic growth, driven by the increased adoption of metrics across various parts of our stack.

Source: uber.com