A Crash Course For Running Istio

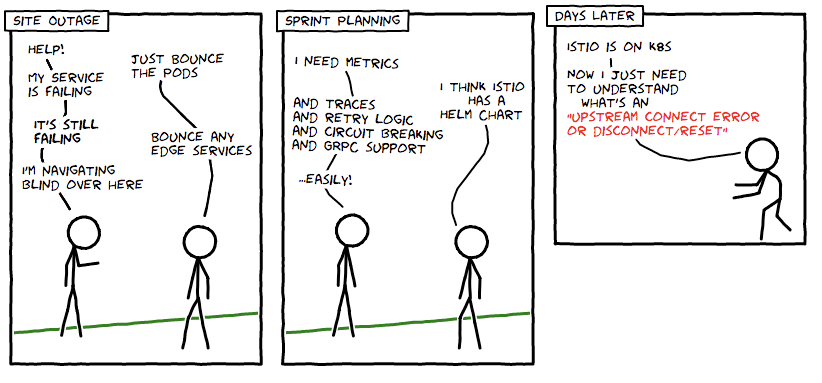

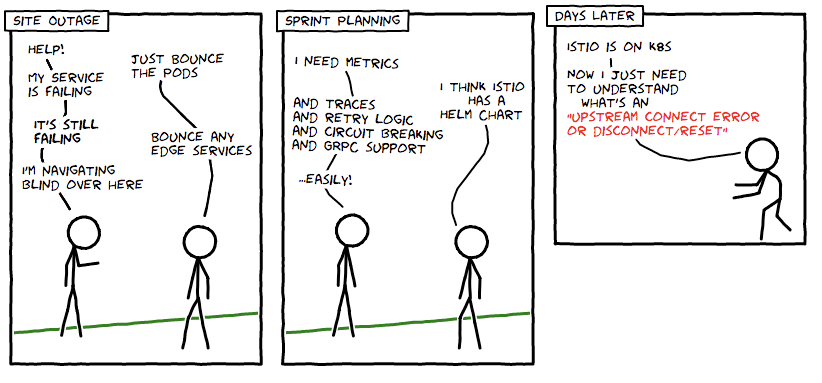

At Namely we’ve been running Istio for a year now. Yes, that’s pretty much when it first came out. We had a major performance regression in a Kubernetes cluster and wanted distributed tracing, so we used Istio to bootstrap Jaeger and investigate.

We immediately saw the potential of a service mesh for our infrastructure and decided to invest in the tool. It hasn’t always been the smoothest ride, but we have learned a ton about how it works and how to operate it. This post, the start of a series, explains how Istio integrates with Kubernetes and shares some operational observations we’ve made along the way.

We’ll go into some technical details but mostly keep things high level, with additional posts to come.

Istio is a service mesh configuration engine. It reads the state of a Kubernetes cluster and pushes updates to L7 (HTTP and gRPC) proxies that run as “sidecars” alongside the application containers in Kubernetes pods.
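
To make the "reads cluster state, updates proxies" idea concrete, here is a toy sketch in Python. It is not how Pilot is implemented, and every name in it (`Pod`, `Service`, `build_proxy_config`) is hypothetical. It only illustrates the general shape: observe services and pods, match them by label selector, and derive a routing table that a sidecar could consume.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pod:
    """Hypothetical, simplified view of a Kubernetes pod."""
    name: str
    ip: str
    labels: Dict[str, str]
    ready: bool = True


@dataclass
class Service:
    """Hypothetical, simplified view of a Kubernetes service."""
    name: str
    port: int
    selector: Dict[str, str]


def build_proxy_config(services: List[Service], pods: List[Pod]) -> Dict[str, List[str]]:
    """Derive a service -> endpoints routing table from observed cluster state."""
    config = {}
    for svc in services:
        config[svc.name] = sorted(
            f"{pod.ip}:{svc.port}"
            for pod in pods
            # Only ready pods whose labels satisfy the whole selector qualify.
            if pod.ready
            and all(pod.labels.get(k) == v for k, v in svc.selector.items())
        )
    return config


if __name__ == "__main__":
    services = [Service("employees", 8080, {"app": "employees"})]
    pods = [
        Pod("employees-1", "10.0.0.4", {"app": "employees"}),
        Pod("employees-2", "10.0.0.5", {"app": "employees"}, ready=False),
        Pod("payroll-1", "10.0.0.9", {"app": "payroll"}),
    ]
    print(build_proxy_config(services, pods))
```

In a real mesh this computation runs continuously as pods come and go, and the result is streamed to the proxies rather than returned from a function call.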

These sidecars are Envoy containers that have been set up to read configuration from the Istio Pilot API (also a gRPC service) and then route traffic based on that configuration. The powerful L7 proxy under the hood lets us use features such as metrics, tracing, retry logic, circuit breaking, load balancing, and canary deployments.
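
Two of those features, canary deployments and retry logic, are easy to picture with a small sketch. The Python below is an illustration only: Envoy does this natively and is driven by Istio configuration, not by code like this. The names `pick_backend`, `route_request`, and `UpstreamError` are made up for the example.

```python
import random
from typing import Callable, Dict, Optional


class UpstreamError(Exception):
    """Raised by a backend call that failed and may be retried."""


def pick_backend(weights: Dict[str, int], rng: random.Random) -> str:
    """Choose a backend in proportion to its weight (a canary-style split)."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def route_request(
    weights: Dict[str, int],
    call: Callable[[str], str],
    max_retries: int = 2,
    rng: Optional[random.Random] = None,
) -> str:
    """Send one request, making up to max_retries extra attempts on failure."""
    rng = rng or random.Random()
    last_error: Optional[UpstreamError] = None
    for _ in range(max_retries + 1):
        backend = pick_backend(weights, rng)
        try:
            return call(backend)
        except UpstreamError as err:
            last_error = err  # retry, possibly against a different backend
    assert last_error is not None
    raise last_error


if __name__ == "__main__":
    # Send roughly 10% of traffic to the canary version "v2".
    split = {"v1": 90, "v2": 10}
    print(route_request(split, lambda backend: f"handled by {backend}"))
```

The point of doing this in a sidecar is that the application never sees any of it: the traffic split and the retries are decided by the proxy's configuration, so they can change without redeploying the service.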

Source: medium.com