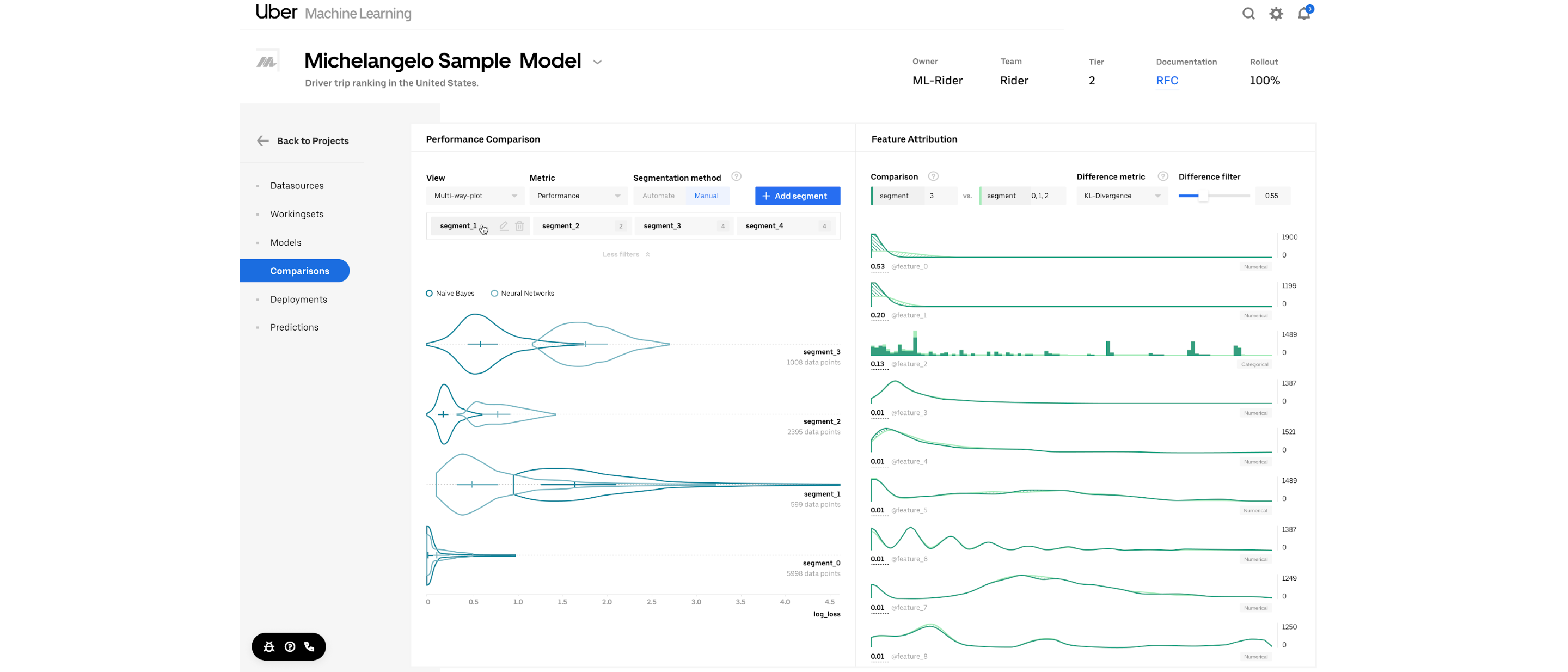

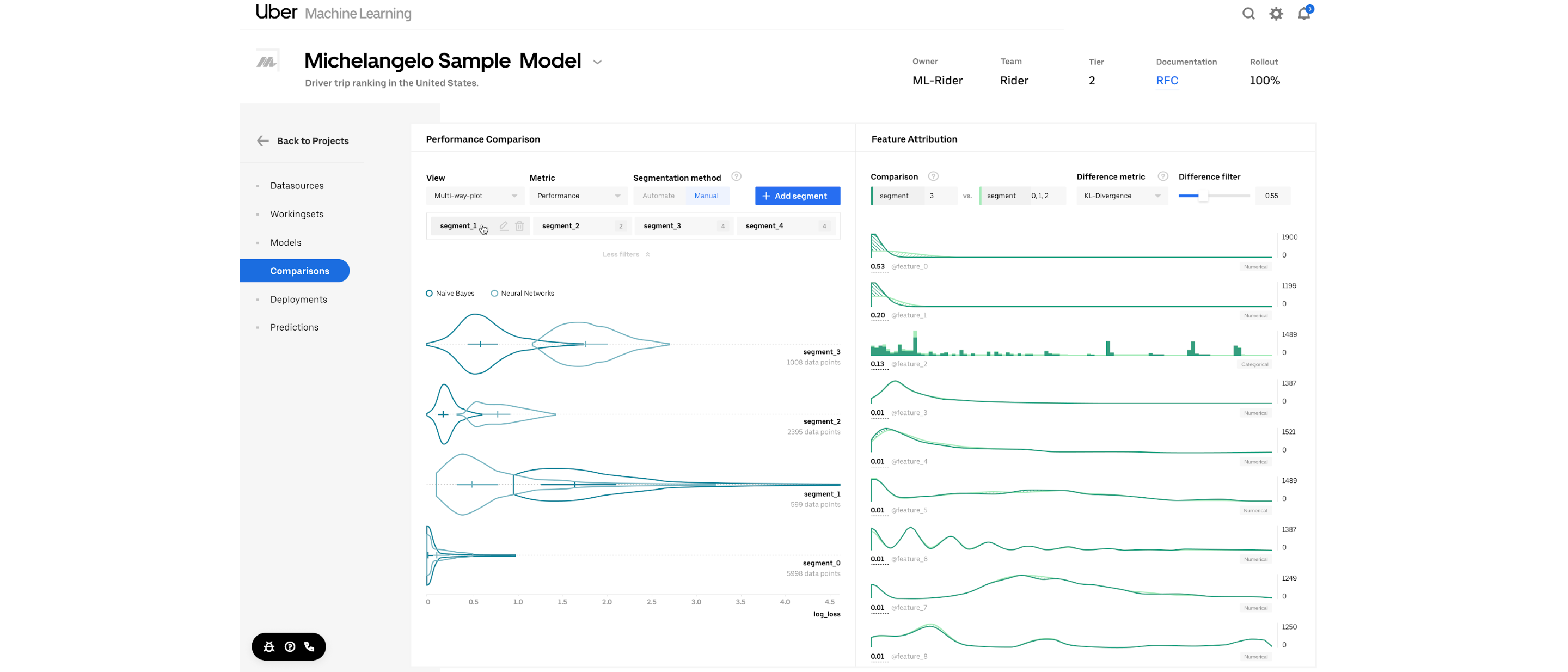

Manifold: A Model-Agnostic Visual Debugging Tool for Machine Learning at Uber

Uber built Manifold, a model-agnostic visualization tool for ML performance diagnosis and model debugging, to facilitate a more informed and actionable model iteration process. Machine learning (ML) is widely used across the Uber platform to support intelligent decision making and forecasting for features such as ETA prediction and fraud detection. For optimal results, we invest significant resources in developing accurate predictive ML models.

In fact, it’s typical for practitioners to devote 20 percent of their effort to building initial working models and 80 percent to improving model performance, in what is known as the 20/80 split rule of ML model development. Traditionally, when data scientists develop models, they evaluate each model candidate using summary scores like log loss, area under curve (AUC), and mean absolute error (MAE). Although these metrics offer insights into how well a model is performing, they convey little about why a model is not performing well, or how to improve its performance.

As such, model builders tend to rely on trial and error when determining how to improve their models.
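To make the limitation of summary scores concrete, here is a minimal sketch in plain Python. It defines the three metrics named above and then compares two hypothetical ETA models on invented trip data (the segment names, `pred_a`, `pred_b`, and all numbers are assumptions made for illustration, not Uber data or Manifold's API). Both models get the same overall MAE, yet their errors are distributed very differently across trip segments.

```python
import math

def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)

def auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. Requires at least one positive
    and one negative label.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical rows: (segment, actual ETA, model A prediction, model B prediction),
# all in minutes. Invented for illustration only.
rows = [
    ("short", 5, 7, 5), ("short", 6, 8, 6),
    ("short", 7, 9, 7), ("short", 8, 10, 8),
    ("long", 30, 32, 34), ("long", 35, 37, 39),
    ("long", 40, 42, 44), ("long", 45, 47, 49),
]

def mae_by_segment(rows, pred_index):
    """Group rows by segment and compute MAE within each group."""
    groups = {}
    for row in rows:
        actuals, preds = groups.setdefault(row[0], ([], []))
        actuals.append(row[1])
        preds.append(row[pred_index])
    return {seg: mean_absolute_error(a, p) for seg, (a, p) in groups.items()}

actual = [r[1] for r in rows]
overall_a = mean_absolute_error(actual, [r[2] for r in rows])
overall_b = mean_absolute_error(actual, [r[3] for r in rows])
by_segment_a = mae_by_segment(rows, 2)
by_segment_b = mae_by_segment(rows, 3)

print("overall MAE:", overall_a, overall_b)
print("model A by segment:", by_segment_a)
print("model B by segment:", by_segment_b)
```

The overall scores cannot distinguish the two models, while the per-segment breakdown shows that the hypothetical model B is exact on short trips and twice as far off on long ones. That kind of question (where is the model wrong, not just how wrong) is what a single summary number leaves unanswered.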

Source: uber.com