Istio and Kubernetes in production. Part 2. Tracing

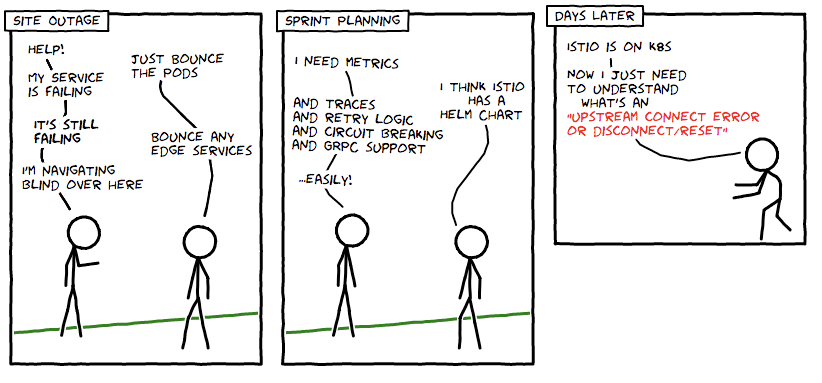

In the previous post, we looked at the building blocks of the Istio service mesh, got familiar with the system, and went through the questions that new Istio users often ask. In this post, we will look at how to collect tracing information about network traffic. Tracing is the first thing that developers and system administrators think of when they hear the term Service Mesh.

Indeed, we add a special proxy server to each microservice, and it handles all of that service's TCP traffic. It is tempting to think that we can now easily collect information about every network event. Unfortunately, in reality there are many nuances to keep in mind.

Let’s have a look at them. What we actually get with relative ease is only a diagram of our system’s nodes connected by arrows, plus the data rate between services (that is, only bytes per unit of time). In most situations, however, the services communicate over some application-layer protocol, such as HTTP, gRPC, or Redis.

And, of course, we want to see tracing information for these protocols: the rate of application-level requests rather than the raw data rate. We also want to know the request latency for each protocol. Finally, we want to see the full path a request takes, from the moment the user's request enters the system to the moment the response is received.
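To make the difference concrete, here is a minimal Python sketch that contrasts what a TCP-level view can report with what an application-level view can report. It is an illustration only: the `Request` record, the sample values, and the function names `l4_view` and `l7_view` are hypothetical and are not part of Istio or its proxy.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """One application-level request (hypothetical record, for illustration)."""
    start: float      # time the request was sent, in seconds
    end: float        # time the response was received, in seconds
    bytes_sent: int   # total bytes transferred for this request


def l4_view(requests, window_seconds):
    """What a TCP-level view can tell us: bytes per second, nothing more."""
    return sum(r.bytes_sent for r in requests) / window_seconds


def l7_view(requests, window_seconds):
    """What an application-level view adds: request rate and latency."""
    latencies = [r.end - r.start for r in requests]
    request_rate = len(requests) / window_seconds
    mean_latency = sum(latencies) / len(latencies)
    return request_rate, mean_latency, max(latencies)


# Assumed sample traffic observed during a 10-second window.
WINDOW = 10.0
requests = [
    Request(start=0.0, end=0.5, bytes_sent=1000),
    Request(start=1.0, end=1.25, bytes_sent=3000),
    Request(start=2.0, end=4.0, bytes_sent=500),   # one slow request
    Request(start=5.0, end=5.25, bytes_sent=500),
]

byte_rate = l4_view(requests, WINDOW)
request_rate, mean_latency, worst_latency = l7_view(requests, WINDOW)

print(f"TCP level: {byte_rate} bytes/s")
print(f"App level: {request_rate} req/s, "
      f"mean latency {mean_latency} s, worst {worst_latency} s")
```

The byte rate alone hides the slow request entirely. Only by understanding request boundaries can we see that one call took two seconds, which is exactly the kind of information we expect from tracing.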

Unfortunately, this is not an easy task.

Source: medium.com