Facebook AI Memory Layer Boosts Network Capacity by a Billion Parameters

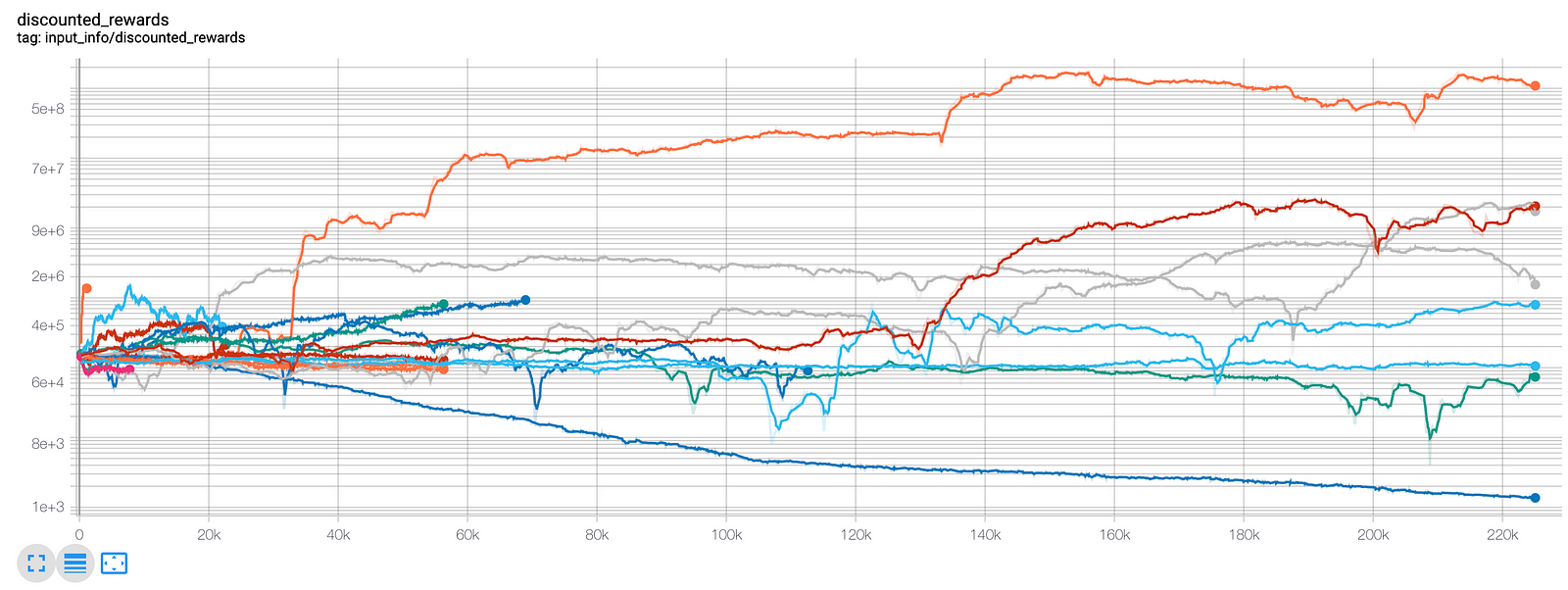

Neural networks are widely used for complex tasks such as machine translation, image classification, and speech recognition. These networks are data-driven: as the amount of data increases, so do network size and the computational cost of training and inference. Recently, Facebook AI Research (FAIR) researchers introduced a structured memory layer that can be easily integrated into a neural network to greatly expand its capacity, adding parameters without significantly increasing computational cost.

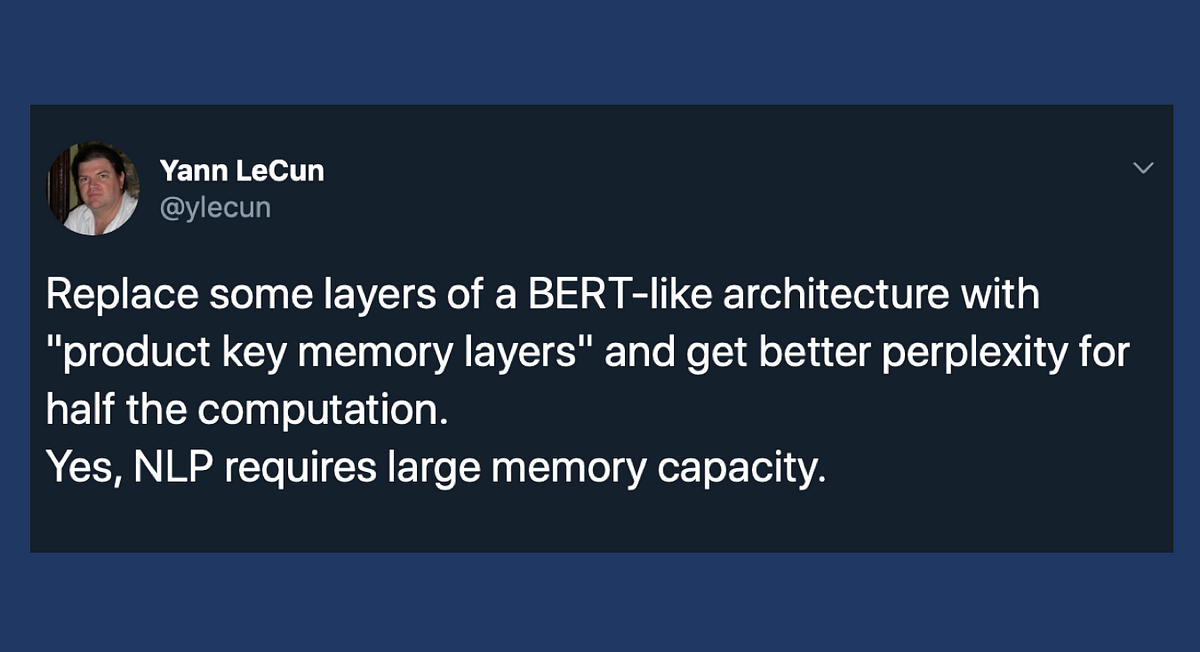

The approach is well suited to natural language processing tasks, and the code has been open-sourced on GitHub. The memory is very large by design and therefore significantly increases the capacity of the architecture, by up to a billion parameters, with negligible computational overhead. Its design and access pattern are based on product keys, which enable fast and exact nearest neighbor search.
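To make the product-key idea concrete, here is a minimal NumPy sketch, not the FAIR implementation. It assumes each memory key is the concatenation of one sub-key from each of two small codebooks, and that a key's score is its dot product with the query. The function names, shapes, and the brute-force reference are all hypothetical and chosen for illustration only.

```python
import numpy as np


def product_key_topk(query, subkeys_1, subkeys_2, k):
    """Exact top-k over the implicit n1 * n2 product keys.

    Illustrative sketch: key (i, j) is concat(subkeys_1[i], subkeys_2[j]),
    so its score against the query splits into two independent halves.
    """
    half = subkeys_1.shape[1]
    q1, q2 = query[:half], query[half:]

    # Score each query half against its own small codebook (n1 + n2 dot products).
    s1 = subkeys_1 @ q1
    s2 = subkeys_2 @ q2

    # Keep the k best sub-keys on each side.
    top1 = np.argsort(-s1)[:k]
    top2 = np.argsort(-s2)[:k]

    # The best k product keys must lie among these k * k candidate pairs,
    # because the score of pair (i, j) is simply s1[i] + s2[j].
    cand = s1[top1][:, None] + s2[top2][None, :]
    flat = np.argsort(-cand, axis=None)[:k]
    i, j = np.unravel_index(flat, cand.shape)

    n2 = subkeys_2.shape[0]
    indices = top1[i] * n2 + top2[j]  # flat index of key (i, j)
    return indices, cand[i, j]


def brute_force_topk(query, subkeys_1, subkeys_2, k):
    """Reference search that materialises all n1 * n2 keys (for checking only)."""
    n1, n2 = subkeys_1.shape[0], subkeys_2.shape[0]
    keys = np.concatenate(
        [np.repeat(subkeys_1, n2, axis=0), np.tile(subkeys_2, (n1, 1))], axis=1
    )
    scores = keys @ query
    idx = np.argsort(-scores)[:k]
    return idx, scores[idx]
```

Under these assumptions the search stays exact because any pair in the overall top k must use a sub-key that ranks in the top k of its own half. The fast path therefore never has to build the full key table.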

The ability to increase the number of parameters while keeping the same computational budget lets the overall system strike a better trade-off between prediction accuracy and computational efficiency, both at training and at test time. According to the researchers, the memory layer allows them to tackle very large-scale language modeling tasks: their experiments use a dataset of up to 30 billion words, with the memory layer plugged into a state-of-the-art transformer-based architecture.

In particular, they report that a memory-augmented model with only 12 layers outperforms a baseline transformer model with 24 layers while being twice as fast at inference time.
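A rough back-of-the-envelope calculation shows how capacity and lookup cost can decouple. The sizes below are illustrative assumptions, not the configuration reported by the researchers: two codebooks of 1,024 sub-keys each, 1,024-dimensional values, and 32 neighbors per query. The helper `memory_stats` is hypothetical.

```python
def memory_stats(n_subkeys, value_dim, k):
    """Count value parameters and per-query score evaluations (illustrative only)."""
    n_slots = n_subkeys ** 2                  # implicit product keys, one value each
    value_params = n_slots * value_dim        # parameters held in the value table
    flat_scores = n_slots                     # naive search: one score per key
    product_scores = 2 * n_subkeys + k * k    # two sub-key searches + k*k candidates
    return {
        "slots": n_slots,
        "value_params": value_params,
        "flat_scores": flat_scores,
        "product_scores": product_scores,
    }


stats = memory_stats(n_subkeys=1024, value_dim=1024, k=32)
for name, value in stats.items():
    print(f"{name}: {value:,}")
```

With these assumed sizes, the value table holds about 1.07 billion parameters, while a query evaluates 3,072 scores instead of roughly one million. This is the sense in which parameters can grow without a matching growth in computation.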

Source: medium.com