How Amazon Is Solving Big-Data Challenges With Data Lakes

Back when Jeff Bezos filled orders in his garage and drove packages to the post office himself, crunching the numbers on costs, tracking inventory, and forecasting future demand was relatively simple. Fast-forward 25 years, and Amazon’s retail business has more than 175 fulfillment centers (FCs) worldwide, with over 250,000 full-time associates shipping millions of items per day. Amazon’s worldwide financial operations team has the incredible task of tracking all of that data (think petabytes).

At Amazon’s scale, a miscalculated metric, like cost per unit, or delayed data can have a huge impact (think millions of dollars). The team is constantly looking for ways to get more accurate data, faster.

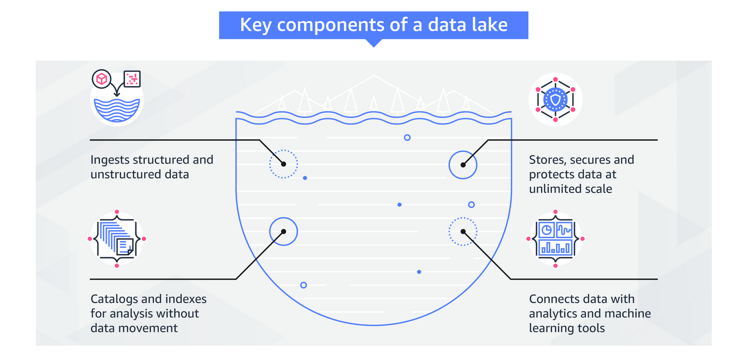

That’s why, in 2019, they had an idea: build a data lake that can support one of the largest logistics networks on the planet. It would later become known internally as the Galaxy data lake. It was built that same year, and teams across the organization are now moving their data into it.

Source: allthingsdistributed.com