Incremental App Migration from VMs to Kubernetes: Planning and Tactics

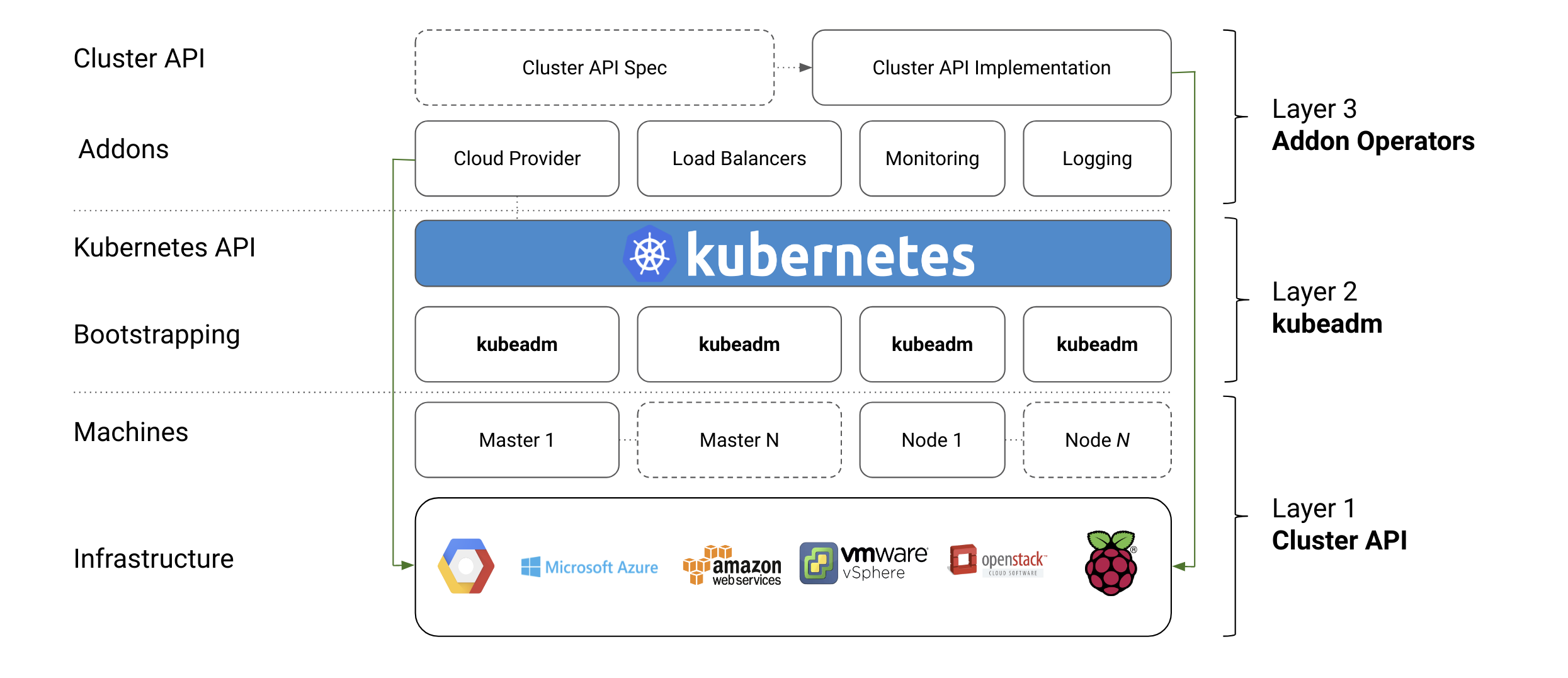

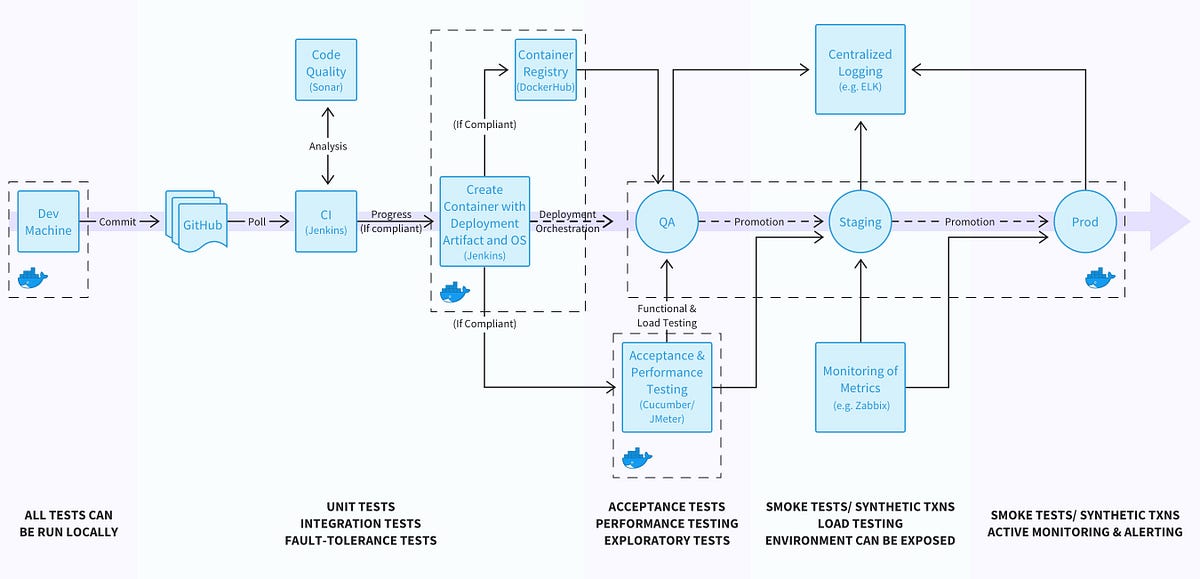

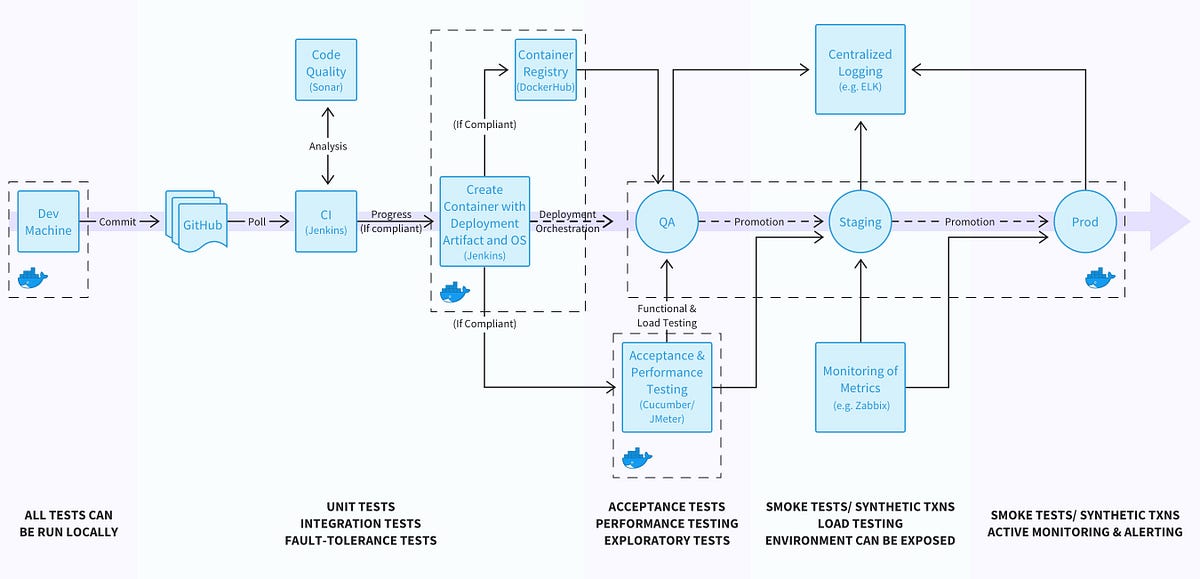

One of the core goals when modernising software systems is to decouple applications from the underlying infrastructure on which they run. This can provide many benefits, including workload portability, integration with cloud AI/ML services, reduced costs, and the ability to improve or delegate specific aspects of security. Containers and orchestration frameworks like Kubernetes can decouple the deployment and execution of applications from the underlying hardware.

In the previous article of this series, I explored how to begin the technical journey within an application modernisation program by deploying an Ambassador API gateway at the edge of your system and routing user traffic across existing VM-based services and newly deployed Kubernetes-based services. This second article builds on that journey: it provides an overview of how you can plan the migration, offers guidance on containerising workloads, and highlights some networking gotchas to watch out for. The next article in the series will look at using a service mesh, like HashiCorp’s Consul, to route service-to-service traffic seamlessly across all platform types, regardless of whether your applications have been containerised.

Source: getambassador.io