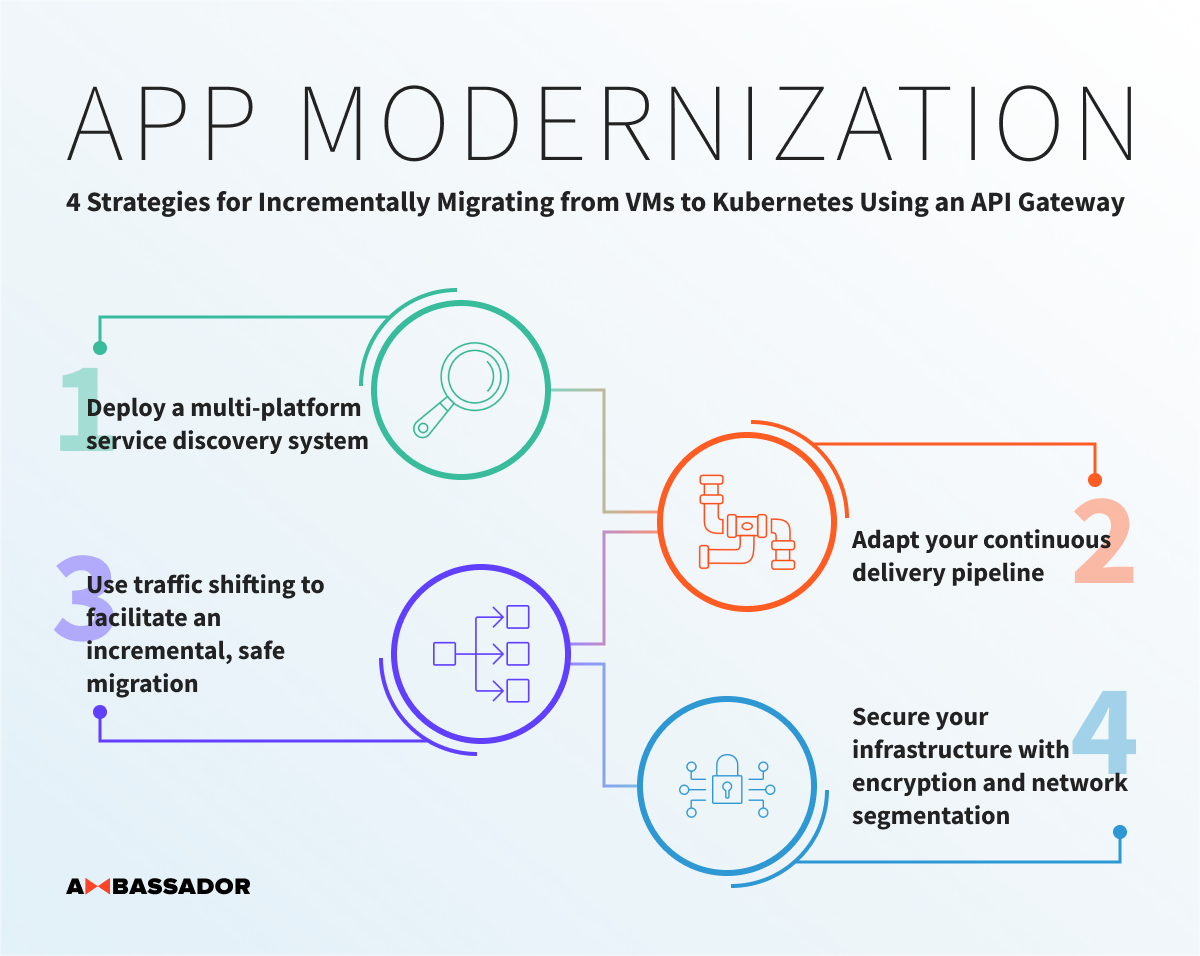

4 Strategies for Incrementally Migrating from VMs to Kubernetes using an API Gateway


An increasing number of organizations are migrating from a datacenter composed of virtual machines (VMs) to a “next-generation” cloud-native platform built around container technologies such as Docker and Kubernetes. Because this move is inherently complex, a migration doesn’t happen overnight. Instead, an organization will typically run a hybrid multi-infrastructure, multi-platform environment in which applications span both VMs and containers.

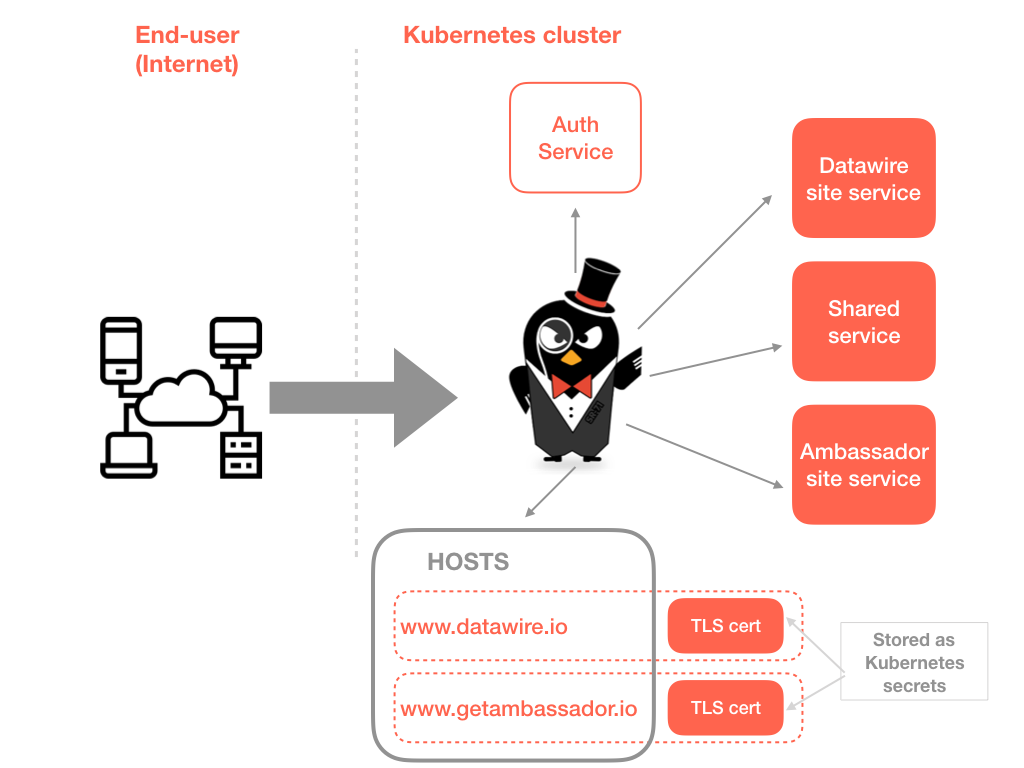

Beginning the migration at the edge of the system with a cloud-native API gateway, and working inwards towards the applications, opens up several strategies for minimizing pain and risk. A recently published article series on the Datawire Ambassador blog presented four strategies for planning and implementing such a migration:

1. Deploying a multi-platform service discovery system that can route effectively within a highly dynamic environment.
2. Adapting the continuous delivery pipeline to take advantage of best practices and avoid pitfalls arising from network complexity.
3. Using traffic shifting to facilitate an incremental and safe migration.
4. Securing the infrastructure with encryption and network segmentation for all traffic, from end user to service.

During a migration to the cloud and containers, it is common to see a combination of existing applications being decomposed into services and new systems being designed in the microservices architecture style.
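Traffic shifting is the most mechanical of these strategies: the gateway sends a configurable percentage of requests to the new Kubernetes-hosted version of a service and the rest to the existing VM-hosted version, and that percentage is raised step by step as confidence grows. The Python below is a minimal simulation of that idea under stated assumptions, not Ambassador's implementation; the backend names `vm` and `k8s`, the functions `choose_backend` and `simulate`, and the rollout schedule are all hypothetical. In an API gateway such as Ambassador, this split would normally be expressed as routing configuration (for instance, weights on routes) rather than as application code.

```python
from __future__ import annotations

import random
from collections import Counter


def choose_backend(weights: dict[str, int], rng: random.Random) -> str:
    """Pick one backend name with probability proportional to its weight."""
    if sum(weights.values()) <= 0:
        raise ValueError("at least one backend needs a positive weight")
    # random.choices does weighted sampling; k=1 returns a one-element list
    return rng.choices(list(weights), weights=list(weights.values()), k=1)[0]


def simulate(k8s_percent: int, requests: int = 10_000, seed: int = 42) -> Counter:
    """Route simulated requests, sending k8s_percent of them to Kubernetes."""
    if not 0 <= k8s_percent <= 100:
        raise ValueError("k8s_percent must be between 0 and 100")
    # Hypothetical backends: the legacy VM deployment and the new Kubernetes one
    weights = {"vm": 100 - k8s_percent, "k8s": k8s_percent}
    rng = random.Random(seed)  # seeded so runs are reproducible
    return Counter(choose_backend(weights, rng) for _ in range(requests))


if __name__ == "__main__":
    # A hypothetical schedule that raises the Kubernetes share step by step.
    # In a real rollout each step would be gated on monitoring, not modelled here.
    for percent in (0, 10, 50, 100):
        print(percent, dict(simulate(percent)))
```

Keeping the weight as the only knob is what makes the migration incremental: rolling back is just lowering the Kubernetes percentage again, with no change to clients.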

Business functionality is often provided via an API that is powered by the collaboration of one or more services, and these components therefore need to be able to locate and communicate with each other.
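As a rough illustration of what "locating" a service means in a hybrid environment, the Python sketch below keeps a toy registry that maps a logical service name to endpoints on either platform, so a caller asks for `orders` without caring whether it runs on a VM or in Kubernetes. `Endpoint`, `ServiceRegistry`, the `orders` service, and the addresses are hypothetical; a production system would rely on a real discovery mechanism (for example, Kubernetes Services and DNS, or a tool such as Consul) with health checking, which this sketch omits.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    host: str
    port: int
    platform: str  # "vm" or "k8s" in this sketch


class ServiceRegistry:
    """Toy registry mapping a logical service name to endpoints on any platform."""

    def __init__(self) -> None:
        self._services: dict[str, list[Endpoint]] = {}

    def register(self, name: str, endpoint: Endpoint) -> None:
        # Idempotent: re-registering the same instance is a no-op
        endpoints = self._services.setdefault(name, [])
        if endpoint not in endpoints:
            endpoints.append(endpoint)

    def deregister(self, name: str, endpoint: Endpoint) -> None:
        # Instances appear and disappear often in a dynamic environment
        endpoints = self._services.get(name, [])
        if endpoint in endpoints:
            endpoints.remove(endpoint)

    def resolve(self, name: str) -> list[Endpoint]:
        """Return every known endpoint for `name`, regardless of platform."""
        endpoints = self._services.get(name)
        if not endpoints:
            raise LookupError(f"no endpoints registered for {name!r}")
        return list(endpoints)  # copy so callers cannot mutate the registry


if __name__ == "__main__":
    registry = ServiceRegistry()
    # Hypothetical "orders" service running on a VM and in Kubernetes at once
    registry.register("orders", Endpoint("10.0.0.5", 8080, "vm"))
    registry.register("orders", Endpoint("orders.default.svc.cluster.local", 80, "k8s"))
    for ep in registry.resolve("orders"):
        print(ep.platform, f"{ep.host}:{ep.port}")
```

The key design point is the indirection: callers depend only on the logical name, so an instance can move from a VM to Kubernetes by registering the new endpoint and deregistering the old one.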

Source: getambassador.io