The Building Blocks of Interpretability

Table of Contents

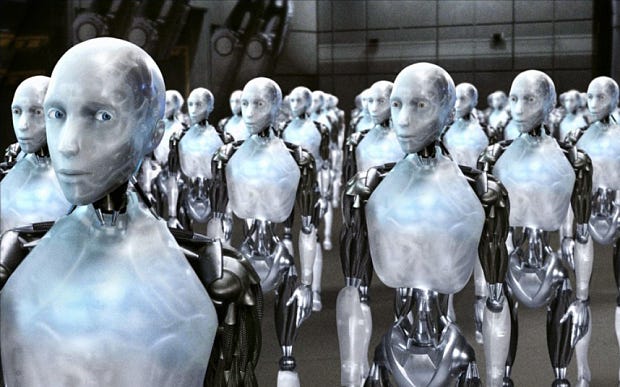

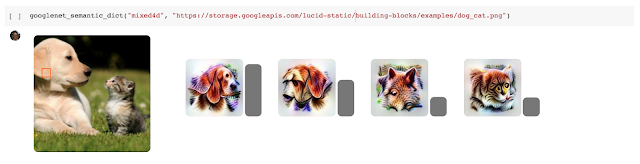

In 2015, our early attempts to visualize how neural networks understand images led to psychedelic images. Soon after, we open sourced our code as DeepDream and it grew into a small art movement producing all sorts of amazing things. But we also continued the original line of research behind DeepDream, trying to address one of the most exciting questions in Deep Learning: how do neural networks do what they do?

Source: googleblog.com