What the History of Math Can Teach Us About the Future of AI

The long history of automation in mathematics offers an even more apt parallel to how computerization, in the form of AI and robots, is likely to affect other kinds of work. If you’re worried about AI-induced mass unemployment or worse, think about this: why didn’t digital computers make mathematicians obsolete? It turns out that human intelligence is not just one trick or technique—it is many.

Digital computers excel at one particular kind of math: arithmetic. Adding up a long column of numbers is quite hard for a human, but trivial for a computer. So when spreadsheet programs like Excel came along and allowed any middle-school child to tot up long sums instantly, the most boring and repetitive mathematical jobs vanished.

A general rule in economics is that a big increase in the supply of a commodity causes prices to fall because demand is fixed. Yet this hasn’t applied to computer power, especially for mathematics. Huge increases in supply have counterintuitively stimulated demand for more, because each boost in raw computational ability and each clever new software algorithm opens another class of problems to computer solution.

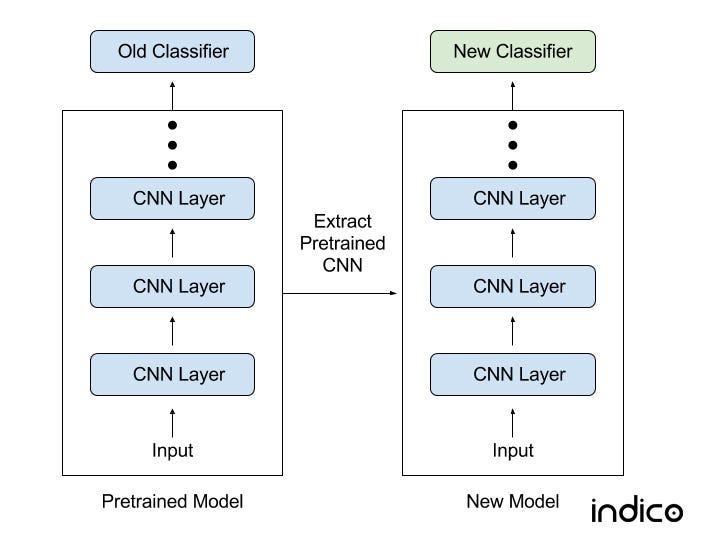

But only with human help. This tells us something important about AI. Like mathematics, intelligence is not just one simple kind of problem, such as pattern recognition.

It’s a huge constellation of tasks of widely differing complexity. So far, the most impressive demonstrations of “intelligent” performance by AI have been programs that play games like chess or Go at superhuman levels. These are tasks that are so difficult for human brains that even the most talented people need years of practice to master them.

Source: scientificamerican.com