Prefrontal cortex as a meta-reinforcement learning system


Recently, AI systems have mastered a range of video games, such as the Atari classics Breakout and Pong. But as impressive as this performance is, AI still relies on the equivalent of thousands of hours of gameplay to reach and surpass the performance of human players. In contrast, we can usually grasp the basics of a video game we have never played before in a matter of minutes.

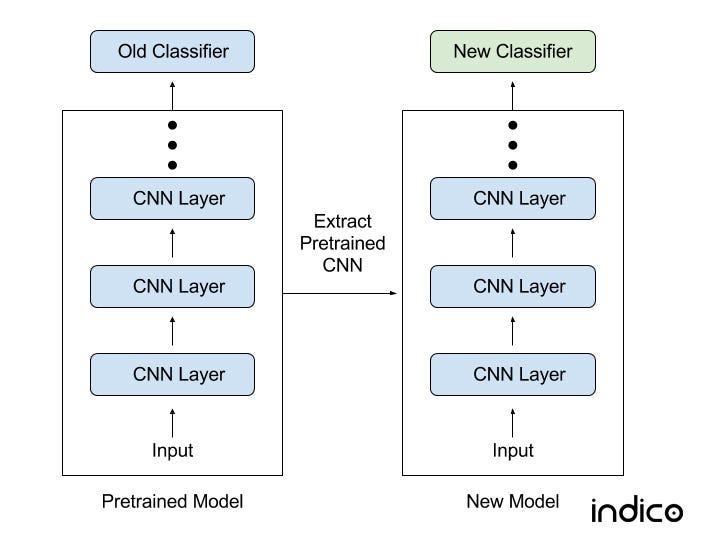

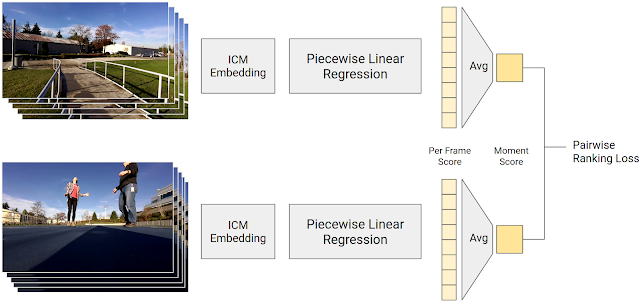

The question of why the brain is able to do so much more with so much less has given rise to the theory of meta-learning, or ‘learning to learn’. It is thought that we learn on two timescales: in the short term we focus on learning about specific examples, while over longer timescales we learn the abstract skills or rules required to complete a task. It is this combination that is thought to help us learn efficiently and apply that knowledge rapidly and flexibly to new tasks.
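To make the two timescales concrete, here is a minimal toy sketch in Python. It is an illustration only, not the method from our paper: the fast loop learns which arm of a single two-armed bandit pays off, while the slow loop compares learning rates across many such tasks to find the one that makes fast learning work best. The function names (`run_episode`, `meta_learn`), the reward probabilities, and the 10% exploration rate are all assumptions chosen for the example.

```python
import random


def run_episode(alpha, arm_probs, steps, rng):
    """Fast timescale: learn about one specific task (a two-armed bandit)."""
    values = [0.5, 0.5]  # initial reward estimate for each arm
    total = 0.0
    for _ in range(steps):
        # Explore 10% of the time (assumed rate), otherwise act greedily.
        if rng.random() < 0.1:
            arm = rng.randrange(2)
        else:
            arm = 0 if values[0] >= values[1] else 1
        reward = 1.0 if rng.random() < arm_probs[arm] else 0.0
        # Move the chosen arm's estimate toward the observed reward.
        values[arm] += alpha * (reward - values[arm])
        total += reward
    return total / steps  # average reward within this task


def meta_learn(candidate_alphas, n_tasks=200, steps=20, seed=0):
    """Slow timescale: across many tasks, score each candidate learning rate."""
    rng = random.Random(seed)
    scores = {alpha: 0.0 for alpha in candidate_alphas}
    for _ in range(n_tasks):
        # Each task hides the good arm in a random position (assumed odds).
        arm_probs = [0.9, 0.1] if rng.randrange(2) == 0 else [0.1, 0.9]
        for alpha in candidate_alphas:
            scores[alpha] += run_episode(alpha, arm_probs, steps, rng)
    # Average reward per task for each learning rate.
    return {alpha: total / n_tasks for alpha, total in scores.items()}


if __name__ == "__main__":
    results = meta_learn([0.0, 0.1, 0.5])
    best = max(results, key=results.get)
    print(results, "best learning rate:", best)
```

In this sketch, what carries over between tasks is not knowledge of which arm is good (that changes every task) but a setting for how to learn quickly. That is the sense in which the slow loop "learns to learn", though in a far simpler form than a trained agent.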

Recreating this meta-learning structure in AI systems, an approach called meta-reinforcement learning, has proven very fruitful in facilitating fast, one-shot learning in our agents (see our paper and closely related work from OpenAI). However, the specific mechanisms that allow this process to take place in the brain are still largely unexplained in neuroscience.

Source: deepmind.com