News

Kubernetes Metrics and Monitoring

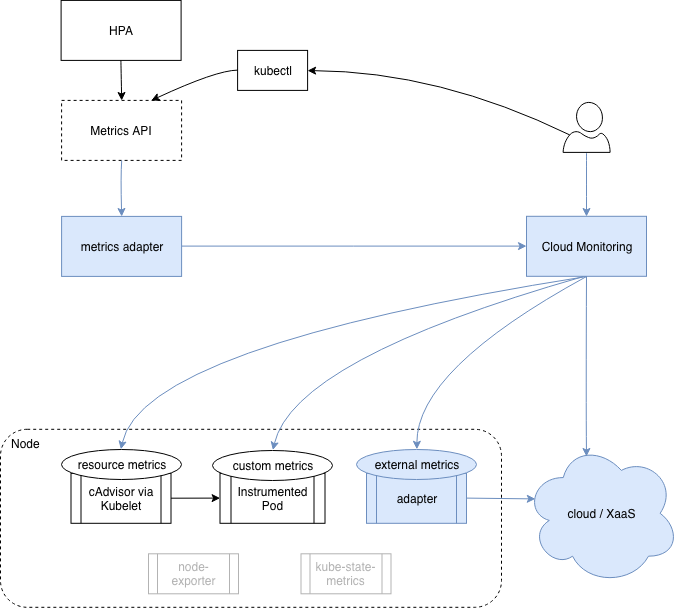

This post explores the current state of metrics and monitoring in Kubernetes by walking through the gradual thought process that I experienced when learning this topic. Kubernetes needs some metrics for its basic out-of-the-box functionality, like autoscaling and scheduling. It needs them regardless of any monitoring solution you may want for troubleshooting and alerting.


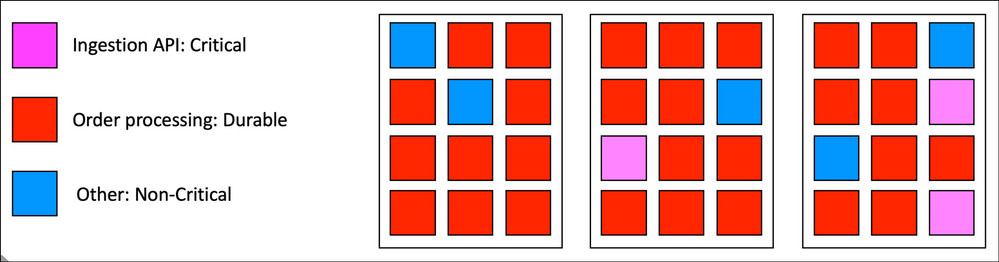

Kubernetes Operations: Prioritize Workload in Overcommitted Clusters

One of the benefits of adopting a system like Kubernetes is that it facilitates burstable and scalable workloads. Horizontal application scaling involves adding or removing instances of an application to match demand. The Kubernetes Horizontal Pod Autoscaler automates pod scaling based on demand.


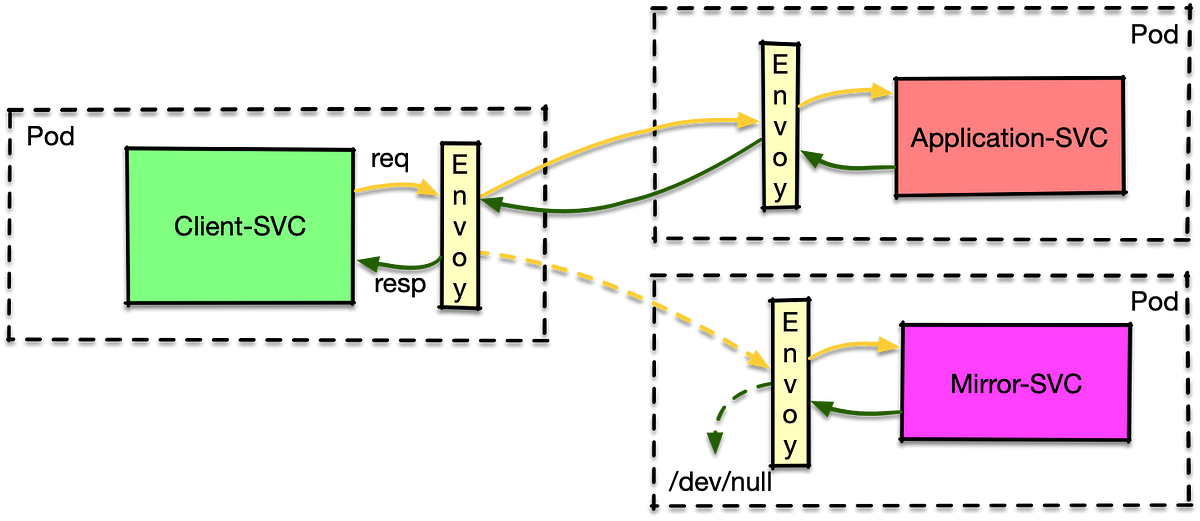

Use Istio traffic mirroring for quicker debugging

Often when an error occurs, especially in production, one needs to debug the application to create a fix. Unfortunately, the input that triggered the issue is gone, and the test data on file does not reproduce the error (otherwise it would have been fixed before delivery).


When AWS Autoscale Doesn’t

The premise behind autoscaling in AWS is simple: you can maximize your ability to handle load spikes and minimize costs if you automatically scale your application out based on metrics like CPU or memory utilization. If you need 100 Docker containers to support your load during the day but only 10 when load is lower at night, running 100 containers at all times means that you’re using 900% more capacity than you need every night. With a constant container count, either you’re spending more money than you need to most of the time, or your service will likely fall over during a load spike.


Kubernetes at CERN: Use Cases, Integration and Challenges


Istio and Kubernetes in production. Part 2. Tracing

In the previous post, we took a look at the building blocks of the Istio service mesh, got familiar with the system, and went through the questions that new Istio users often ask. In this post, we will look at how to organize the collection of tracing information over the network. The first thing that developers and system administrators think about when they hear the term "service mesh" is tracing.


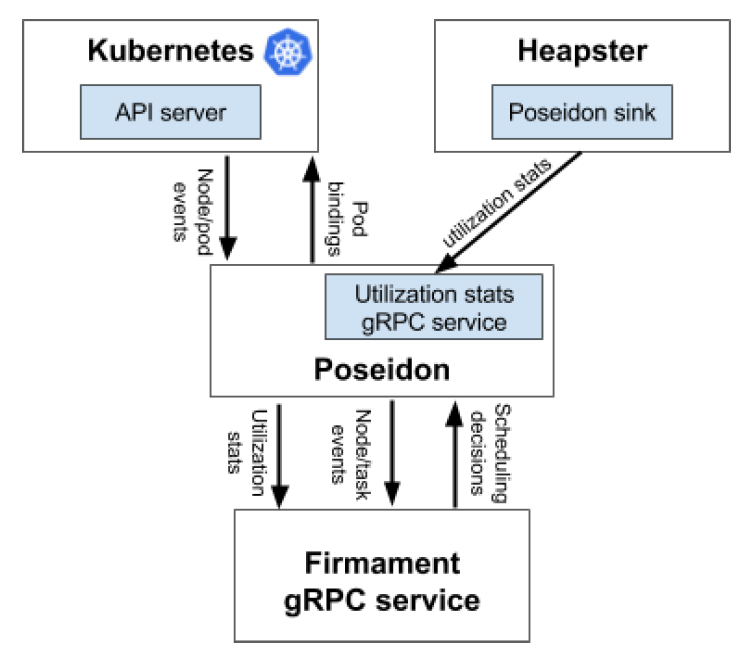

Poseidon-Firmament Scheduler – Flow Network Graph Based Scheduler

In this blog post, we briefly describe the novel Firmament flow-network-graph-based scheduling approach (OSDI paper) in Kubernetes. We specifically describe the Firmament scheduler and how it integrates with the Kubernetes cluster manager, using Poseidon as the integration glue. We have seen extremely impressive scheduling throughput in benchmarks of this approach.
