How Waze predicts carpools with Google Cloud’s AI Platform

Waze’s mission is to eliminate traffic and we believe our carpool feature is a cornerstone that will help us achieve it. In our carpool apps, a rider (or a driver) is presented with a list of users that are relevant for their commute (see below). From there, the rider or the driver can initiate an offer to carpool, and if the other side accepts it, it’s a match and a carpool is born.

Read More

OpenAI releases powerful text generator

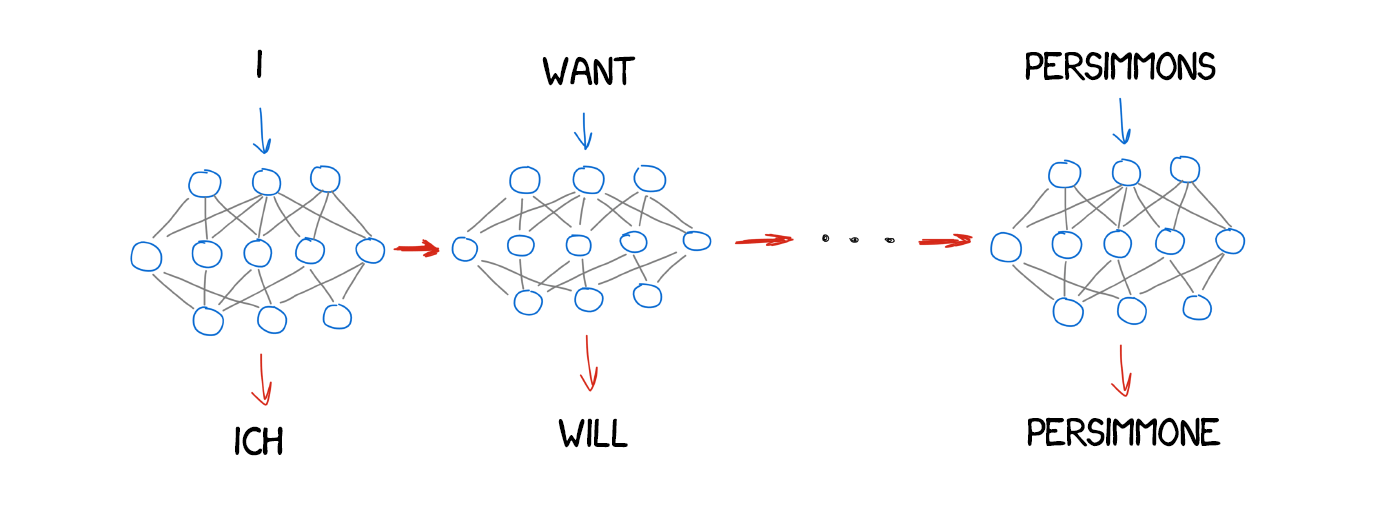

The laboratory, co-founded by Elon Musk and recently backed by a $1 billion investment from Microsoft, has designed text generators that create readable passages virtually indistinguishable from those written by humans. OpenAI’s machine learning approach scrapes massive amounts of data from the web and analyzes it for statistical patterns that allow it to realistically predict which letters or words are likely to come next. When users feed a word, a phrase, or a longer text snippet into the generator, it expands on the input with convincingly humanlike text.

Read More

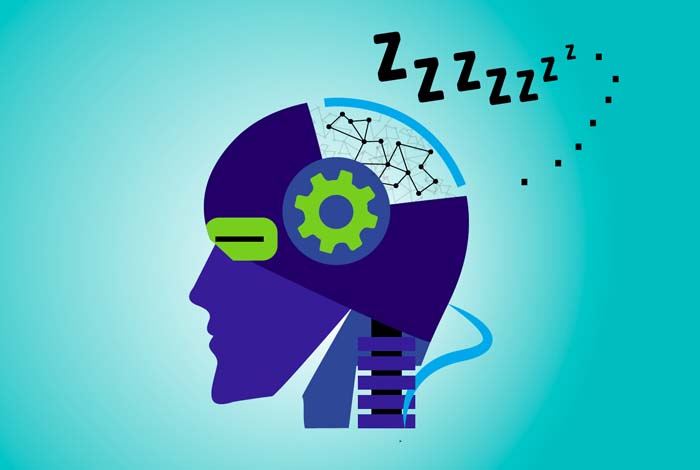

Artificial brains may need sleep too

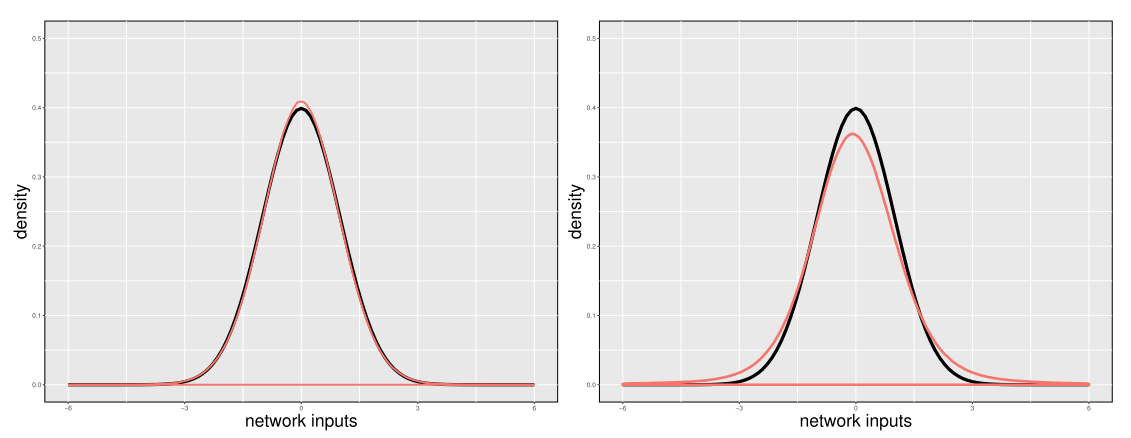

No one can say whether androids will dream of electric sheep, but they will almost certainly need periods of rest that offer benefits similar to those that sleep provides to living brains, according to new research from Los Alamos National Laboratory. The research team, led by Watkins, found that the network simulations became unstable after continuous periods of unsupervised learning. When the researchers exposed the networks to states analogous to the waves that living brains experience during sleep, stability was restored.

Read More

5 Essential Papers on AI Training Data

Many data scientists claim that around 80% of their time is spent on data preprocessing, and for good reason: collecting, annotating, and formatting data are crucial tasks in machine learning. This article will help you understand the importance of these tasks and learn methods and tips from other researchers. Below, we highlight academic papers from reputable universities and research teams on various training data topics.

Read More

Top 10 Best FREE Artificial Intelligence Courses

Most Machine Learning, Deep Learning, Computer Vision, and NLP job positions, and in general every Artificial Intelligence (AI) job position, require at least a bachelor’s degree in Computer Science, Electrical Engineering, or a similar field. If your degree comes from one of the world’s best universities, your chances of beating the competition in a job interview may be higher. Realistically, though, most people cannot afford to attend the world’s top universities: not all of us are geniuses, many of us don’t have thousands of dollars to spend, and some of us come from poorer countries (as we do).

Read More

The Hateful Memes AI Challenge

We’ve built and are now sharing a data set designed specifically to help AI researchers develop new systems to identify multimodal hate speech. This content combines different modalities, such as text and images, making it difficult for machines to understand. The Hateful Memes data set contains 10,000+ new multimodal examples created by Facebook AI.

Read More

Ultimate Guide to Natural Language Processing Courses

Selecting an online course that matches your requirements can be very frustrating if you have high standards. Most courses are not comprehensive, and much of the time spent on them is wasted. How would you feel if someone gave you a critical path, telling you exactly which modules, and in which order, will give you comprehensive, expert-level knowledge?

Read More

Word2Vec: A Comparison Between CBOW, SkipGram & SkipGramSI

Learn how different Word2Vec architectures behave in practice. In this article, we look at how the neural network architectures for training a Word2Vec model (CBOW, SkipGram, and SkipGramSI) compare, to help you make an informed decision about which one to use for the problem you are trying to solve.
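
To make the difference between the architectures concrete, here is a minimal sketch of how training examples could be built from a tokenized sentence. It assumes a symmetric context window and whitespace tokens, and the function names are hypothetical; real Word2Vec implementations add subsampling and negative sampling on top. CBOW turns each window into one example that predicts the center word from its context, skip-gram turns it into several (center, context) pairs, and the subword variant (assumed here to mean skip-gram with subword information, as in fastText) additionally represents each word by its character n-grams.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Skip-gram: every (center, context) pair is a separate training example."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.extend((center, tokens[j]) for j in range(lo, hi) if j != i)
    return pairs


def cbow_examples(tokens: List[str], window: int = 2) -> List[Tuple[List[str], str]]:
    """CBOW: the whole context window jointly predicts the center word."""
    examples = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        examples.append(([tokens[j] for j in range(lo, hi) if j != i], center))
    return examples


def char_ngrams(word: str, n_min: int = 3, n_max: int = 6) -> List[str]:
    """fastText-style subword units: n-grams of '<word>' plus the padded word itself."""
    padded = f"<{word}>"
    grams = [padded[i:i + n] for n in range(n_min, n_max + 1)
             for i in range(len(padded) - n + 1)]
    if padded not in grams:
        grams.append(padded)
    return grams


tokens = "the quick brown fox".split()
print(skipgram_pairs(tokens, window=1))
print(cbow_examples(tokens, window=1))
print(char_ngrams("where", 3, 3))
```

With a window of 1, the four-word sentence yields four CBOW examples but six skip-gram pairs, which is one intuition for why skip-gram trains more slowly but sees rare words more often.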

Read More

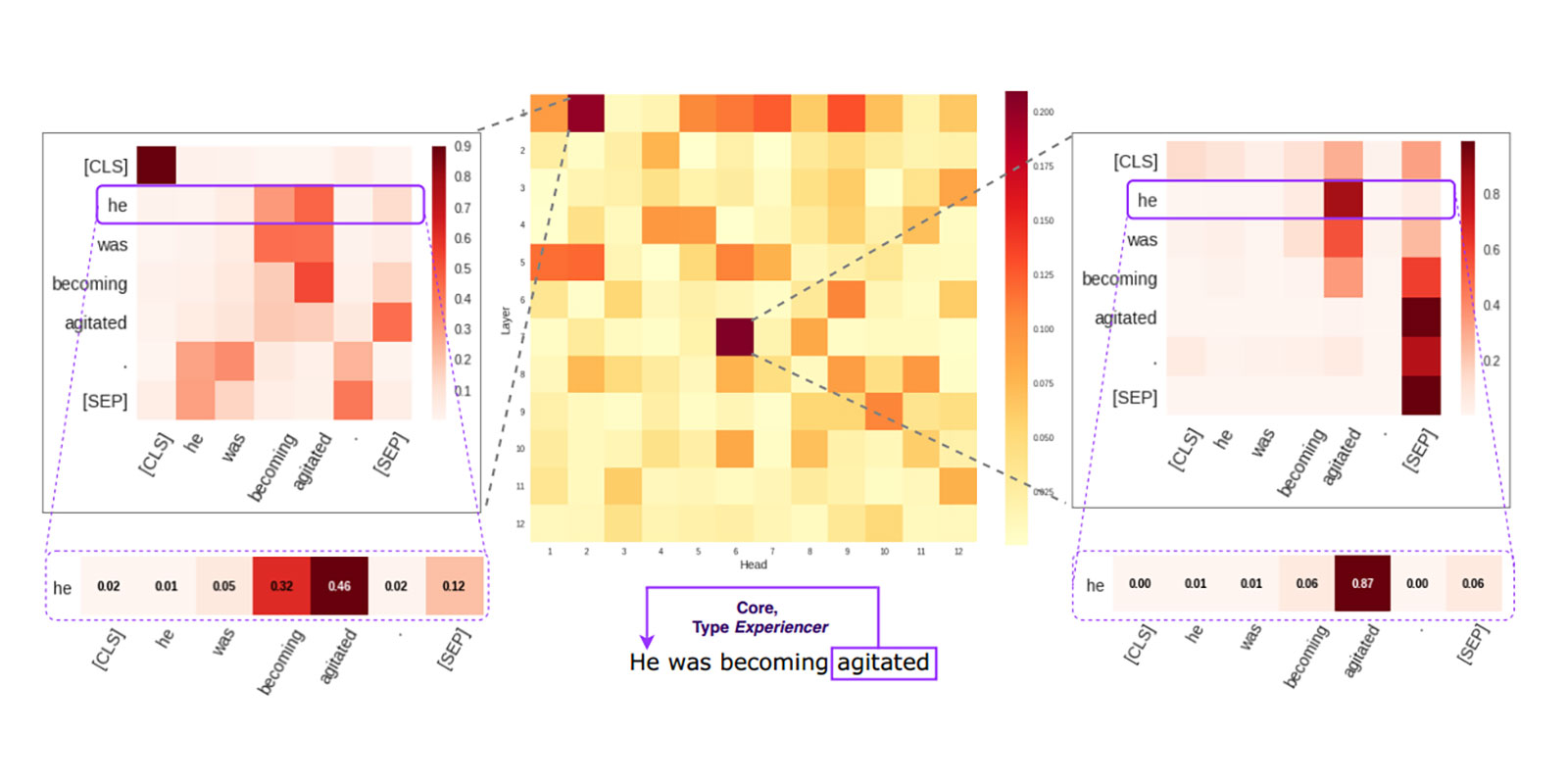

The Dark Secrets Of BERT

BERT stands for Bidirectional Encoder Representations from Transformers. The model is essentially a multi-layer bidirectional Transformer encoder (Devlin, Chang, Lee, & Toutanova, 2019), and there are several excellent guides to how it works in general, including The Illustrated Transformer. Here we focus on one specific component of the Transformer architecture known as self-attention.
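
A minimal, single-head sketch of scaled dot-product self-attention, softmax(QKᵀ/√d_k)V, may help here. It is written in plain Python for self-containment; the tiny identity weight matrices are placeholders, and real BERT layers add multiple heads, learned projections, masking, and residual connections.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over token vectors x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights


# Three 2-dimensional token vectors; identity projections are placeholders.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of `attn` shows how much one token attends to every token in the sequence, including itself; the output for that token is the correspondingly weighted mix of value vectors.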

Read More

The Best NLP Papers From ICLR 2020

I went through the 687 papers accepted to the ICLR 2020 virtual conference (out of 2,594 submissions, up 63% from 2019!) and identified 9 papers with the potential to advance the use of deep learning NLP models in everyday use cases.

Read More

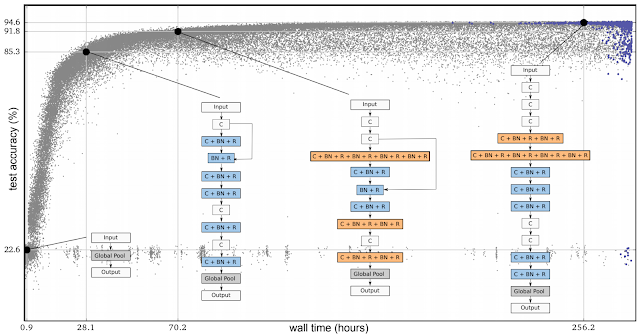

A Hacker’s Guide to Efficiently Train Deep Learning Models

Three months ago, I participated in a data science challenge held at my company. The goal was to help a marine researcher better identify whales based on the appearance of their flukes. More specifically, for each image in a test set, we were asked to predict the 20 most similar images from the full database (train + test).
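
One common way to frame this task is nearest-neighbor retrieval over image embeddings. The sketch below assumes each image has already been mapped to an embedding vector (the dictionary and image IDs are hypothetical) and ranks every other image by cosine similarity. It illustrates the retrieval step only and is not the author's actual solution.

```python
import heapq
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_similar(query_id: str, embeddings: Dict[str, Sequence[float]], k: int = 20) -> List[str]:
    """Return the IDs of the k images whose embeddings are most similar to the query's."""
    query = embeddings[query_id]
    scored = ((cosine(query, emb), image_id)
              for image_id, emb in embeddings.items() if image_id != query_id)
    return [image_id for _, image_id in heapq.nlargest(k, scored)]


# Hypothetical 2-D embeddings for four fluke images.
embeddings = {"img_a": [1.0, 0.0], "img_b": [0.9, 0.1], "img_c": [0.0, 1.0], "img_d": [-1.0, 0.0]}
print(top_k_similar("img_a", embeddings, k=2))
```

`heapq.nlargest` avoids fully sorting the database, which matters when k is 20 and the database holds many thousands of images.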

Read More

A state-of-the-art open source chatbot

Facebook AI has built and open-sourced Blender, the largest-ever open-domain chatbot. It outperforms others in terms of engagement and also feels more human, according to human evaluators. The culmination of years of research in conversational AI, this is the first chatbot to blend a diverse set of conversational skills — including empathy, knowledge, and personality — together in one system.

Read More

Facebook AI, AWS partner to release new PyTorch libraries

Facebook AI and AWS have partnered to release new PyTorch libraries. As part of the broader PyTorch community, engineers from both companies developed libraries targeted at large-scale, elastic, and fault-tolerant model training and at high-performance PyTorch model deployment. These libraries enable the community to efficiently productionize AI models at scale and push the state of the art in model exploration as model architectures continue to grow in size and complexity.

Read More

MIT CSAIL TextFooler Framework Tricks Leading NLP Systems

A team of researchers at the MIT Computer Science & Artificial Intelligence Lab (CSAIL) recently released a framework called TextFooler which successfully tricked state-of-the-art NLP models (such as BERT) into making incorrect predictions.

Read More

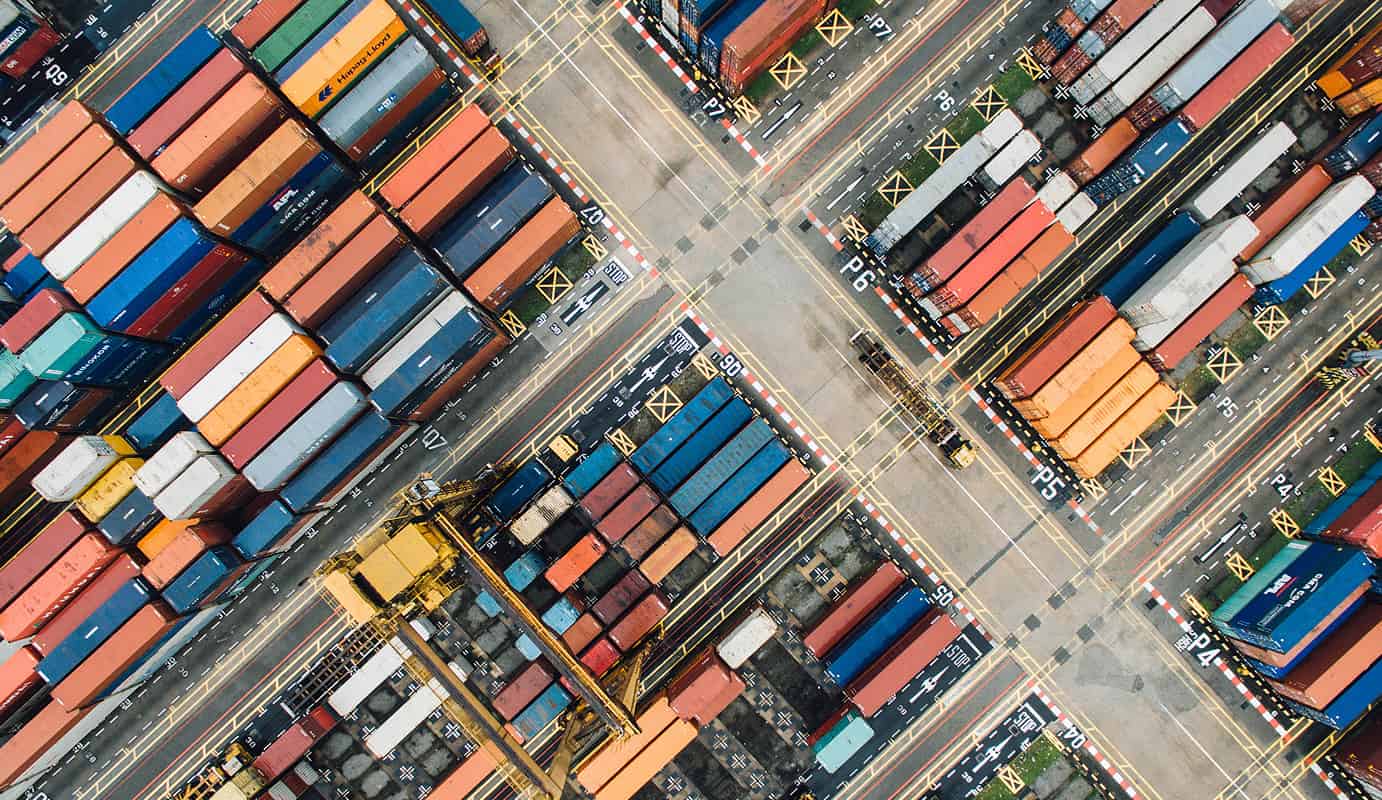

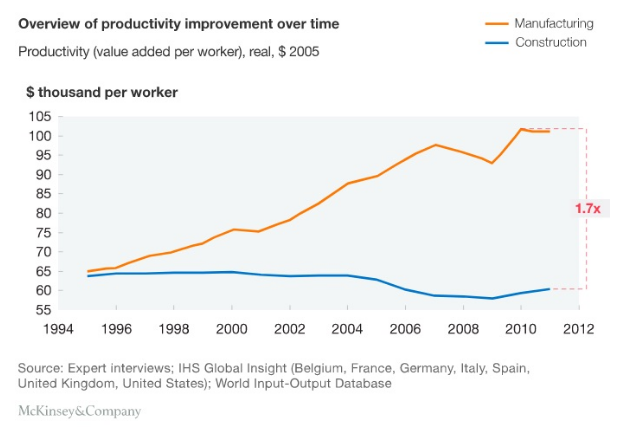

When machine learning packs an economic punch

A new study co-authored by an MIT economist shows that improved translation software can significantly boost international trade online, a notable case of machine learning having a clear impact on economic activity. The research finds that after eBay improved its automatic translation program in 2014, commerce shot up by 10.9 percent among pairs of countries where people could use the new system. To put the results in perspective, the MIT economist adds, consider that physical distance is, by itself, also a significant barrier to global commerce.

Read More

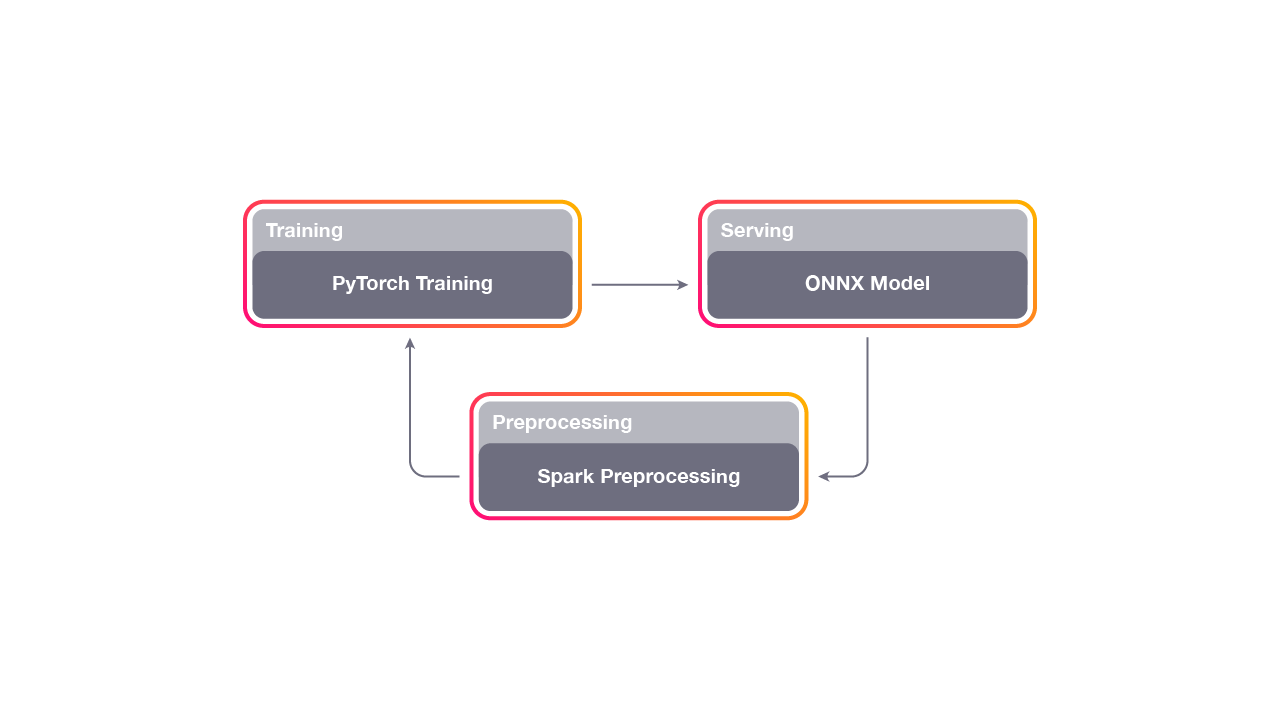

OpenAI standardizes on PyTorch

We are standardizing OpenAI’s deep learning framework on PyTorch. In the past, we implemented projects in many frameworks depending on their relative strengths. We’ve now chosen to standardize to make it easier for our team to create and share optimized implementations of our models.

Read More

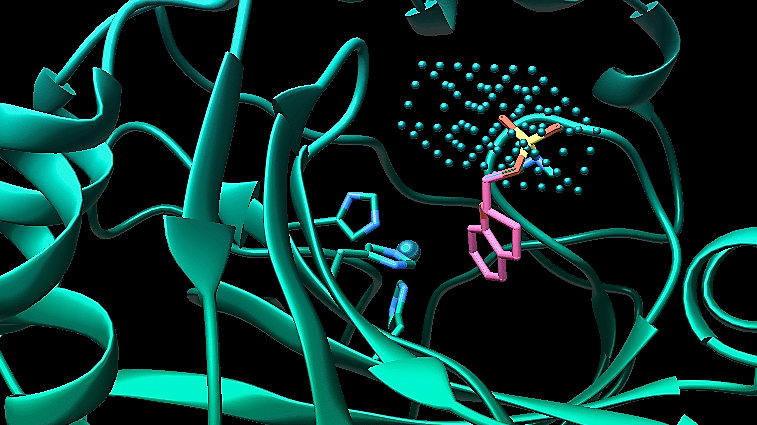

Artificial intelligence yields new antibiotic

Using a machine-learning algorithm, MIT researchers have identified a powerful new antibiotic compound. In laboratory tests, the drug killed many of the world’s most problematic disease-causing bacteria, including some strains that are resistant to all known antibiotics. It also cleared infections in two different mouse models.

Read More

Deep Learning for Anomaly Detection

Anomalies, often referred to as outliers, are data points or patterns in data that do not conform to a notion of normal behavior. Anomaly detection, then, is the task of finding those patterns in data that do not adhere to expected norms. The capability to recognize or detect anomalous behavior can provide highly useful insights across industries.
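
As a point of reference before any deep model, here is a classical statistical baseline, not a deep learning method: the modified z-score, which flags points whose distance from the median is large relative to the median absolute deviation (MAD). The 0.6745 constant and the 3.5 cutoff follow a common rule of thumb and should be treated as assumptions to tune per dataset; the readings are hypothetical.

```python
import statistics
from typing import List


def mad_outliers(values: List[float], threshold: float = 3.5) -> List[int]:
    """Indices of points whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        # Degenerate case: at least half the points equal the median,
        # so any point that differs from it is treated as anomalous.
        return [i for i, v in enumerate(values) if v != median]
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - median) / mad) > threshold]


readings = [10, 11, 9, 10, 12, 10, 95]  # hypothetical sensor readings
print(mad_outliers(readings))
```

Medians, unlike means, are barely moved by the anomaly itself, which is why this baseline is often preferred over a plain z-score.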

Read More

How we built the good first issues feature

We’ve recently launched good first issues recommendations to help new contributors find easy gateways into open source projects. Read about the machine learning engine behind these recommendations. GitHub is leveraging machine learning (ML) to help more people contribute to open source.

Read More

Introducing the AI Index 2019 Report

We’re excited to release the AI Index 2019 Report, one of the most comprehensive studies about AI to date. Because AI touches so many aspects of society, the Index takes an interdisciplinary approach by design, analyzing and distilling patterns about AI’s broad global impact on everything from national economies to job growth, research and public perception.

Read More

Personalizing Spotify Home with Machine Learning

Machine learning is at the heart of everything we do at Spotify. Especially on Spotify Home, where it enables us to personalize the user experience and provide billions of fans the opportunity to enjoy and be inspired by the artists on our platform. This is what makes Spotify unique.

Read More

Building a document understanding pipeline with Google Cloud

Document understanding is the practice of using AI and machine learning to extract data and insights from text and paper sources such as emails, PDFs, scanned documents, and more. In the past, capturing this unstructured or “dark data” has been an expensive, time-consuming, and error-prone process requiring manual data entry. Today, AI and machine learning have made great advances towards automating this process, enabling businesses to derive insights from and take advantage of this data that had been previously untapped.

Read More

Google Research Use of Concept Vectors for Image Search

Google recently released research about creating a tool for searching Similar Medical Images Like Yours (SMILY). The research uses embeddings for image-based search and allows users to influence the search through the interactive refinement of concepts.

Read More

The Effects of Mixing Machine Learning and Human Judgment

In 1997, IBM’s Deep Blue beat World Chess Champion Garry Kasparov in a six-game match. Since then, other programs have beaten human players in games ranging from Jeopardy to Go. Inspired by his loss, Kasparov decided in 2005 to test the success of Human+AI pairs in an online chess tournament.

Read More

Understanding Convolutional Neural Networks

A Convolutional Neural Network (CNN) is a class of deep, feed-forward artificial neural networks most commonly applied to analyzing visual imagery. The architecture of these networks was loosely inspired by biological neurons that communicate with each other and generate outputs dependent on the inputs. Though work on CNNs started in the early 1980s, they only became popular with recent technology advancements and computational capabilities that allow the processing of large amounts of data and the training of sophisticated algorithms in a reasonable amount of time.
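
The core operation of a CNN layer can be sketched in a few lines. Like most deep learning libraries, this helper computes a "valid" cross-correlation (the kernel is not flipped) and calls it convolution; a real layer would also add a bias, multiple channels, stride, and padding. The image and kernel are illustrative values.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2-D cross-correlation (no padding, stride 1), as CNN layers compute it."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


# A dark-to-bright vertical edge and a horizontal-difference kernel.
image = [[0, 0, 1, 1]] * 3
edge_kernel = [[-1, 1]]
print(conv2d(image, edge_kernel))
```

The output responds only where the intensity changes, which is the kind of local feature a CNN's first layers learn to detect; in a trained network the kernel values are learned rather than hand-chosen.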

Read More

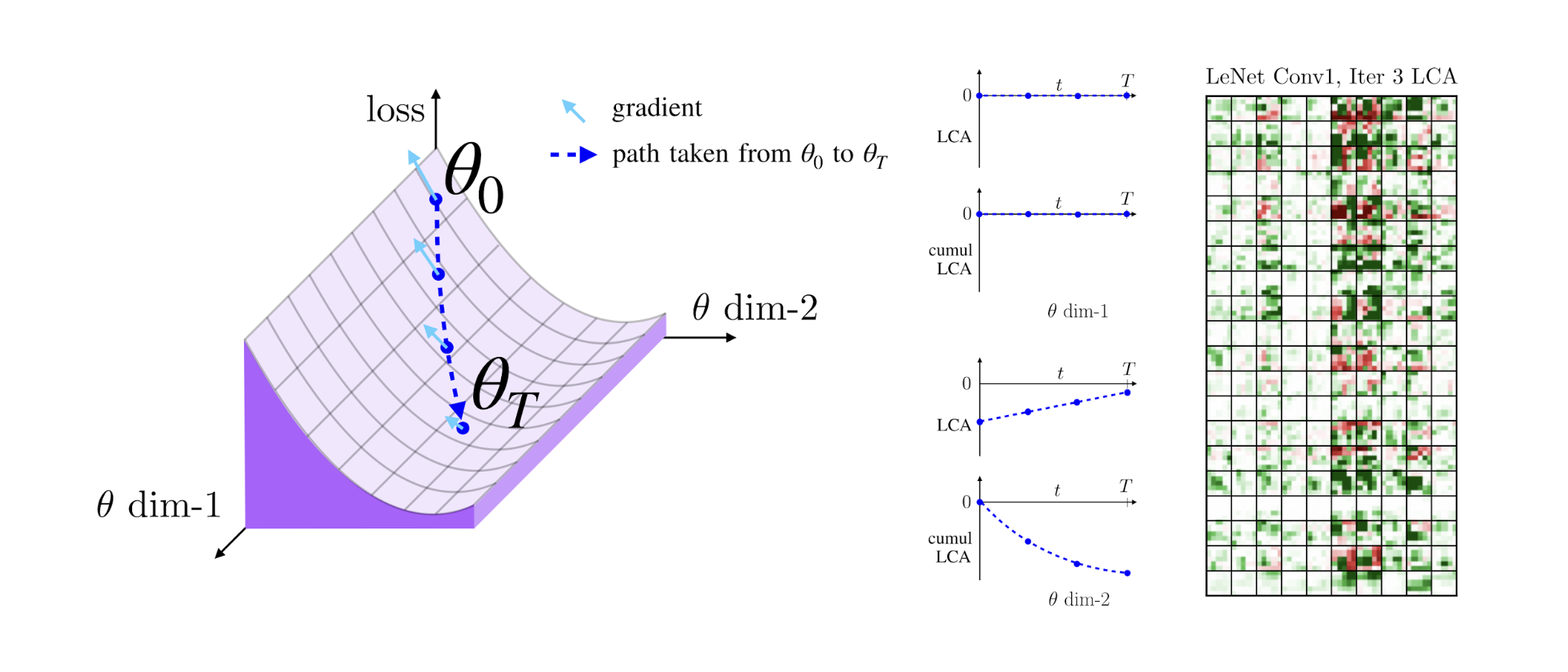

Introducing LCA: Loss Change Allocation for Neural Network Training

Neural networks (NNs) have become ubiquitous over the last decade and now power machine learning across the industry. At Uber, we use NNs for a variety of purposes, including detecting and predicting object motion for self-driving vehicles, responding more quickly to customers, and building better maps. While many NNs perform quite well at their tasks, networks are fundamentally complex systems, and their training and operation are still poorly understood.
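
Loss Change Allocation splits each training step's change in loss among individual parameters by approximating the path integral of the gradient along that step. The sketch below is a simplified, hypothetical version for plain gradient descent that uses the trapezoid rule, which happens to be exact for quadratic losses; the Uber paper's own integration scheme may differ. Negative values mean a parameter's movement helped lower the loss.

```python
from typing import Callable, List

Vector = List[float]


def loss_change_allocation(grad: Callable[[Vector], Vector], theta0: Vector,
                           lr: float, steps: int) -> List[Vector]:
    """Per-step, per-parameter loss change for plain gradient descent.

    Each entry approximates the integral of dL/dtheta_i along the step using the
    trapezoid rule; negative values mean that parameter's move lowered the loss.
    """
    theta = list(theta0)
    allocations = []
    for _ in range(steps):
        g_old = grad(theta)
        new_theta = [p - lr * g for p, g in zip(theta, g_old)]
        g_new = grad(new_theta)
        allocations.append([0.5 * (go + gn) * (pn - p)
                            for go, gn, pn, p in zip(g_old, g_new, new_theta, theta)])
        theta = new_theta
    return allocations


# Hypothetical quadratic loss L = sum(0.5 * a_i * theta_i^2).
curvature = [1.0, 4.0]


def loss(th: Vector) -> float:
    return sum(0.5 * a * t * t for a, t in zip(curvature, th))


def grad(th: Vector) -> Vector:
    return [a * t for a, t in zip(curvature, th)]


lca = loss_change_allocation(grad, [1.0, 1.0], lr=0.1, steps=5)
per_param = [sum(step[i] for step in lca) for i in range(2)]
print(per_param)
```

Because the allocations sum to the total change in loss, they can be read as a ledger of which parameters actually did the learning on each step.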

Read More

Replay in biological and artificial neural networks

Our waking and sleeping lives are punctuated by fragments of recalled memories: a sudden connection in the shower between seemingly disparate thoughts, or an ill-fated choice decades ago that haunts us as we struggle to fall asleep. By measuring memory retrieval directly in the brain, neuroscientists have noticed something remarkable: spontaneous recollections often occur as very fast sequences of multiple memories. These so-called ‘replay’ sequences play out in a fraction of a second, so fast that we’re not necessarily aware of the sequence.

Read More

Powered by AI: Oculus Insight

To unlock the full potential of virtual reality (VR) and augmented reality (AR) experiences, the technology needs to work anywhere, adapting to the spaces where people live and how they move within those real-world environments. When we developed Oculus Quest, the first all-in-one, completely wire-free VR gaming system, we knew we needed positional tracking that was precise, accurate, and available in real time — within the confines of a standalone headset, meaning it had to be compact and energy efficient. At last year’s Oculus Connect event we shared some details about Oculus Insight, the cutting-edge technology that powers both Quest and Rift S. Now that both of those products are available, we’re providing a deeper look at the AI systems and techniques that power this VR technology.

Read More

Deep probabilistic modelling with Pyro

The success of deep neural networks in areas as diverse as image recognition and natural language processing has been outstanding in recent years. However, classical machine learning and deep learning algorithms can only propose the most probable solutions and are not able to adequately model uncertainty.
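
To show what modelling uncertainty adds over a single most probable answer, here is a small conjugate example in plain Python rather than Pyro: a Beta prior over an unknown success rate, updated with observed counts. Pyro targets far more general models (for example via stochastic variational inference or MCMC); this only illustrates the idea of keeping a full posterior, and the counts are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPosterior:
    """Beta(alpha, beta) belief over an unknown success probability."""
    alpha: float = 1.0  # Beta(1, 1) is a uniform prior
    beta: float = 1.0

    def update(self, successes: int, failures: int) -> "BetaPosterior":
        # Conjugacy: observing Bernoulli outcomes just adds to the counts.
        return BetaPosterior(self.alpha + successes, self.beta + failures)

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def std(self) -> float:
        a, b = self.alpha, self.beta
        return math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))


prior = BetaPosterior()
few = prior.update(successes=3, failures=1)       # 3 of 4 trials succeeded
many = prior.update(successes=300, failures=100)  # 300 of 400 trials succeeded
# Both posteriors share the same most probable rate (mode 0.75),
# but the spread around it is very different.
print(f"few:  mean={few.mean():.3f}  std={few.std():.3f}")
print(f"many: mean={many.mean():.3f}  std={many.std():.3f}")
```

A point estimate would report 0.75 in both cases; the posterior additionally says how much that estimate should be trusted.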

Read More

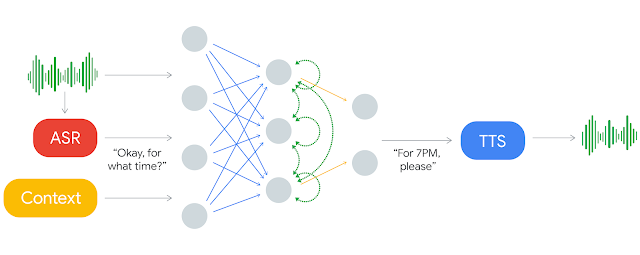

Speak to me: How voice commerce is revolutionizing commerce

We’ve seen profound advances in technology, especially in artificial intelligence and deep learning, which are increasingly used in voice assistants. This, in turn, promises to bring about huge changes in consumer behavior, giving rise to what’s being called “voice commerce”. This is a new channel, governed by a new set of rules.

Read More

New advances in natural language processing

Natural language understanding (NLU) and language translation are key to a range of important applications, including identifying and removing harmful content at scale and connecting people across different languages worldwide. Although deep learning–based methods have accelerated progress in language processing in recent years, current systems are still limited when it comes to tasks for which large volumes of labeled training data are not readily available. Recently, Facebook AI has achieved impressive breakthroughs in NLP using semi-supervised and self-supervised learning techniques, which leverage unlabeled data to improve performance beyond purely supervised systems.

Read More

Teaching Computers to Answer Complex Questions

Computerized question-answering systems usually take one of two approaches. Either they do a text search and try to infer the semantic relationships between entities named in the text, or they explore a hand-curated knowledge graph, a data structure that directly encodes relationships among entities. With complex questions, however — such as “Which Nolan films won an Oscar but missed a Golden Globe?” — both of these approaches run into difficulties.
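
The knowledge-graph approach can be illustrated with set operations over (subject, predicate, object) triples. The graph below is entirely hypothetical (placeholder films, directors, and awards, not real award data); a complex question of the kind quoted above becomes an intersection followed by a difference.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]

# Entirely hypothetical toy graph: placeholder names, not real award data.
GRAPH: Set[Triple] = {
    ("film_a", "directed_by", "director_x"),
    ("film_b", "directed_by", "director_x"),
    ("film_c", "directed_by", "director_x"),
    ("film_d", "directed_by", "director_y"),
    ("film_a", "won", "award_1"),
    ("film_b", "won", "award_1"),
    ("film_b", "won", "award_2"),
    ("film_d", "won", "award_1"),
}


def subjects(graph: Set[Triple], predicate: str, obj: str) -> Set[str]:
    """All subjects s such that (s, predicate, obj) is in the graph."""
    return {s for s, p, o in graph if p == predicate and o == obj}


def won_one_but_not_other(graph: Set[Triple], director: str, won: str, missed: str) -> Set[str]:
    films = subjects(graph, "directed_by", director)
    return (films & subjects(graph, "won", won)) - subjects(graph, "won", missed)


print(won_one_but_not_other(GRAPH, "director_x", "award_1", "award_2"))
```

The query is trivial once the relationships are encoded; the hard part, as the paragraph notes, is that hand-curated graphs are incomplete and free text does not come pre-structured like this.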

Read More

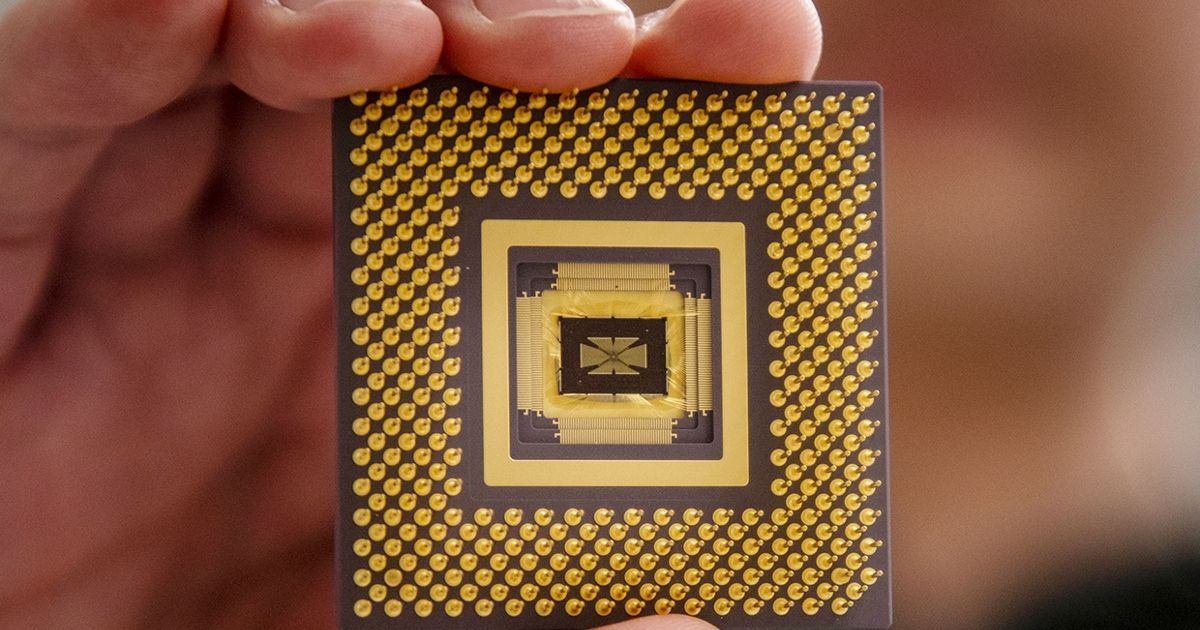

First Programmable Memristor Computer

Michigan team builds memristors atop standard CMOS logic to demo a system that can do a variety of edge computing AI tasks.

Hoping to speed AI and neuromorphic computing and cut down on power consumption, startups, scientists, and established chip companies have all been looking to do more computing in memory rather than in a processor’s computing core. Memristors and other nonvolatile memory seem to lend themselves to the task particularly well. However, most demonstrations of in-memory computing have been in standalone accelerator chips that either are built for a particular type of AI problem or that need the off-chip resources of a separate processor in order to operate.

Read More

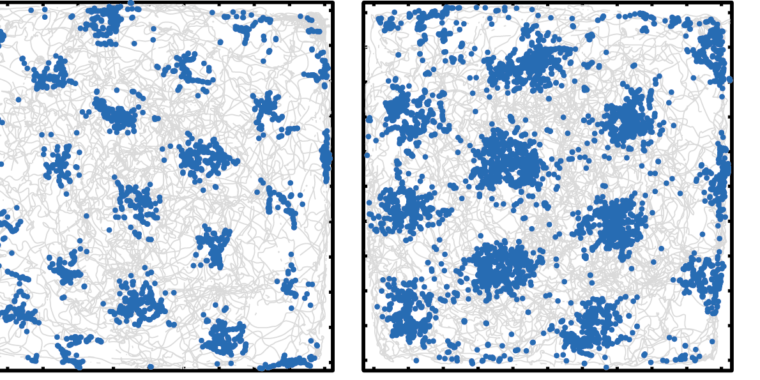

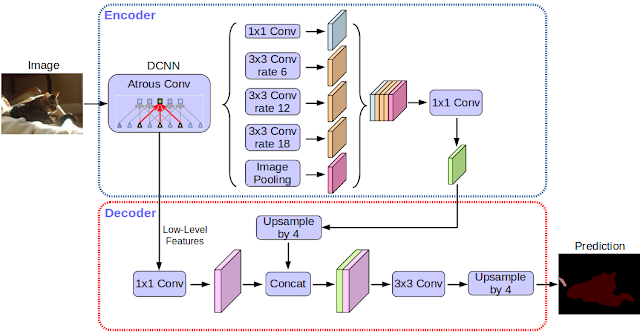

Mapping roads through deep learning and weakly supervised training

Creating accurate maps today is a painstaking, time-consuming manual process, even with access to satellite imagery and mapping software. Many regions — particularly in the developing world — remain largely unmapped. To help close this gap, Facebook AI researchers and engineers have developed a new method that uses deep learning and weakly supervised training to predict road networks from commercially available high-resolution satellite imagery.

Read More

Introducing EvoGrad: A Lightweight Library for Gradient-Based Evolution

Tools that enable fast and flexible experimentation democratize and accelerate machine learning research. Take for example the development of libraries for automatic differentiation, such as Theano, Caffe, TensorFlow, and PyTorch: these libraries have been instrumental in catalyzing machine learning research, enabling gradient descent training without the tedious work of hand-computing derivatives. In these frameworks, it’s simple to experiment by adjusting the size and depth of a neural network, by changing the error function that is to be optimized, and even by inventing new architectural elements, like layers and activation functions, all without having to worry about how to derive the resulting gradient of improvement.
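
The convenience these libraries provide rests on automatic differentiation. A minimal forward-mode sketch using dual numbers is shown below; it is illustrative only (frameworks such as PyTorch use reverse mode for training, and EvoGrad's internals are not reproduced here), and the `Dual`, `tanh`, and `derivative` names are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import Callable, Union


@dataclass(frozen=True)
class Dual:
    """A value paired with its derivative: forward-mode automatic differentiation."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(x: Union[Dual, float]) -> Dual:
        # Plain numbers are constants, so their derivative is zero.
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other: Union[Dual, float]) -> Dual:
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    def __mul__(self, other: Union[Dual, float]) -> Dual:
        o = Dual.lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __radd__ = __add__
    __rmul__ = __mul__


def tanh(x: Dual) -> Dual:
    t = math.tanh(x.val)
    return Dual(t, (1.0 - t * t) * x.der)  # chain rule with d/dx tanh = 1 - tanh^2


def derivative(f: Callable[[Dual], Dual], x: float) -> float:
    """Seed the input with derivative 1 and read the derivative off the output."""
    return f(Dual(x, 1.0)).der


print(derivative(lambda x: 3 * x * x + 2 * x + 1, 2.0))
print(derivative(lambda x: tanh(2 * x), 0.0))
```

Each new operation only needs its local derivative rule; composing operations then yields exact derivatives of arbitrary expressions, which is what lets researchers swap layers and loss functions freely.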

Read More

Panel: First Steps with Machine Learning

This panel is a very diverse group, and I’m actually going to let them introduce themselves rather than me trying to butcher any names. This is all about answering my need, literally, my first steps. What should I be focused on as a software engineer wanting to get into ML and start using it more, and how do I convince leadership of the things I want to do?

Read More

Using natural language processing to manage healthcare records

The next time you see your physician, consider the times you fill in a paper form. It may seem trivial, but the information could be crucial to making a better diagnosis. Now consider the other forms of healthcare data that permeate your life—and that of your doctor, nurses, and the clinicians working to keep patients thriving.

Read More

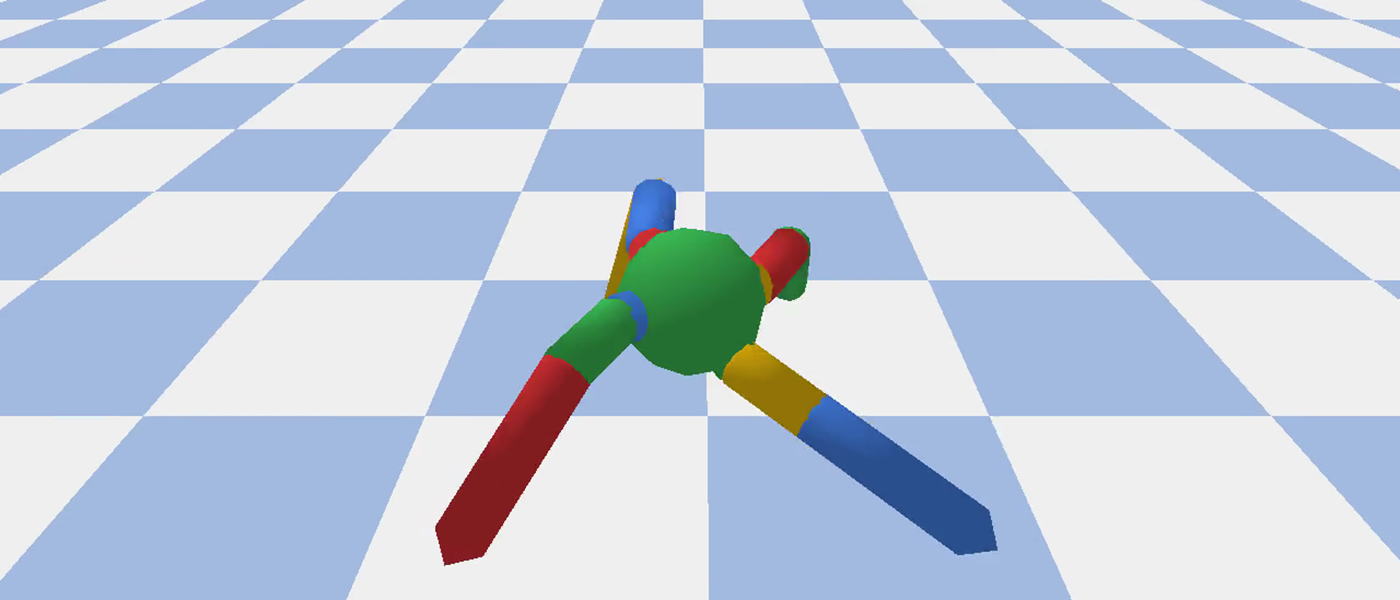

How to run evolution strategies on Google Kubernetes Engine

Reinforcement learning (RL) has become popular in the machine learning community as more and more people have seen its impressive performance in games, chess, and robotics. In previous blog posts, we’ve shown you how to run RL algorithms on AI Platform, utilizing both Google’s powerful computing infrastructure and intelligently managed training services such as Bayesian hyperparameter optimization. In this blog, we introduce Evolution Strategies (ES) and show how to run ES algorithms on Google Kubernetes Engine (GKE).
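
A basic evolution strategies loop can be sketched as follows, assuming a fitness function to maximize: perturb the parameters with Gaussian noise, evaluate each perturbation, and step along the reward-weighted average of the noise. This is a simplified, serial version with a mean baseline and a hypothetical quadratic fitness; on GKE, the population evaluations are the part that would be spread across workers, and practical implementations often add fitness shaping and mirrored sampling.

```python
import random
from typing import Callable, List


def evolution_strategies(fitness: Callable[[List[float]], float], theta: List[float],
                         sigma: float = 0.1, lr: float = 0.05, pop: int = 50,
                         iters: int = 200, seed: int = 0) -> List[float]:
    """Maximize `fitness` with a basic Gaussian evolution strategy."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(iters):
        # Sample one perturbation per population member and score it. This loop is
        # the embarrassingly parallel part a cluster would split across workers.
        noise = [[rng.gauss(0.0, 1.0) for _ in theta] for _ in range(pop)]
        rewards = [fitness([t + sigma * e for t, e in zip(theta, eps)]) for eps in noise]
        baseline = sum(rewards) / pop  # subtracting the mean reduces variance
        grad = [sum((r - baseline) * eps[d] for r, eps in zip(rewards, noise)) / (pop * sigma)
                for d in range(len(theta))]
        theta = [t + lr * g for t, g in zip(theta, grad)]  # ascend the estimated gradient
    return theta


target = [3.0, -2.0]  # hypothetical optimum


def fitness(x: List[float]) -> float:
    return -sum((a - b) ** 2 for a, b in zip(x, target))


print(evolution_strategies(fitness, [0.0, 0.0]))
```

No gradient of `fitness` is ever computed directly, which is why ES works even when the reward comes from a non-differentiable environment rollout.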

Read More

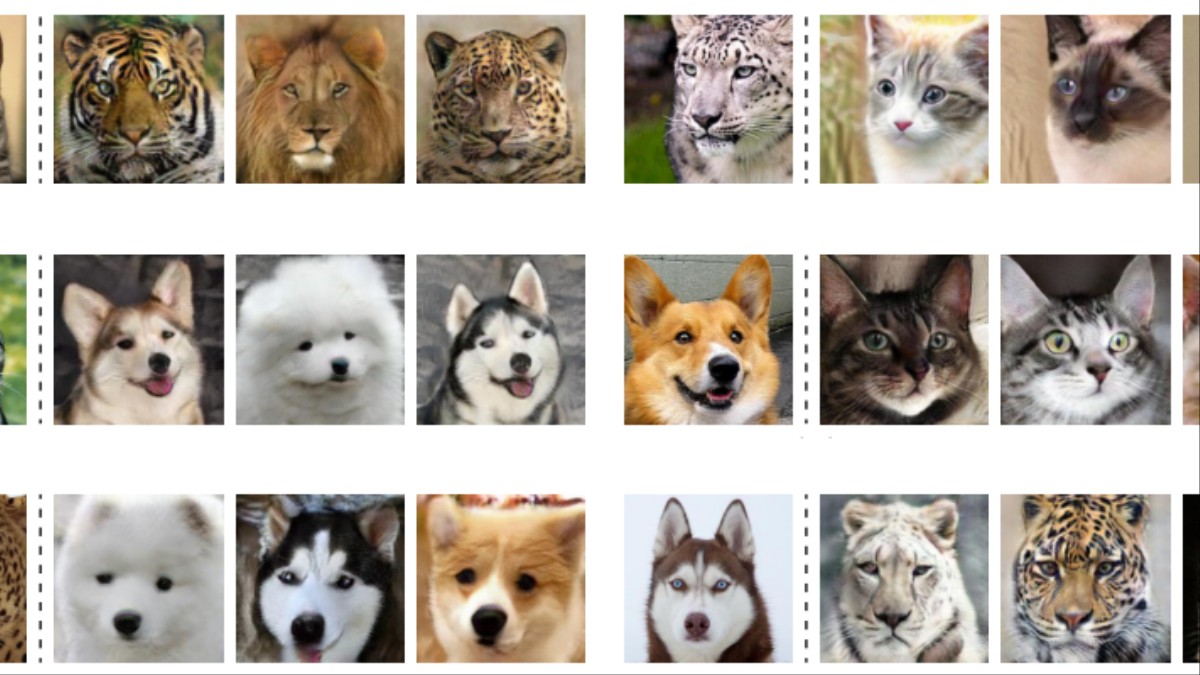

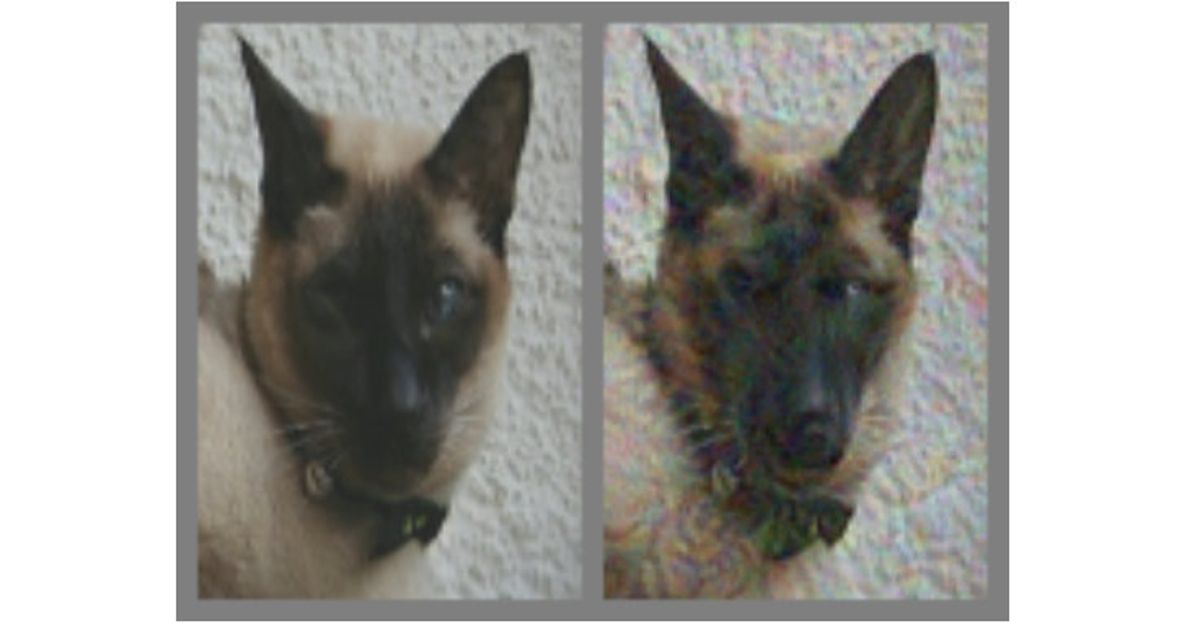

AQR’s Problem With Machine Learning: Cats Morph Into Dogs

Machine learning has done magic, such as beating human chess champions. But in finance, expectations for the technology may need to come down a notch or two, according to quantitative firm AQR. Machine learning changes the way problems are solved.

Read More

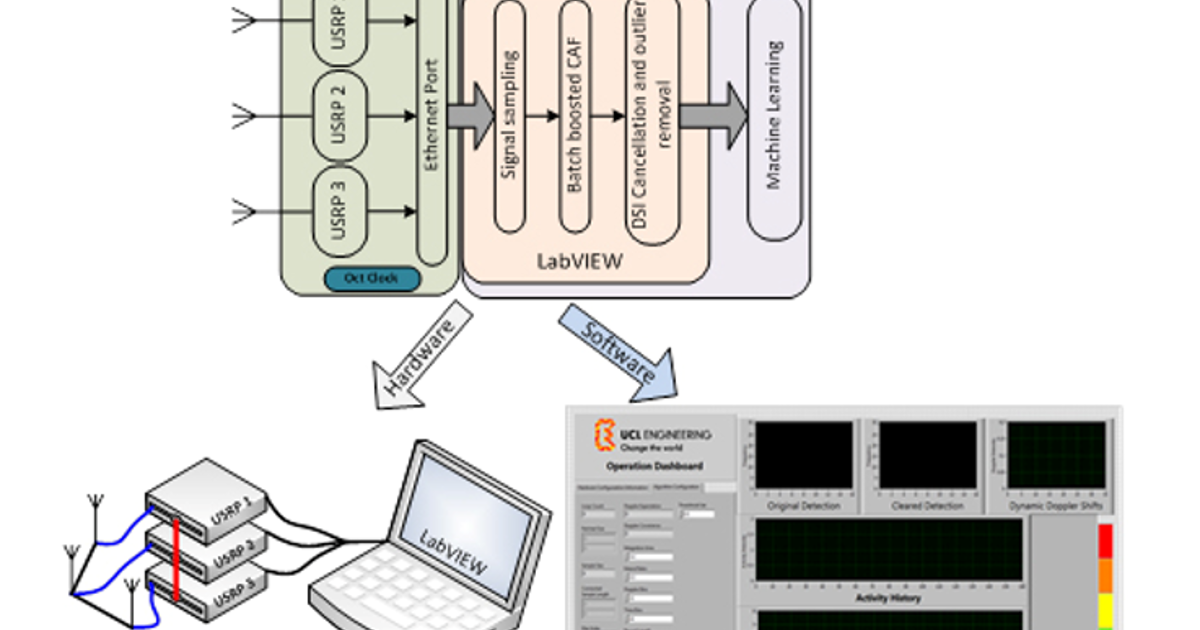

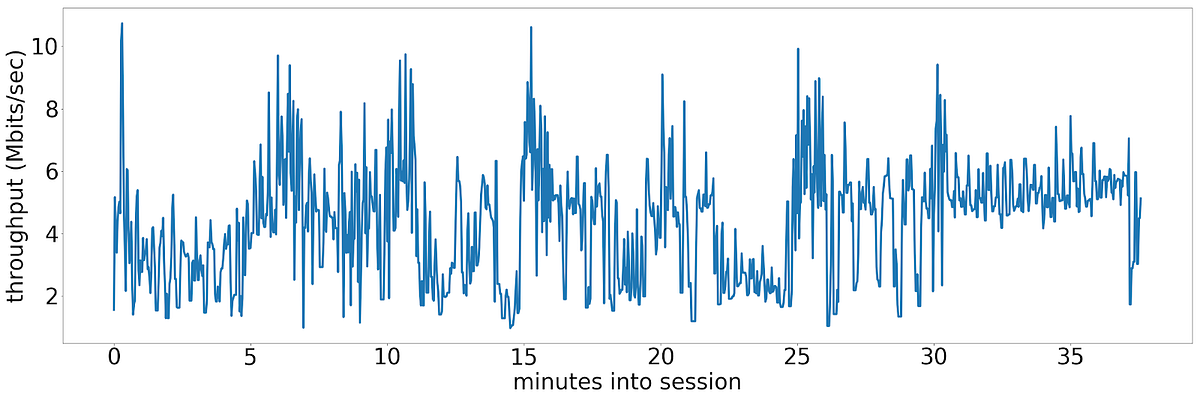

How AI is Starting to Influence Wireless Communications

Machine learning and deep learning technologies promise end-to-end optimization of wireless networks while commoditizing PHY and signal-processing designs and helping to overcome RF complexities.

What happens when artificial intelligence (AI) technology arrives on wireless channels? For a start, AI promises to address the design complexity of radio frequency (RF) systems by employing powerful machine learning algorithms and significantly improving RF parameters such as channel bandwidth, antenna sensitivity, and spectrum monitoring. So far, engineering efforts have focused on making individual components in wireless networks smarter via technologies like cognitive radio.

Read More

Releasing Pythia for vision and language multimodal AI models

Pythia is a deep learning framework that supports multitasking in the vision and language domain. Built on our open-source PyTorch framework, the modular, plug-and-play design enables researchers to quickly build, reproduce, and benchmark AI models. Pythia is designed for vision and language tasks, such as answering questions related to visual data and automatically generating image captions.

Read More

Detecting malaria with deep learning

Artificial intelligence (AI) and open source tools, technologies, and frameworks are a powerful combination for improving society. ‘Health is wealth’ is perhaps a cliché, yet it’s very accurate! In this article, we will examine how AI can be leveraged for detecting the deadly disease malaria with a low-cost, effective, and accurate open source deep learning solution.

Read More

An ML showdown in search of the best tool

Ever-burgeoning digital data, combined with impressive research, has led to rising interest in Machine Learning (ML), which has in turn powered a vibrant ecosystem of technologies, frameworks, and libraries in the space. Scikit-learn sees high adoption in the tech community. The most probable reason is its powerful Python interface, which allows models to be tweaked across multiple parameters.

Read More

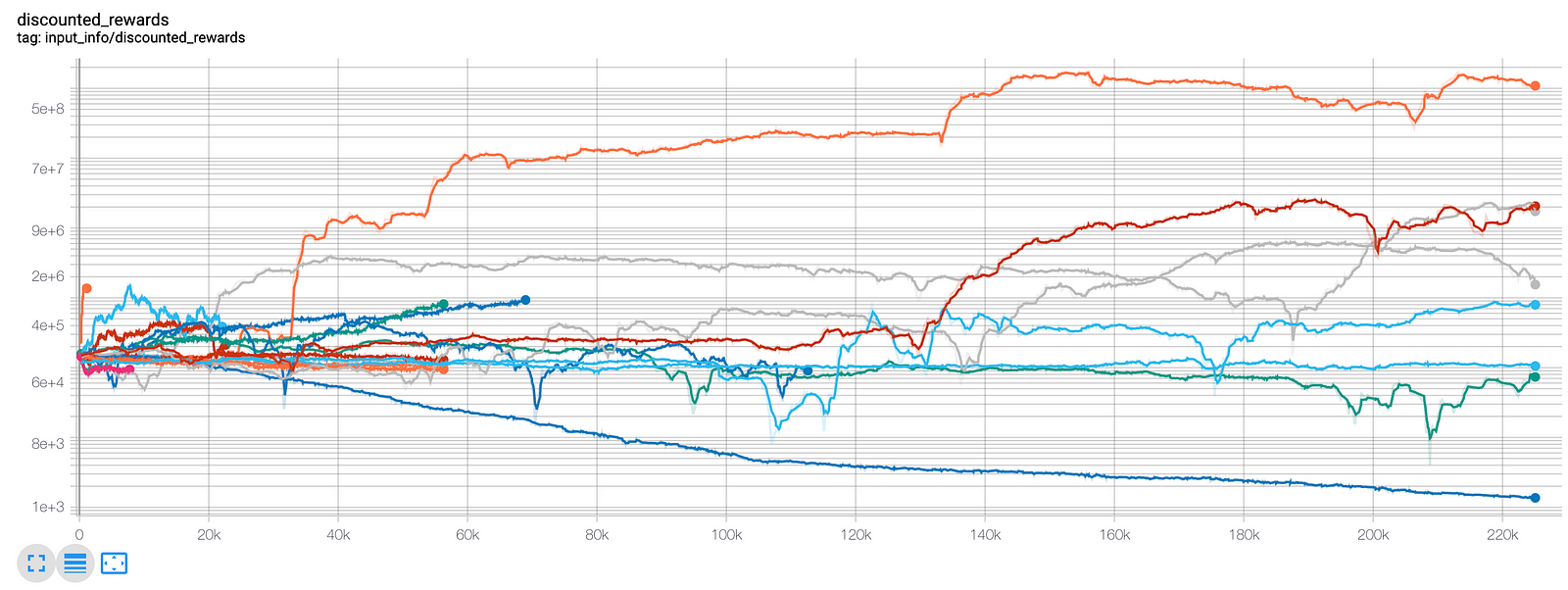

Creating Bitcoin trading bots that don’t lose money

In this article we are going to create deep reinforcement learning agents that learn to make money trading Bitcoin. In this tutorial we will be using OpenAI’s gym and the PPO agent from the stable-baselines library, a fork of OpenAI’s baselines library. If you are not already familiar with how to create a gym environment from scratch, or how to render simple visualizations of those environments, I have just written articles on both of those topics.

Read More

DeepMind and Google: the battle to control artificial intelligence

One afternoon in August 2010, in a conference hall perched on the edge of San Francisco Bay, a 34-year-old Londoner called Demis Hassabis took to the stage. Walking to the podium with the deliberate gait of a man trying to control his nerves, he pursed his lips into a brief smile and began to speak: “So today I’m going to be talking about different approaches to building…” He stalled, as though just realising that he was stating his momentous ambition out loud.

Read More

Hash Your Way To a Better Neural Network

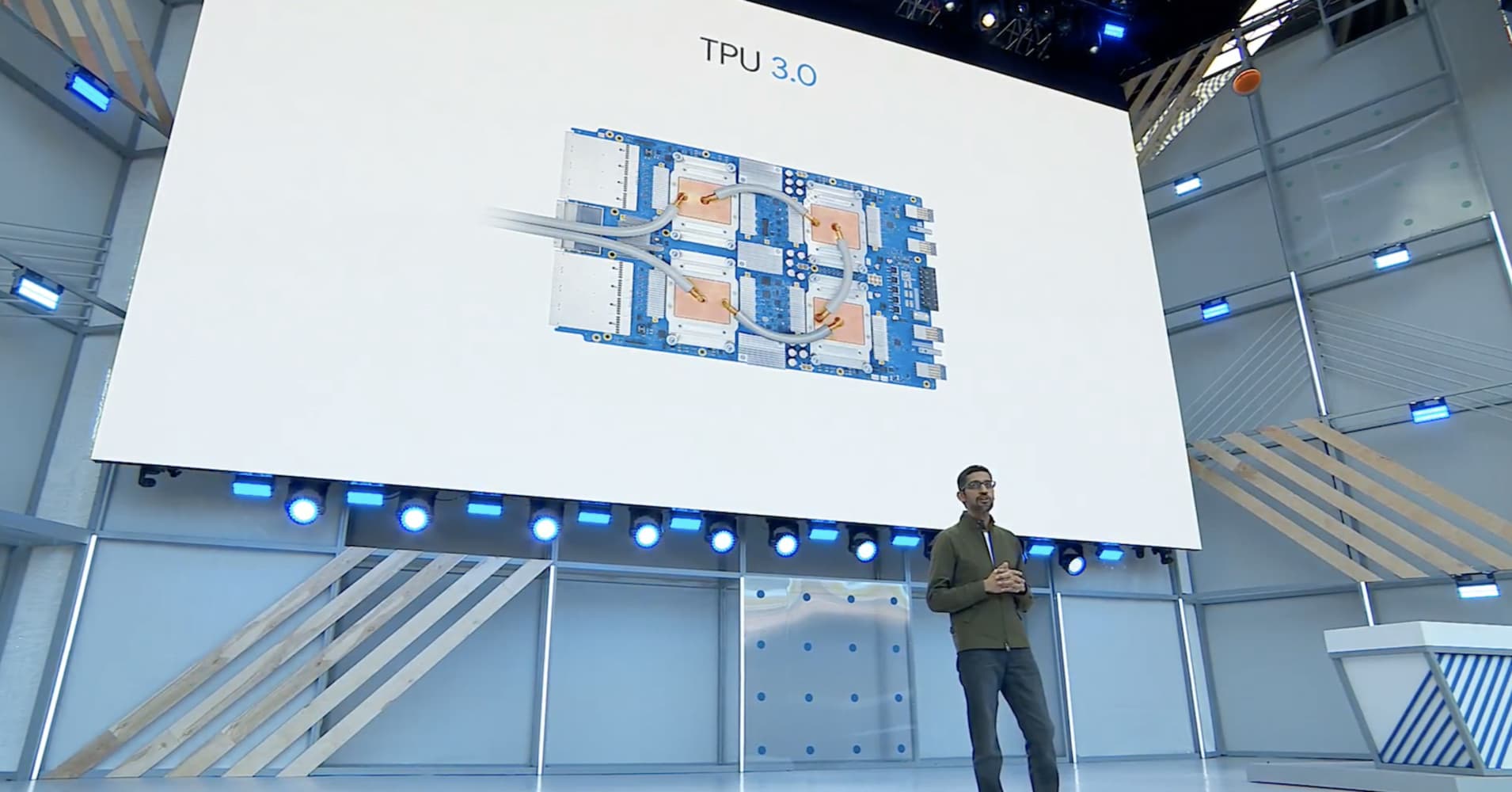

The computer industry has been busy in recent years trying to figure out how to speed up the calculations needed for artificial neural networks—either for their training or for what’s known as inference, when the network is performing its function. In particular, much effort has gone into designing special-purpose hardware to run such computations. Google, for example, developed its Tensor Processing Unit, or TPU, first described publicly in 2016.

Read More

12 open source tools for natural language processing

It would be easy to argue that Natural Language Toolkit (NLTK) is the most full-featured tool of the ones I surveyed. It implements pretty much any component of NLP you would need, like classification, tokenization, stemming, tagging, parsing, and semantic reasoning. And there’s often more than one implementation for each, so you can choose the exact algorithm or methodology you’d like to use.

Read More

Introducing Ludwig, a Code-Free Deep Learning Toolbox

Over the last decade, deep learning models have proven highly effective at performing a wide variety of machine learning tasks in vision, speech, and language. At Uber we are using these models for a variety of tasks, including customer support, object detection, improving maps, streamlining chat communications, forecasting, and preventing fraud. Many open source libraries, including TensorFlow, PyTorch, CNTK, MXNET, and Chainer, among others, have implemented the building blocks needed to build such models, allowing for faster and less error-prone development.

Read More

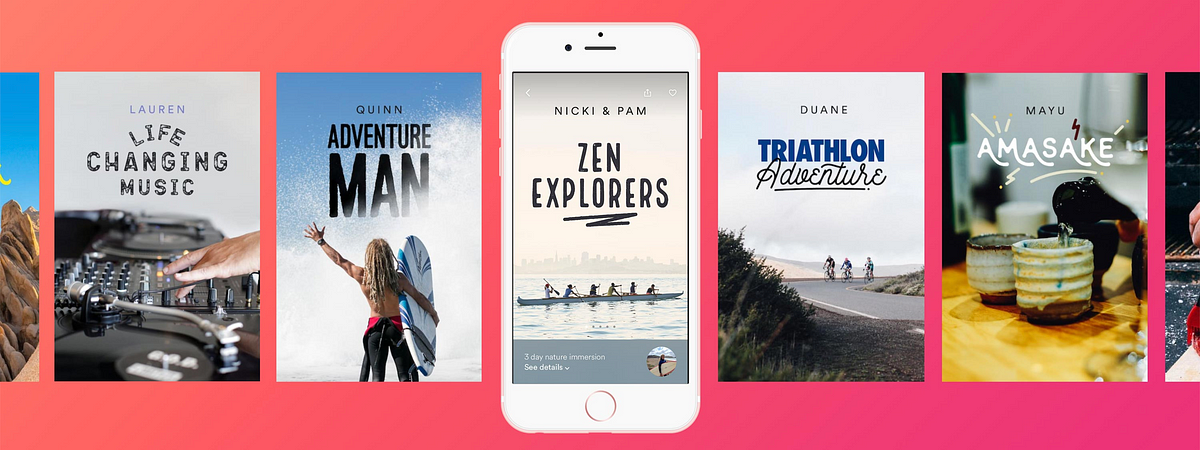

Machine Learning-Powered Search Ranking of Airbnb Experiences

How we built and iterated on a machine learning Search Ranking platform for a new two-sided marketplace, and how we helped it grow. Airbnb Experiences are handcrafted activities designed and led by expert hosts that offer a unique taste of the local scene and culture. Each experience is vetted for quality by a team of editors before it makes its way onto the platform.

Read More

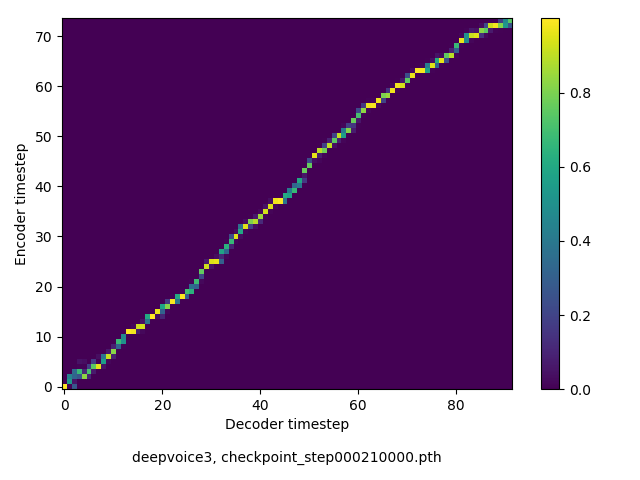

Teaching AI to learn speech the way children do

The Facebook AI Research (FAIR) group and Paris Sciences & Lettres University, with additional sponsorship from Microsoft Research, have teamed up to challenge other researchers to teach AI systems to learn speech in a way that more closely resembles how young children learn. The ZeroSpeech 2019 challenge (which builds on previous efforts in 2015 and 2017) asks participants to build a speech synthesizer using only audio input, without any text or phonetic labels. The challenge’s central task is to build an AI system that can discover, in an unknown language, the machine equivalent of text or phonetic labels and use them to re-synthesize a sentence in a given voice.

Read More

AI Blueprints: Implementing content-based recommendations using Python

In this article, we’ll have a look at how you can implement a content-based recommendation system using Python and the scikit-learn library. But before diving straight into this, it’s important to have some prerequisite knowledge of the different ways by which recommendation systems can recommend an item to users. Content-based: A content-based recommendation finds similar items to a given item by examining the item’s properties, such as its title or description, category, or dependencies on other items (for example, electronic toys require batteries).
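
The content-based idea can be sketched with TF-IDF vectors and cosine similarity. To stay self-contained, this uses the standard library instead of scikit-learn's `TfidfVectorizer`, with the smoothed IDF formula that `TfidfVectorizer` uses by default, ln((1 + n) / (1 + df)) + 1; the item descriptions and function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List

SparseVec = Dict[str, float]


def tfidf_vectors(docs: Dict[str, str]) -> Dict[str, SparseVec]:
    """Raw term counts weighted by smoothed IDF: ln((1 + n) / (1 + df)) + 1."""
    tokenized = {item: text.lower().split() for item, text in docs.items()}
    n = len(docs)
    df = Counter(term for tokens in tokenized.values() for term in set(tokens))
    return {item: {t: c * (math.log((1 + n) / (1 + df[t])) + 1)
                   for t, c in Counter(tokens).items()}
            for item, tokens in tokenized.items()}


def cosine(a: SparseVec, b: SparseVec) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(item: str, docs: Dict[str, str], k: int = 2) -> List[str]:
    """Items whose descriptions are most similar to `item`'s description."""
    vectors = tfidf_vectors(docs)
    scored = [(cosine(vectors[item], vec), other)
              for other, vec in vectors.items() if other != item]
    return [other for _, other in sorted(scored, reverse=True)[:k]]


# Hypothetical catalogue descriptions.
catalogue = {
    "toy_car": "electronic toy car needs batteries",
    "toy_robot": "electronic toy robot needs batteries",
    "novel": "mystery novel in paperback",
}
print(recommend("toy_car", catalogue, k=1))
```

Because only item properties are compared, this approach can recommend brand-new items that no user has interacted with yet.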

Read More

AI year in review

At Facebook, we think that artificial intelligence that learns in new, more efficient ways – much like humans do – can play an important role in bringing people together. That core belief helps drive our AI strategy, focusing our investments in long-term research related to systems that learn using real-world data, inspiring our engineers to share cutting-edge tools and platforms with the wider AI community, and ultimately demonstrating new ways to use the technology to benefit the world. In 2018, we made important progress in all these areas.

Read More

What Kagglers are using for Text Classification

With image classification more or less solved by deep learning, text classification is the next developing theme in deep learning. For those who don’t know, text classification is a common task in natural language processing that assigns a sequence of text of arbitrary length to a category. How could you use that?
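
For a sense of the task itself, here is a classical baseline rather than the deep models Kagglers favor: a multinomial naive Bayes classifier with add-one (Laplace) smoothing and whitespace tokenization. The class name and the training sentences are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


class NaiveBayesText:
    """Multinomial naive Bayes with add-one smoothing over whitespace tokens."""

    def fit(self, examples: List[Tuple[str, str]]) -> "NaiveBayesText":
        self.class_counts = Counter(label for _, label in examples)
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        for text, label in examples:
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total = sum(self.class_counts.values())
        best_label, best_score = "", -math.inf
        for label, count in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(count / total)  # log prior
            for word in text.lower().split():
                if word in self.vocab:  # words never seen in training are skipped
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


train = [  # hypothetical labelled snippets
    ("great movie loved it", "pos"),
    ("wonderful acting great plot", "pos"),
    ("terrible movie hated it", "neg"),
    ("awful plot boring acting", "neg"),
]
model = NaiveBayesText().fit(train)
print(model.predict("loved the great acting"))
```

A baseline like this is quick to train and gives a reference score that any deep model should beat before its extra cost is justified.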

Read More

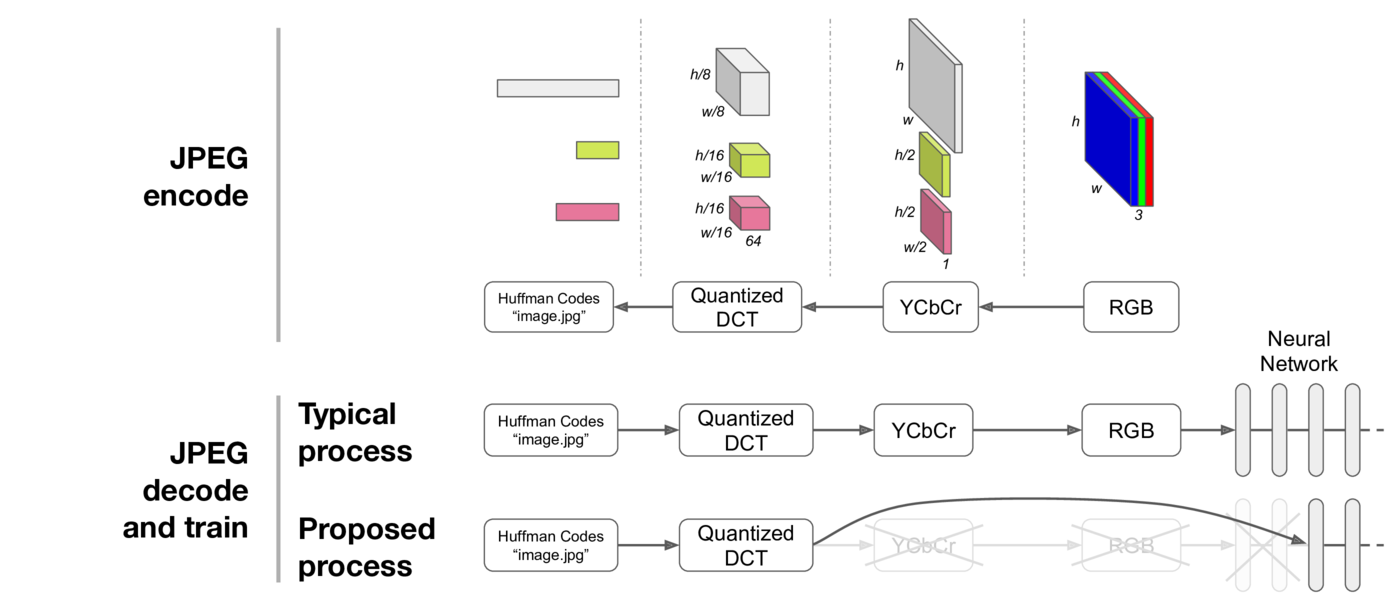

Faster Neural Networks Straight from JPEG

Uber AI Labs introduces a method for making neural networks that process images faster and more accurately by leveraging JPEG representations. Neural networks, an important tool for processing data in a variety of industries, grew from an academic research area to a cornerstone of industry over the last few years. Convolutional Neural Networks (CNNs) have been particularly useful for extracting information from images, whether classifying them, recognizing faces, or evaluating board positions in Go.
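
The JPEG representations in question are discrete cosine transform (DCT) coefficients of 8×8 pixel blocks. The sketch below computes the orthonormal 2-D DCT-II used by baseline JPEG for one block. In practice, a decoder reads the (quantized) coefficients directly from the file rather than recomputing them, and JPEG also shifts pixel values by 128 before the transform; both details are omitted here.

```python
import math
from typing import List

Block = List[List[float]]


def dct2_8x8(block: Block) -> Block:
    """Orthonormal 2-D DCT-II of an 8x8 block, as used by baseline JPEG."""
    def c(k: int) -> float:
        return 1 / math.sqrt(2) if k == 0 else 1.0

    coeffs = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            s = sum(block[x][y]
                    * math.cos((2 * x + 1) * u * math.pi / 16)
                    * math.cos((2 * y + 1) * v * math.pi / 16)
                    for x in range(8) for y in range(8))
            coeffs[u][v] = 0.25 * c(u) * c(v) * s
    return coeffs


flat = [[1.0] * 8 for _ in range(8)]
print(round(dct2_8x8(flat)[0][0], 6))  # a flat block has only a DC component
```

Smooth image regions concentrate their energy in a few low-frequency coefficients, which is part of why feeding these coefficients to a network can skip work that early convolutional layers would otherwise do.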

Read More

Using AI and satellite imagery for disaster insights

A framework for using convolutional neural networks (CNNs) on satellite imagery to identify the areas most severely affected by a disaster. This new method has the potential to produce more accurate information in far less time than current manual methods. Ultimately, the goal of this research is to allow rescue workers to quickly identify where aid is needed most, without relying on manually annotated, disaster-specific data sets.

Read More

Matplotlib—Making data visualization interesting

Data visualization is a key step in understanding a dataset and drawing inferences from it. While one can always inspect the data closely, row by row and cell by cell, this is often tedious and does not highlight the big picture. Visuals, on the other hand, present data in a form that is easy to understand at a glance and keep the audience engaged.

Read More

Easy-To-Read Summary of Important AI Research Papers of 2018

Trying to keep up with AI research papers can feel like an exercise in futility given how quickly the industry moves. If you’re buried in papers to read that you haven’t quite gotten around to, you’re in luck. To help you catch up, we’ve summarized 10 important AI research papers from 2018 to give you a broad overview of machine learning advancements this year.

Read More

Amazon makes its machine learning courses available for free

Amazon announced today that it’s making its range of machine learning courses available to all developers signed up to its AWS platform for free. This program was previously available only to Amazon employees, but anyone can now take advantage of it at no charge by signing up to Amazon Web Services’ free plan. It includes 30 courses in total, with over 45 hours of course material, videos, and lab tests.

Read More

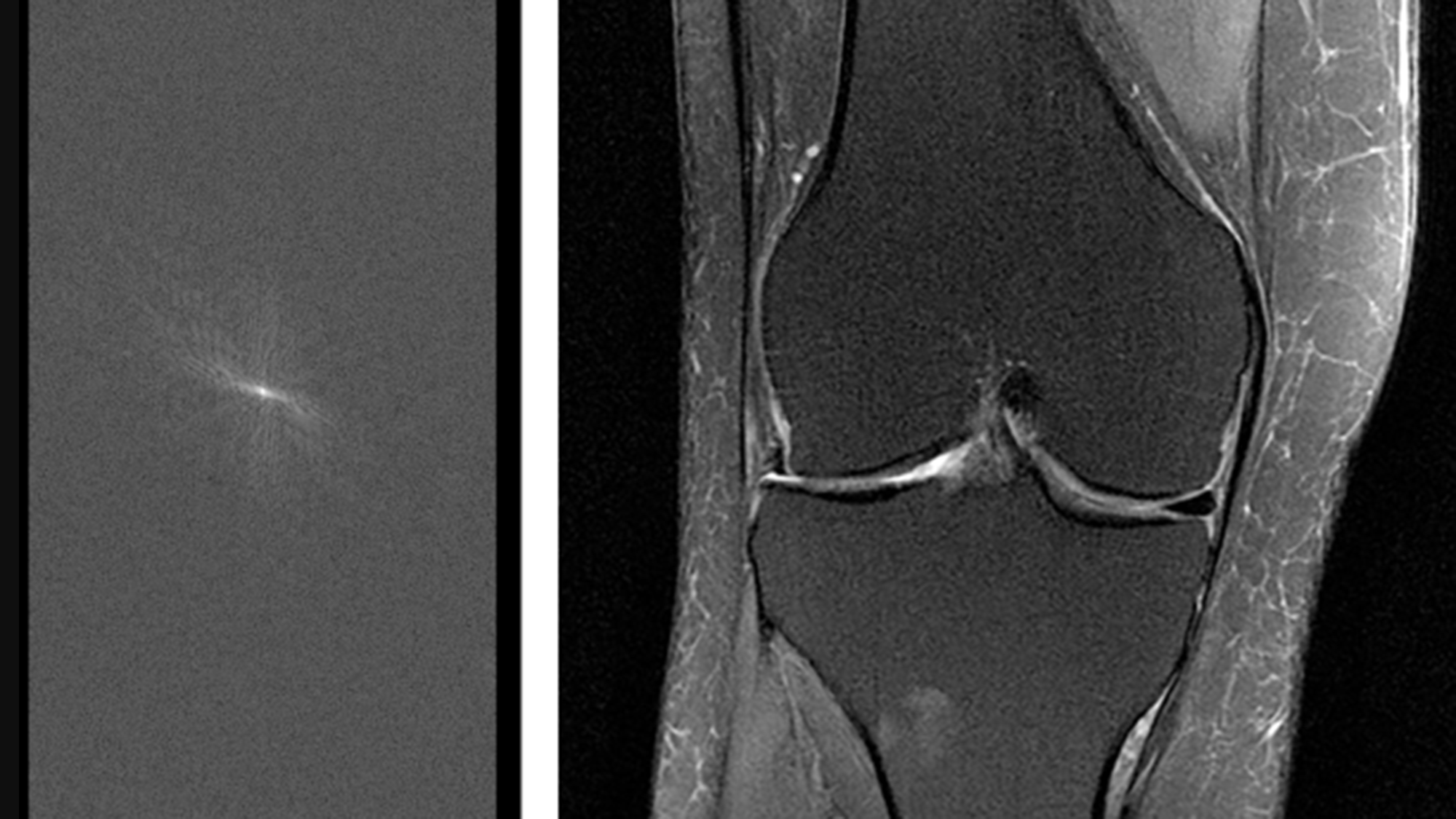

FastMRI open source tools from Facebook and NYU

Facebook AI Research (FAIR) and NYU School of Medicine’s Center for Advanced Imaging Innovation and Research (CAI²R) are sharing new open source tools and data as part of fastMRI, a joint research project to spur development of AI systems to speed MRI scans by up to 10x. Today’s releases include new AI models and baselines for this task (as described in our paper here). The release also includes the first large-scale MRI data set of its kind, which can serve as a benchmark for future research.

Read More

Humanizing Customer Complaints using NLP Algorithms

Last Christmas, I went through the most frustrating experience as a consumer. I was doing some last-minute holiday shopping and, after standing in a long line, I finally reached the blessed register only to find out that my debit card was blocked. I could sense the old lady at the register judging me with her narrowed eyes.

Read More

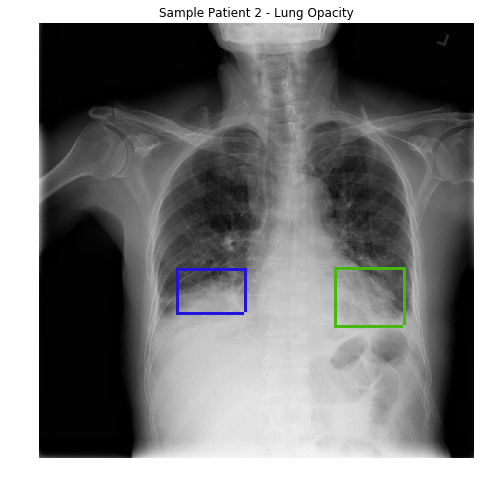

Radiology and Deep Learning

Detecting pneumonia opacities from chest X-ray images using deep learning. One day back in August, I was catching up with my best friend from high school, who is now a radiology resident. One thing led to another, and we started talking about our interests in artificial intelligence and machine learning and their possible applications in radiology.

Read More

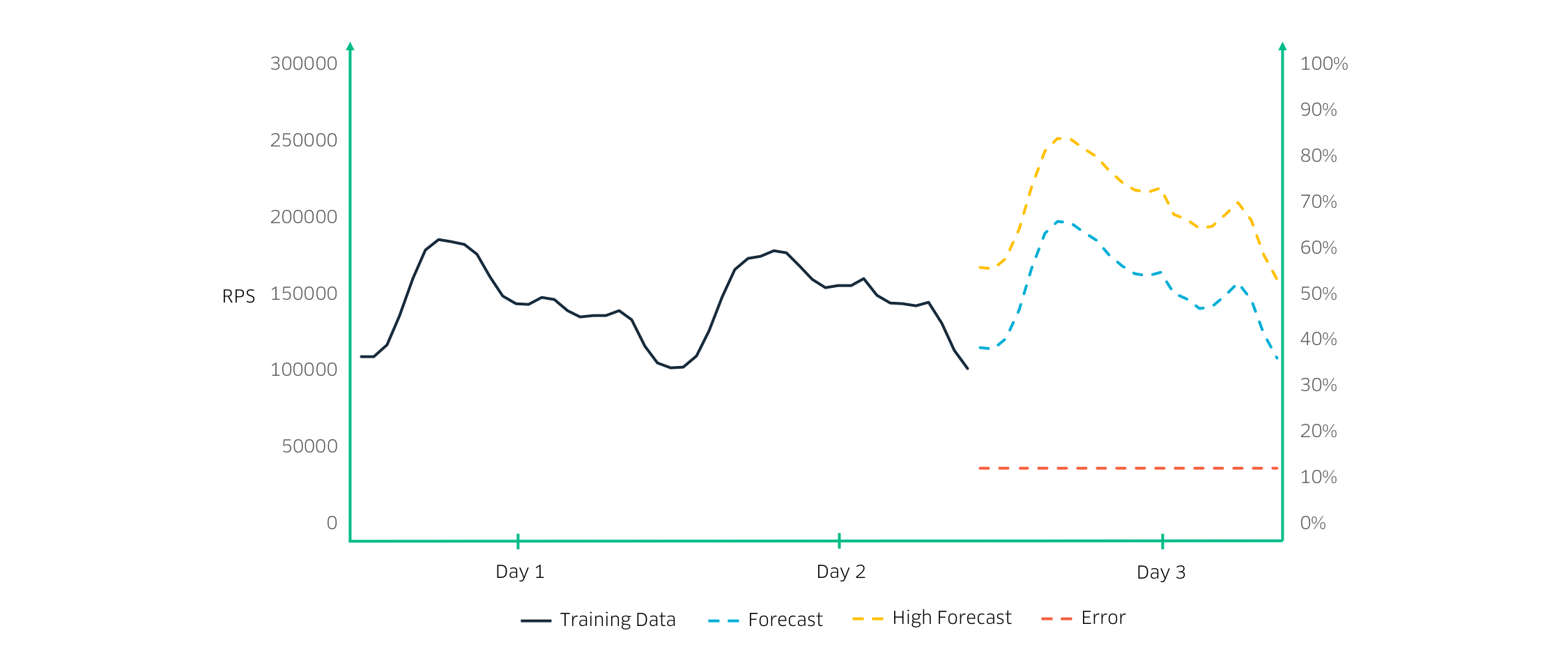

Predictive Scaling for EC2, Powered by Machine Learning

When I look back on the history of AWS and think about the launches that truly signify the fundamentally dynamic, on-demand nature of the cloud, two stand out in my memory: the launch of Amazon EC2 in 2006 and the concurrent launch of CloudWatch Metrics, Auto Scaling, and Elastic Load Balancing in 2009. The first launch provided access to compute power; the second made it possible to use that access to rapidly respond to changes in demand. We have added a multitude of features to all of these services since then, but as far as I am concerned they are still central and fundamental!

Read More

The dark side of YouTube

The YouTube algorithm that I helped build in 2011 still recommends the flat earth theory by the hundreds of millions. This investigation by @RawStory shows some of the real-life consequences of this badly designed AI.

Read More

Decision Tree in Machine Learning

A decision tree is a flowchart-like structure in which each internal node represents a test on a feature (e.g. whether a coin flip comes up heads or tails), each leaf node represents a class label (the decision taken after computing all features), and branches represent conjunctions of features that lead to those class labels. The paths from root to leaf represent classification rules. The diagram below illustrates the basic flow of a decision tree for decision making with labels (Rain(Yes), No Rain(No)).
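
To make that structure concrete, here is a minimal Python sketch of a hand-built tree for the Rain(Yes) / No Rain(No) example. The features (`cloudy`, `humidity`) and the threshold of 70 are hypothetical, chosen only for illustration, and the tree is written by hand rather than learned from data. It shows the three pieces described above: internal nodes that test a feature, leaves that hold a class label, and root-to-leaf paths that read as classification rules.

```python
from typing import Dict, List, Optional, Union

# An internal node is a dict holding a feature test; a leaf is a class-label string.
# The features and thresholds below are hypothetical, for illustration only.
Node = Union[str, Dict[str, object]]

TREE: Node = {
    "feature": "cloudy",        # boolean test: is the sky cloudy?
    "threshold": None,          # None marks a boolean test
    "yes": {
        "feature": "humidity",  # numeric test: humidity above 70 (%)?
        "threshold": 70,
        "yes": "Rain(Yes)",
        "no": "No Rain(No)",
    },
    "no": "No Rain(No)",
}


def describe(node: Dict[str, object]) -> str:
    """Human-readable form of the test stored at an internal node."""
    if node["threshold"] is None:
        return str(node["feature"])
    return f"{node['feature']} > {node['threshold']}"


def passes(node: Dict[str, object], sample: Dict[str, float]) -> bool:
    """Evaluate the node's test on one sample."""
    value = sample[node["feature"]]
    if node["threshold"] is None:
        return bool(value)
    return value > node["threshold"]


def predict(node: Node, sample: Dict[str, float]) -> str:
    """Follow branches from the root until a leaf (class label) is reached."""
    while isinstance(node, dict):
        node = node["yes"] if passes(node, sample) else node["no"]
    return node


def rules(node: Node, path: Optional[List[str]] = None) -> List[str]:
    """List every root-to-leaf path as an IF ... THEN ... classification rule."""
    path = path or []
    if isinstance(node, str):
        return ["IF " + " AND ".join(path) + " THEN " + node]
    test = describe(node)
    return rules(node["yes"], path + [test]) + rules(node["no"], path + ["NOT " + test])


print(predict(TREE, {"cloudy": 1, "humidity": 85}))  # Rain(Yes)
for rule in rules(TREE):
    print(rule)
```

The three printed rules correspond one-to-one with the three leaves, which is why a shallow tree is easy to read back as a rule list.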

Read More

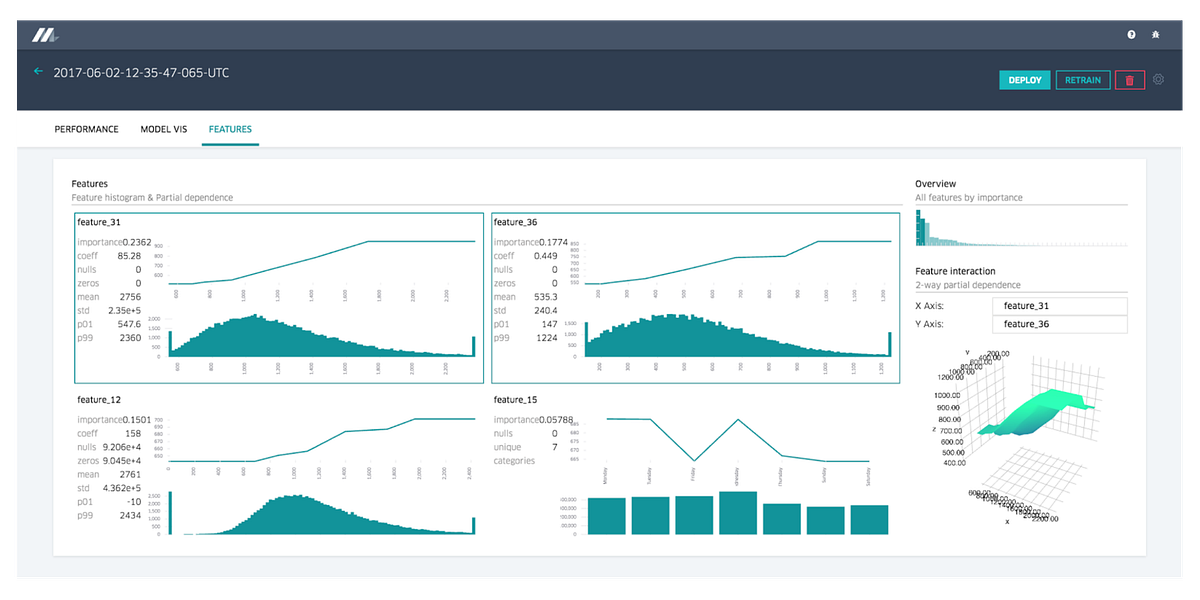

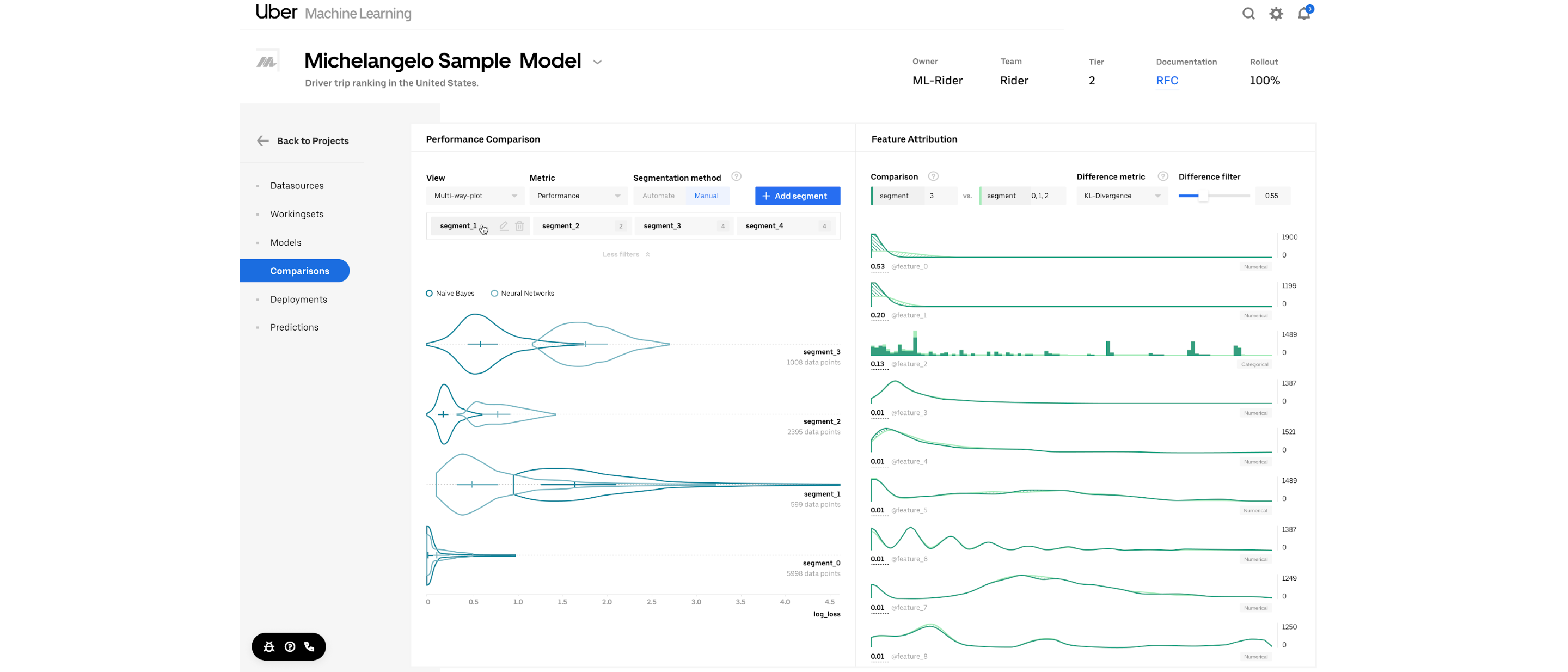

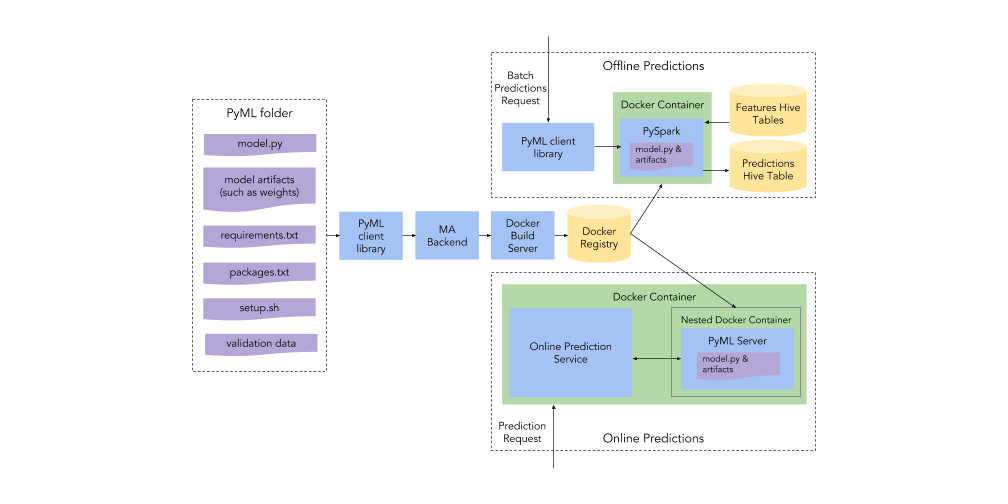

Five Lessons From the First Three Years of Michelangelo

Uber has been one of the most active contributors to open source machine learning technologies in the last few years. While companies like Google or Facebook have focused their contributions on new deep learning stacks like TensorFlow, Caffe2 or PyTorch, the Uber engineering team has really focused on tools and best practices for building machine learning at scale in the real world. Technologies such as Michelangelo, Horovod, PyML and Pyro are some examples of Uber’s contributions to the machine learning ecosystem.

Read More

Real Time Facial Expression Recognition

Computer-animated agents and robots bring a new dimension to human-computer interaction, which makes it vital to understand how computers can affect our social life in day-to-day activities. Face-to-face communication is a real-time process operating at a time scale on the order of milliseconds. The level of uncertainty at this time scale is considerable, making it necessary for humans and machines to rely on sensory-rich perceptual primitives rather than slow symbolic inference processes.

Read More

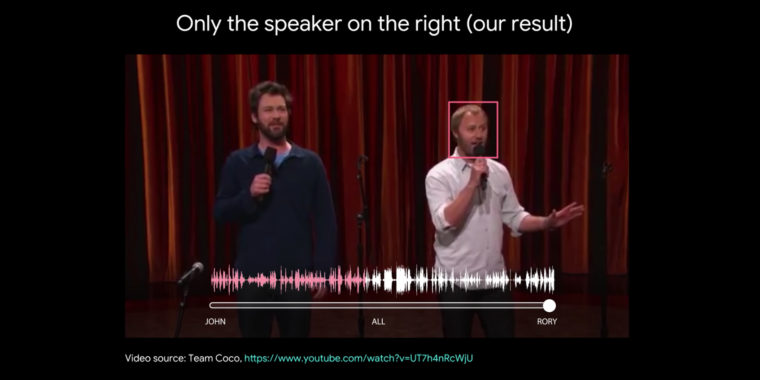

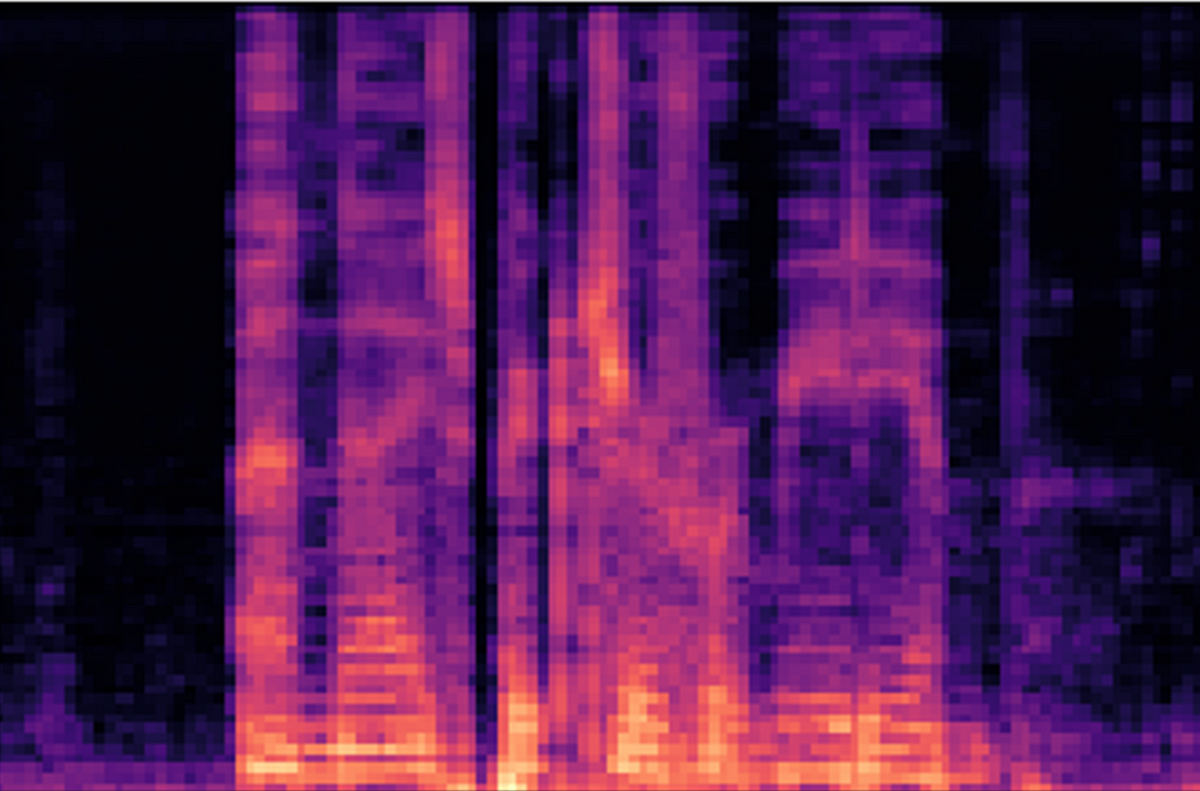

Accurate Online Speaker Diarization with Supervised Learning

Speaker diarization, the process of partitioning an audio stream with multiple people into homogeneous segments associated with each individual, is an important part of speech recognition systems. By solving the problem of “who spoke when”, speaker diarization has applications in many important scenarios, such as understanding medical conversations, video captioning and more. However, training these systems with supervised learning methods is challenging — unlike standard supervised classification tasks, a robust diarization model requires the ability to associate new individuals with distinct speech segments that weren’t involved in training.

Read More

A Google Brain engineer’s guide to entering AI

Note that this guide was written in November 2018 to complement an in-depth conversation on the 80,000 Hours Podcast with Catherine Olsson and Daniel Ziegler on how to transition from computer science and software engineering in general into ML engineering, with a focus on alignment and safety. If you like this guide, we’d strongly encourage you to check out the podcast episode where we discuss some of the instructions here, and other relevant advice. Technical AI safety is a multifaceted area of research, with many sub-questions in areas such as reward learning, robustness, and interpretability.

Read More

Learning Concepts with Energy Functions

We’ve developed an energy-based model that can quickly learn to identify and generate instances of concepts, such as near, above, between, closest, and furthest, expressed as sets of 2d points. Our model learns these concepts after only five demonstrations.
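
A toy version of the idea, assuming a hand-written energy rather than a learned one: below, the concept "between" two anchor points is an energy that is zero on the segment joining them and grows with the squared distance away from it. Energy-based models of this kind typically generate an instance by descending the energy from a random starting point (often with added noise, as in Langevin dynamics). The function names and parameters are hypothetical, and the article's model learns its energy functions from demonstrations instead.

```python
import random
from typing import Tuple

Point = Tuple[float, float]


def closest_on_segment(x: Point, a: Point, b: Point) -> Point:
    """Project x onto segment ab, clamping to the endpoints."""
    abx, aby = b[0] - a[0], b[1] - a[1]
    t = ((x[0] - a[0]) * abx + (x[1] - a[1]) * aby) / (abx ** 2 + aby ** 2)
    t = max(0.0, min(1.0, t))
    return (a[0] + t * abx, a[1] + t * aby)


def energy_between(x: Point, a: Point, b: Point) -> float:
    """Hand-written stand-in for a learned energy: zero when x lies between a and b."""
    p = closest_on_segment(x, a, b)
    return (x[0] - p[0]) ** 2 + (x[1] - p[1]) ** 2


def generate_between(a: Point, b: Point, steps: int = 200, lr: float = 0.1,
                     noise: float = 0.0, seed: int = 0) -> Point:
    """Produce an instance of the concept by descending the energy from a random start.

    Setting noise > 0 adds Gaussian jitter to each step, in the spirit of Langevin dynamics.
    """
    rng = random.Random(seed)
    x = (rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0))
    for _ in range(steps):
        p = closest_on_segment(x, a, b)
        # The gradient of the squared distance to the segment is 2 * (x - p).
        gx, gy = 2 * (x[0] - p[0]), 2 * (x[1] - p[1])
        x = (x[0] - lr * gx + rng.gauss(0.0, noise),
             x[1] - lr * gy + rng.gauss(0.0, noise))
    return x


point = generate_between((0.0, 0.0), (4.0, 0.0))
print(point, energy_between(point, (0.0, 0.0), (4.0, 0.0)))
```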

Read More

20 Best YouTube channels for AI and machine learning

What are the most interesting and informative YouTube channels about artificial intelligence (AI) and machine learning? Subscribe to these 20 high-quality channels today to stay up to date with the latest AI and machine learning breakthroughs.

Read More

Why Chinese Artificial Intelligence Will Run The World

With Chinese tech giants Baidu, Alibaba, and Tencent focused on developing sophisticated AI-driven systems in the coming decade, the rest of the world can only watch while China builds the computer systems that will run our world in the decades to come. If you’ve been paying attention in the past year, it seems that all anyone can talk about is the coming artificial intelligence boom on the horizon. Whether it’s Amazon, Google, or Facebook, everyone seems to be getting in on the AI game as fast as they can.

Read More

EPO Issues First Guidelines on AI Patents

The European Patent Office (EPO) has issued official guidelines on the patenting of artificial intelligence and machine learning technologies. The guidelines became valid on November 1st, 2018. When determining whether the claimed subject-matter has technical character, the guidelines note that expressions such as “support vector machine,” “reasoning engine” or “neural network” may not qualify, as these are regarded as terms for mathematical methods which do not have a unique technical character of their own.

Read More

TensorFlow 2.0: models migration and new design

TensorFlow 2.0 will be a major milestone for the most popular machine learning framework: lots of changes are coming, all with the aim of making ML accessible to everyone. These changes, however, require existing users to completely re-learn how to use the framework. This article describes all the (known) differences between the 1.x and 2.x versions, focusing on the change of mindset required and highlighting the pros and cons of the new implementations. It can also be a good starting point for novices: start thinking the TensorFlow 2.0 way right now, so you don’t have to re-learn a new framework (at least until TensorFlow 3.0 is released).

Read More

Horizon: An open-source reinforcement learning platform

Horizon is the first open source end-to-end platform that uses applied reinforcement learning (RL) to optimize systems in large-scale production environments. The workflows and algorithms included in this release were built on open frameworks — PyTorch 1.0, Caffe2, and Spark — making Horizon accessible to anyone using RL at scale. We’ve put Horizon to work internally over the past year in a wide range of applications, including helping to personalize M suggestions, delivering more meaningful notifications, and optimizing streaming video quality.

Read More

New Theory of Intelligence May Disrupt AI and Neuroscience

Recent advances in artificial intelligence, namely in deep learning, have borrowed concepts from the human brain. The architecture of most deep learning models is based on layers of processing: an artificial neural network inspired by the neurons of the biological brain. Yet neuroscientists do not agree on exactly what intelligence is and how it is formed in the human brain; it’s a phenomenon that remains unexplained.

Read More

What’s the Best Deep Learning Framework?

Deep learning models are large and complex, so instead of writing out every function from the ground up, programmers rely on frameworks and software libraries to build neural networks efficiently. The top deep learning frameworks provide highly optimized, GPU-enabled code that is specific to deep neural network computations.

Read More

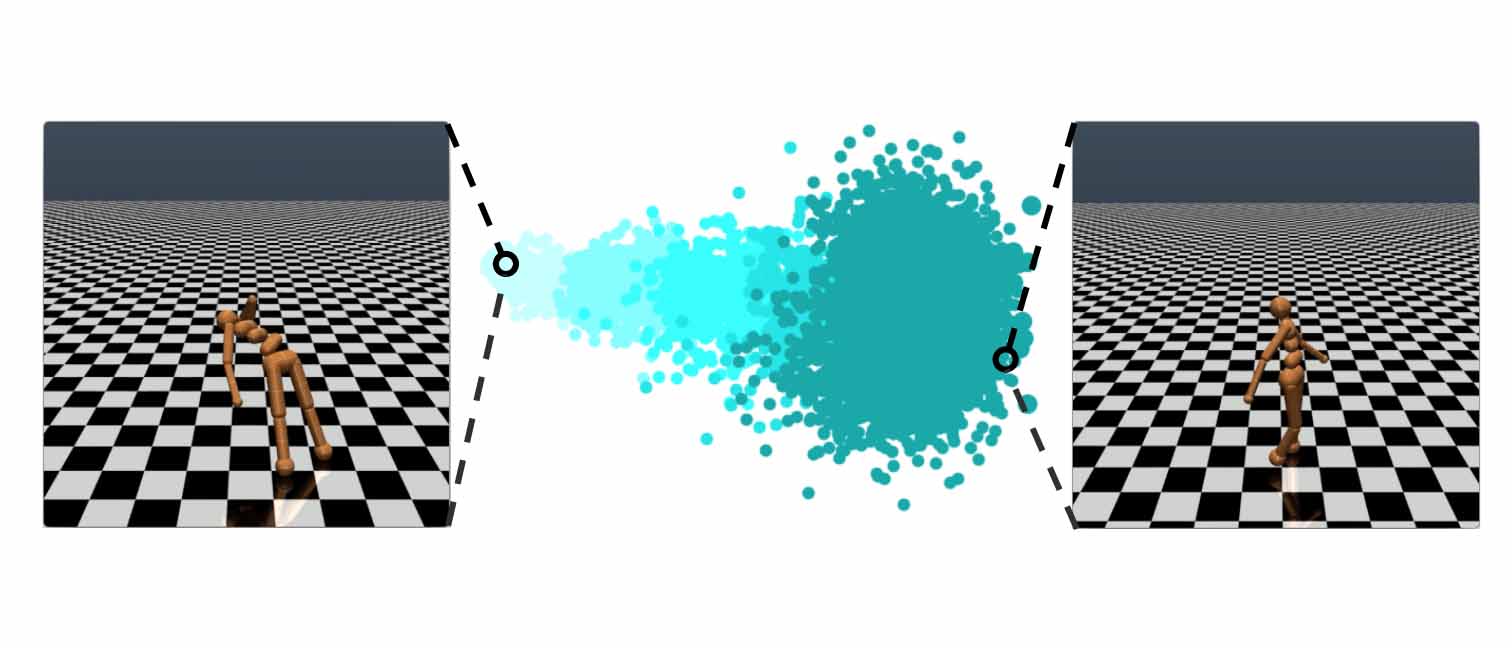

Curiosity and Procrastination in Reinforcement Learning

Episodic Curiosity through Reachability: Observations are added to memory, reward is computed based on how far the current observation is from the most similar observation in memory. The agent receives more reward for seeing observations which are not yet represented in memory.
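
A minimal sketch of the memory-based bonus, assuming observations are already embedded as numeric vectors and substituting plain Euclidean distance for the learned reachability network the method actually uses. The class name, the `novelty_threshold` parameter, and the rule "store an observation only if it is at least the threshold away from everything in memory" are illustrative choices, not the paper's exact procedure.

```python
import math
from typing import List, Sequence, Tuple


class EpisodicCuriosity:
    """Novelty bonus from an episodic memory of observation embeddings.

    Euclidean distance is a simplifying stand-in for the learned reachability
    network; the names and the storage rule are illustrative assumptions.
    """

    def __init__(self, novelty_threshold: float, bonus_scale: float = 1.0) -> None:
        self.memory: List[Tuple[float, ...]] = []
        self.novelty_threshold = novelty_threshold
        self.bonus_scale = bonus_scale

    def reward(self, obs: Sequence[float]) -> float:
        key = tuple(obs)
        # Distance to the most similar (closest) observation already stored.
        # With an empty memory, the first observation counts as exactly novel enough.
        nearest = min((math.dist(key, m) for m in self.memory),
                      default=self.novelty_threshold)
        if nearest >= self.novelty_threshold:
            self.memory.append(key)  # not yet represented in memory: remember it
        # Farther from everything remembered means a larger bonus.
        return self.bonus_scale * nearest


curiosity = EpisodicCuriosity(novelty_threshold=1.0)
print(curiosity.reward((0.0, 0.0)))  # first sighting: bonus 1.0, stored
print(curiosity.reward((0.0, 0.0)))  # already in memory: bonus 0.0
print(curiosity.reward((3.0, 4.0)))  # 5 units from memory: bonus 5.0, stored
```

In a full agent this bonus would be added to the environment's own reward at each step, and the memory would be cleared at the start of every episode.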

Read More

California Law Bans Bots From Pretending to Be Human

Are you talking to a real person online or a bot? In California, bots will need to identify themselves thanks to a new bill just signed into law by Gov. Jerry Brown. The measure bans automated accounts from pretending to be real people in order to ‘incentivize a purchase or sale of goods or services in a commercial transaction or to influence a vote in an election,’ effective July 1, 2019.

Read More

The What-If Tool: Code-Free Probing of Machine Learning Models

How would changes to a datapoint affect my model’s prediction? Does it perform differently for various groups–for example, historically marginalized people? How diverse is the dataset I am testing my model on?

Read More

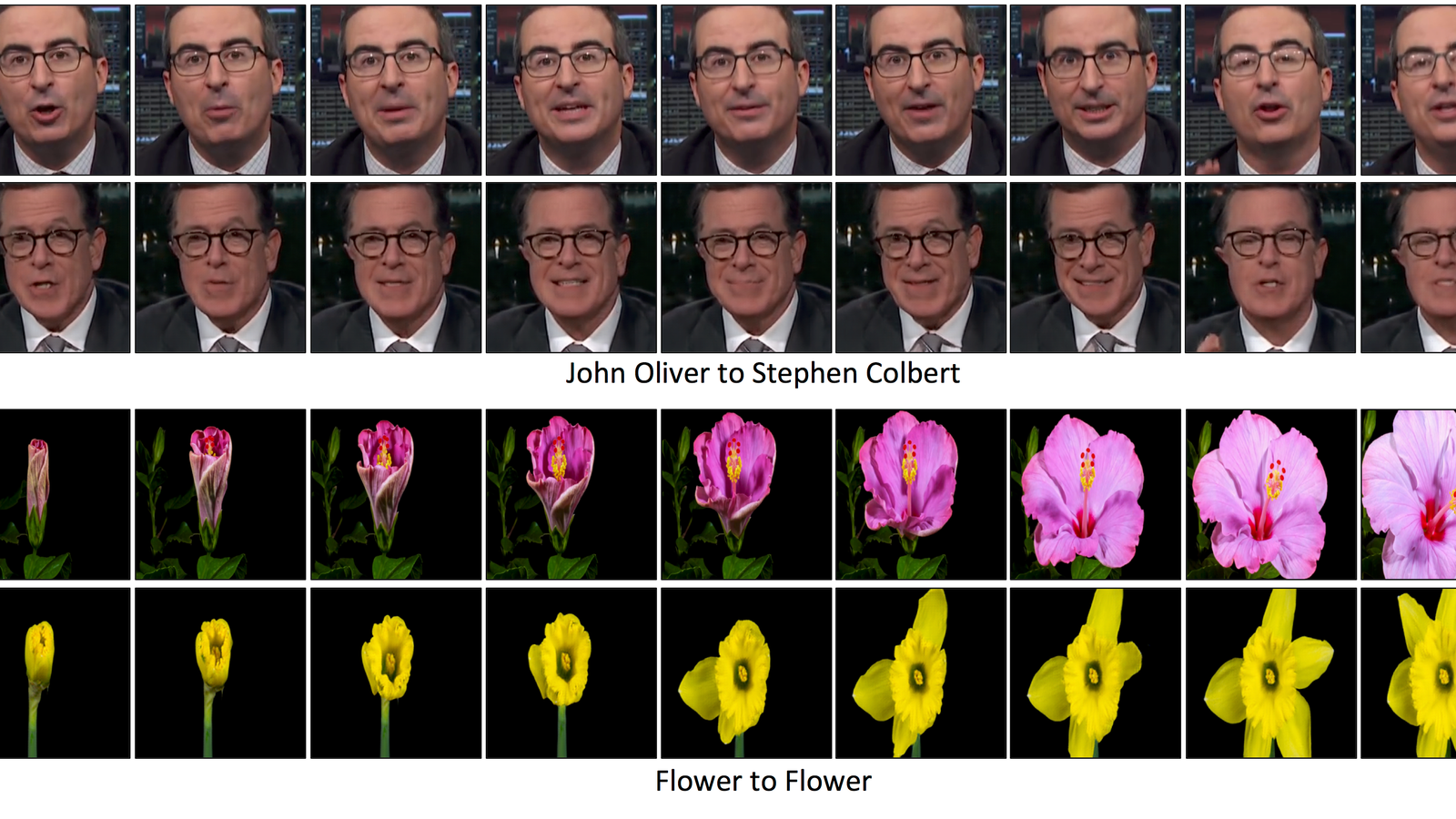

Carnegie Mellon Researchers Develop New Deepfake Method

Deepfakes, ultrarealistic fake videos manipulated using machine learning, are getting pretty convincing. And researchers continue to develop new methods to create these types of videos, for better or, more likely, for worse. The most recent method comes from researchers at Carnegie Mellon University, who have figured out a way to automatically transfer the “style” of one person to another.

Read More

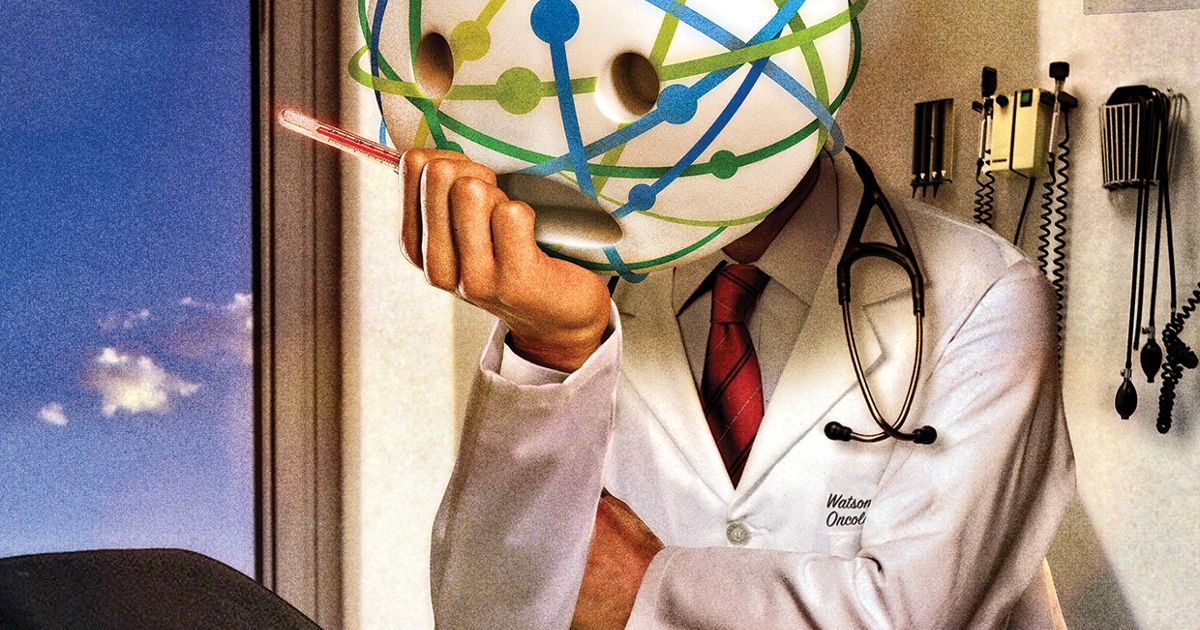

AI System Approved For Diabetic Retinopathy Diagnosis

A system designed by a University of Iowa ophthalmologist that uses artificial intelligence (AI) to detect diabetic retinopathy without a person interpreting the results earned Food and Drug Administration (FDA) authorization in April, following a clinical trial in primary care offices. Results of that study were published Aug. 28 online in Nature Digital Medicine, offering the first look at data that led to FDA clearance for IDx-DR, the first medical device that uses AI for the autonomous detection of diabetic retinopathy. The clinical trial, which also was the first study to prospectively assess the safety of an autonomous AI system in patient care, compared the performance of IDx-DR to the gold standard diagnostic for diabetic retinopathy, which is the leading cause of vision loss in adults and one of the most severe complications for the 30.3 million Americans living with diabetes.

Read More

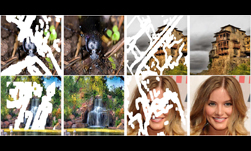

Deep Angel: AI that erases objects from images

Deep Angel is an artificial intelligence that erases objects from photographs. Part art, part technology, and part philosophy, Deep Angel shares Angelus Novus’ gaze into the future.

Read More

China Is Building a Fleet of Autonomous AI Submarines

A fleet of autonomous, AI-powered submarines is headed into hotly-contested Asian waterways. The vehicles will belong to the Chinese armed forces, and their mission capabilities are likely to raise concerned eyebrows in surrounding countries. If all goes to plan, the first submarines will launch in 2020.

Read More

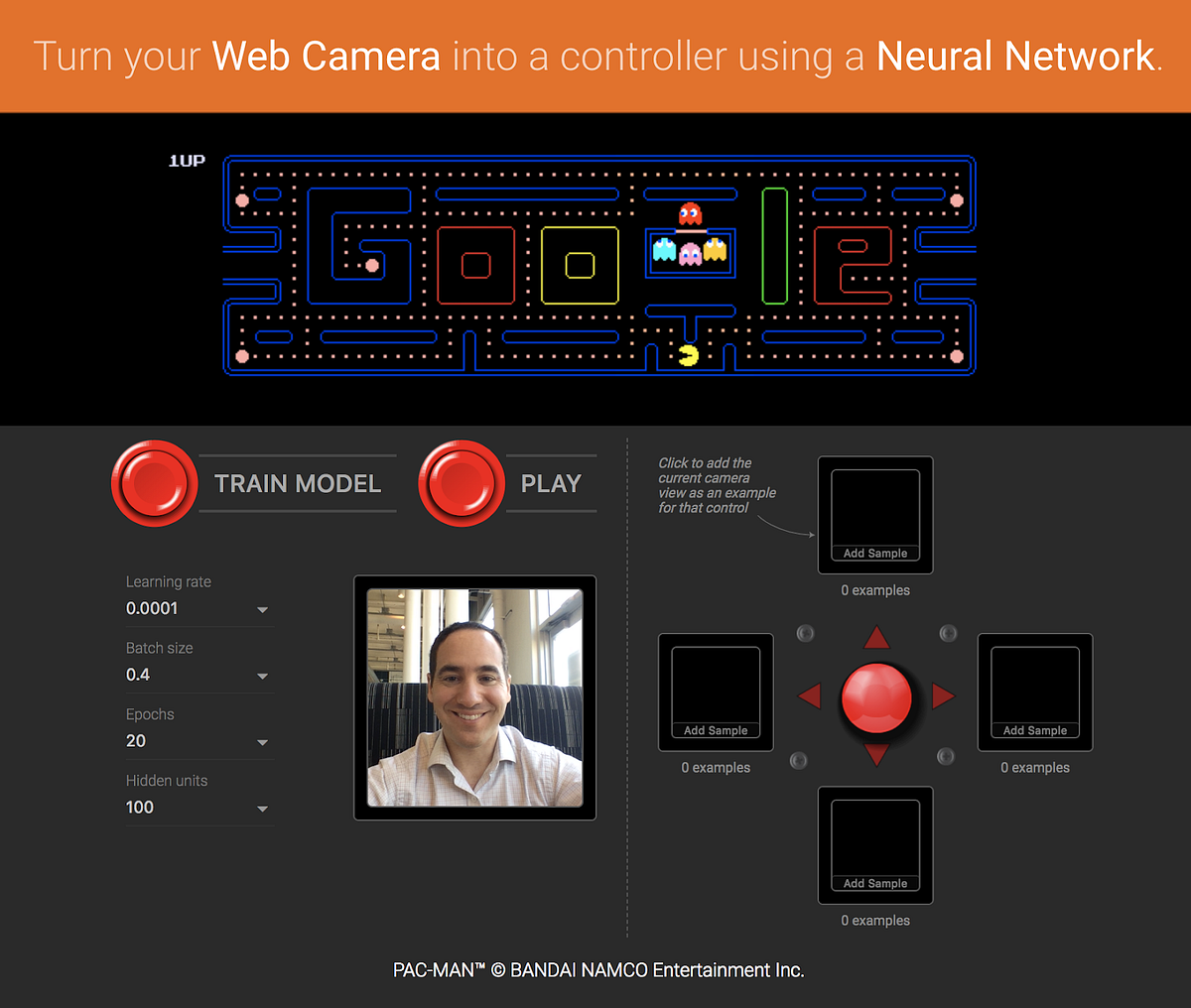

Collection of Interactive Machine Learning Examples

Each seed is a machine learning example you can start playing with. Explore, learn and grow them into whatever you like.

Read More

Foundations of Machine Learning

Bloomberg presents ‘Foundations of Machine Learning,’ a training course that was initially delivered internally to the company’s software engineers as part of its ‘Machine Learning EDU’ initiative. This course covers a wide variety of topics in machine learning and statistical modeling. The primary goal of the class is to help participants gain a deep understanding of the concepts, techniques and mathematical frameworks used by experts in machine learning.

Read More

Don’t Learn TensorFlow! Start with Keras or PyTorch Instead

So, you want to learn deep learning? Whether you want to start applying it to your business, base your next side project on it, or simply gain marketable skills – picking the right deep learning framework to learn is the essential first step towards reaching your goal. We strongly recommend that you pick either Keras or PyTorch.

Read More

Neural scene representation and rendering

There is more than meets the eye when it comes to how we understand a visual scene: our brains draw on prior knowledge to reason and to make inferences that go far beyond the patterns of light that hit our retinas. For example, when entering a room for the first time, you instantly recognise the items it contains and where they are positioned. If you see three legs of a table, you will infer that there is probably a fourth leg with the same shape and colour hidden from view.

Read More

Intel’s New Path to Quantum Computing

Intel’s director of quantum hardware, Jim Clarke, explains the company’s two quantum computing technologies, the limits of Tangle Lake’s technology, silicon spin qubits and how far away they are, the importance of cryogenic control electronics, the top quantum computing applications, what problems keep him up at night, and which will be more important: AI or quantum computing. IEEE Spectrum: What’s special about Tangle Lake?

Read More

AI Nationalism

The last few years have seen developments in machine learning research and commercialisation that have been pretty astounding. As just a few examples: Image recognition starts to achieve human-level accuracy at complex tasks, for example skin cancer classification. Big steps forward in applying neural networks to machine translation at Baidu, Google, Microsoft etc.

Read More

The 50 Best Free Datasets for Machine Learning

What are some open datasets for machine learning? We at Gengo decided to create the ultimate cheat sheet for high-quality datasets. These range from the vast (looking at you, Kaggle) to the highly specific (data for self-driving cars).

Read More

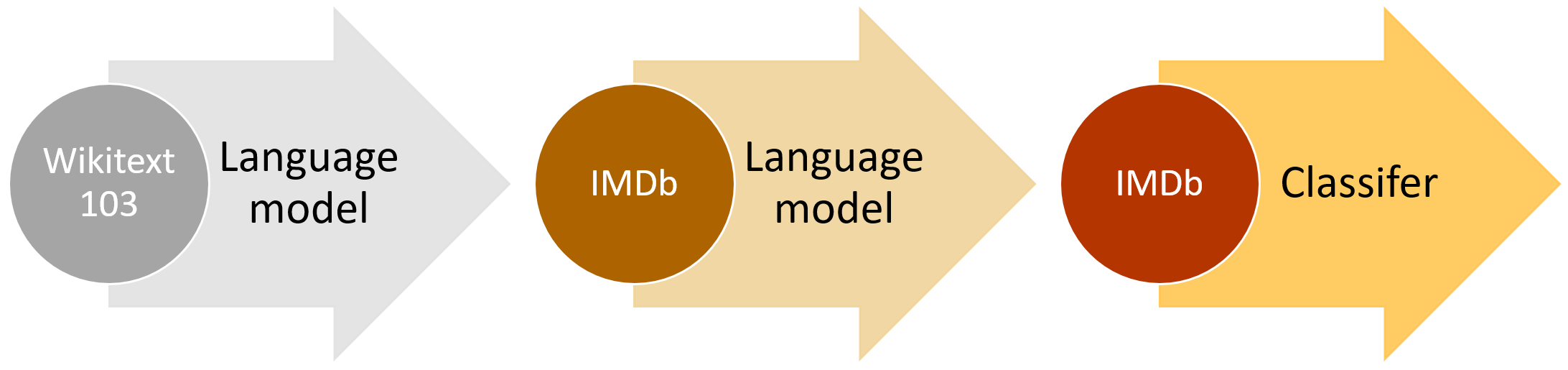

Improving Language Understanding with Unsupervised Learning

We’ve obtained state-of-the-art results on a suite of diverse language tasks with a scalable, task-agnostic system, which we’re also releasing. Our approach is a combination of two existing ideas: transformers and unsupervised pre-training. These results provide a convincing example that pairing supervised learning methods with unsupervised pre-training works very well; this is an idea that many have explored in the past, and we hope our result motivates further research into applying this idea on larger and more diverse datasets.

Read More

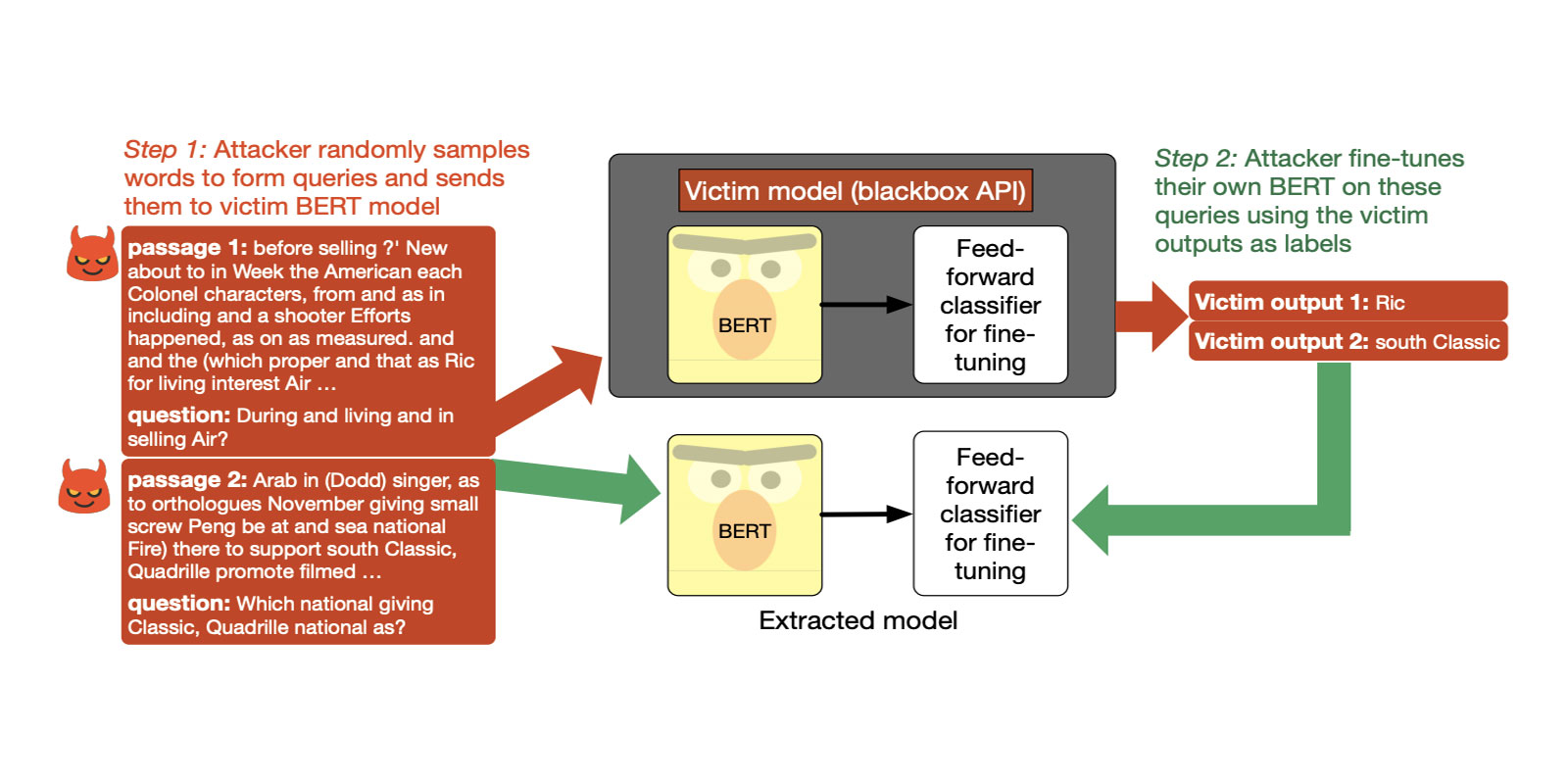

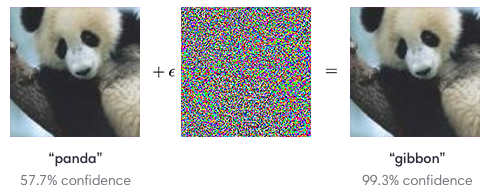

Attacks against machine learning – an overview

At a high level, attacks against classifiers can be broken down into three types. Adversarial inputs are specially crafted inputs developed with the aim of being reliably misclassified in order to evade detection; they include malicious documents designed to evade antivirus and emails attempting to evade spam filters. Data poisoning attacks involve feeding adversarial training data to the classifier.
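
For a linear classifier, the adversarial-input idea fits in a few lines. The sketch below assumes a hypothetical linear spam scorer with made-up weights and nudges every feature by at most `eps` against the sign of its weight (the linear-model case of the fast gradient sign method). That lowers the score by `eps` times the sum of the absolute weights and flips the label. Real spam and malware features are usually discrete, so practical evasion is more constrained than this continuous toy, and the sketch covers only adversarial inputs, not data poisoning.

```python
from typing import List, Sequence


def sign(v: float) -> int:
    """Return 1, 0 or -1 according to the sign of v."""
    return (v > 0) - (v < 0)


def score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear decision function w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w: Sequence[float], b: float, x: Sequence[float]) -> str:
    return "spam" if score(w, b, x) > 0 else "ham"


def evade(w: Sequence[float], b: float, x: Sequence[float], eps: float) -> List[float]:
    """Move each feature by eps against the current decision (FGSM for a linear model).

    For a linear score the gradient with respect to x is simply w, so stepping
    -eps * sign(w) lowers the score by eps * sum(|w_i|).
    """
    direction = 1 if score(w, b, x) > 0 else -1
    return [xi - eps * direction * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical weights and input, for illustration only.
w, b = [2.0, -1.0, 0.5], -1.0
x = [1.0, 0.5, 1.0]
x_adv = evade(w, b, x, eps=0.4)
print(classify(w, b, x), "->", classify(w, b, x_adv))  # spam -> ham
```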

Read More

Why do neural networks generalize so poorly?

Deep convolutional network architectures are often assumed to guarantee generalization for small image translations and deformations. In this paper we show that modern CNNs (VGG16, ResNet50, and InceptionResNetV2) can drastically change their output when an image is translated in the image plane by a few pixels, and that this failure of generalization also happens with other realistic small image transformations. Furthermore, the deeper the network the more we see these failures to generalize.
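
The paper traces much of this failure to subsampling (striding) that ignores the classical sampling theorem. The 1-D toy below is a hypothetical stand-in for a CNN layer, not the paper's experiment: it subsamples with stride 2 and then sums (global pooling), and shifting a single spike by one pixel makes the response vanish. Blurring before subsampling (classic anti-aliasing) makes the two responses match in this particular toy case, though it does not guarantee invariance in general.

```python
from typing import List


def shift_right(x: List[float], k: int = 1) -> List[float]:
    """Translate a 1-D 'image' right by k >= 1 pixels, padding with zeros."""
    return [0.0] * k + x[:-k]


def blur(x: List[float]) -> List[float]:
    """Two-tap box filter [0.5, 0.5] with zero padding at the right edge."""
    padded = x + [0.0]
    return [(padded[i] + padded[i + 1]) / 2 for i in range(len(x))]


def pooled_response(x: List[float], stride: int = 2, antialias: bool = False) -> float:
    """Toy layer: optional blur, strided subsampling, then global sum pooling."""
    if antialias:
        x = blur(x)
    return sum(x[::stride])


signal = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
shifted = shift_right(signal)

print(pooled_response(signal), pooled_response(shifted))  # 1.0 0.0
print(pooled_response(signal, antialias=True),
      pooled_response(shifted, antialias=True))           # 0.5 0.5
```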

Read More

Training a neural network in phase-change memory beats GPUs

Compared to a typical CPU, a brain is remarkably energy-efficient, in part because it combines memory, communications, and processing in a single execution unit, the neuron. A brain also has lots of them, which lets it handle lots of tasks in parallel. Attempts to run neural networks on traditional CPUs run up against these fundamental mismatches.

Read More

Learn Reinforcement Learning from scratch

Deep RL is a field that has seen vast amounts of research interest, including learning to play Atari games, beating pro players at Dota 2, and defeating Go champions. Contrary to many classical deep learning problems that often focus on perception (does this image contain a stop sign?), deep RL adds the dimension of actions that influence the environment (what is the goal, and how do I get there?).
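
A from-scratch illustration of that action dimension: tabular Q-learning on a hypothetical five-state corridor where the agent starts at the left end and is rewarded only for reaching the right end. The environment, the hyperparameters, and the episode count are assumptions picked so the sketch converges quickly. Deep RL replaces the lookup table with a neural network, but the update target has the same shape.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the terminal goal
ACTIONS = (-1, +1)    # move left or move right


def step(state: int, action: int):
    """Deterministic corridor: walls at both ends, reward 1 only on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy action choice, breaking ties at random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Q-learning target: r + gamma * max_a' Q(s', a'), or just r at the goal.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state, steps = nxt, steps + 1
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # the greedy action in every non-terminal state should be +1 (move right)
```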

Read More

Horovod: Distributed Training Framework for TensorFlow, Keras, and PyTorch

Horovod is a distributed training framework for TensorFlow, Keras, and PyTorch. The goal of Horovod is to make distributed Deep Learning fast and easy to use.
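
Horovod is built around the ring-allreduce pattern for combining gradients across workers. The single-process simulation below is a sketch of that pattern, not Horovod's implementation or API. It shows the two phases: a reduce-scatter, after which each of the `n` workers owns one fully summed chunk, and an allgather, in which those chunks circulate until every worker holds the full sum. It assumes the vector length divides evenly by the number of workers. Each worker sends only 2(n-1) chunks of size length/n, which is why the pattern scales well.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate ring-allreduce (sum) across len(buffers) workers in one process.

    Assumes every buffer has the same length, divisible by the number of workers.
    """
    n = len(buffers)
    length = len(buffers[0])
    if length % n != 0:
        raise ValueError("this sketch needs the vector to split evenly into n chunks")
    size = length // n
    data = [list(buf) for buf in buffers]  # each worker's local copy

    def span(c: int) -> range:
        """Indices belonging to chunk c."""
        return range(c * size, (c + 1) * size)

    def ring_step(chunk_of, accumulate: bool) -> None:
        # Every worker i sends chunk chunk_of(i) to its right-hand neighbour.
        # Messages are built first so that all sends in a step are "simultaneous".
        messages = [(i, chunk_of(i), [data[i][j] for j in span(chunk_of(i))]) for i in range(n)]
        for i, c, payload in messages:
            dst = (i + 1) % n
            for j, v in zip(span(c), payload):
                data[dst][j] = data[dst][j] + v if accumulate else v

    # Phase 1, reduce-scatter: after n-1 steps worker i owns the full sum of chunk (i+1) % n.
    for k in range(n - 1):
        ring_step(lambda i: (i - k) % n, accumulate=True)
    # Phase 2, allgather: circulate the finished chunks until every worker has all of them.
    for k in range(n - 1):
        ring_step(lambda i: (i + 1 - k) % n, accumulate=False)
    return data


grads = [[1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
         [10.0, 20.0, 30.0, 40.0, 50.0, 60.0],
         [100.0, 200.0, 300.0, 400.0, 500.0, 600.0]]
summed = ring_allreduce(grads)
averaged = [[v / len(grads) for v in row] for row in summed]  # data-parallel SGD uses the mean
print(summed[0])
```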

Read More

AI winter is well on its way

Deep learning has been at the forefront of the so-called AI revolution for quite a few years now, and many people believed that it was the silver bullet that would take us to the world of wonders of technological singularity (general AI). Many bets were made in 2014, 2015 and 2016, when new boundaries were still being pushed, such as AlphaGo. Companies such as Tesla announced through their CEOs that fully self-driving cars were very close, to the point that Tesla even started selling that option to customers [to be enabled by a future software update].

Read More

Americans Less Trusting of Self-Driving Safety Following High-Profile Accidents

Americans are less trusting of self-driving cars following two deadly accidents involving autonomous or semi-autonomous vehicles, with half of U.S. adults considering those automobiles less safe than human drivers, according to a new poll. A Morning Consult survey conducted March 29-April 1 among a national sample of 2,202 adults found that 27 percent of respondents said self-driving cars are safer than human drivers, while 50 percent said autonomous vehicles are less safe. Eight percent said the automobiles are on par with human drivers when it comes to safety.

Read More

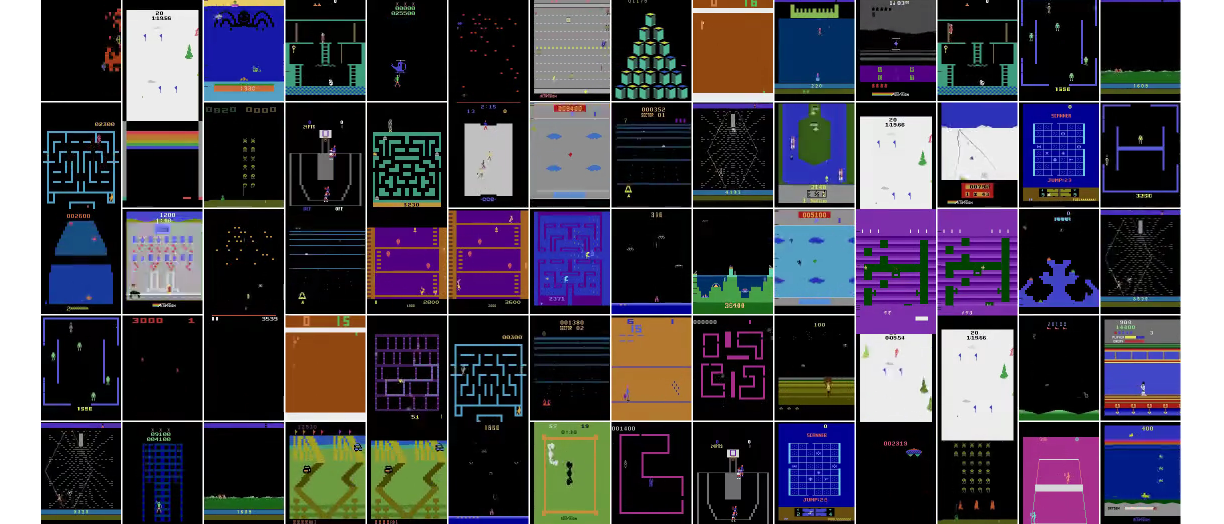

OpenAI: Gym Retro

We’re releasing the full version of Gym Retro, a platform for reinforcement learning research on games. This brings our publicly-released game count from around 70 Atari games and 30 Sega games to over 1,000 games across a variety of backing emulators. We’re also releasing the tool we use to add new games to the platform.

Read More

30+ Machine Learning Resources

For almost all machine learning projects, the main steps of the ideal solution remain the same. Briefly, we go through the steps below every time: understand the data; clean it up, fix the missing values, extract new features, and select the best ones; build the model, compare it with other ones, tune hyperparameters, and find the right metric to evaluate it; then iterate this process over and over again until you believe you have the best solution :)

Read More

Intel AI Lab open-sources library for deep learning-driven NLP

The Intel AI Lab has open-sourced a library for natural language processing to help researchers and developers give conversational agents like chatbots and virtual assistants the smarts necessary to function, such as named entity recognition, intent extraction, and semantic parsing to identify the action a person wants to take from their words. The first-ever conference by Intel for AI developers is being held Wednesday and Thursday, May 23 and 24, at the Palace of Fine Arts in San Francisco. The Intel AI Lab now employs about 40 data scientists and researchers and works with divisions of the company developing products like the nGraph framework and hardware like Nervana Neural Network chips, Liu said.

Read More

3D Face Reconstruction with Position Map Regression Networks

Position Map Regression Networks (PRN) is a method to jointly regress dense alignment and 3D face shape in an end-to-end manner. In this article, I’ll provide a short explanation and discuss its applications in computer vision. In the last few decades, a lot of important research groups in computer vision have made amazing advances in 3D face reconstruction and face alignment.

Read More

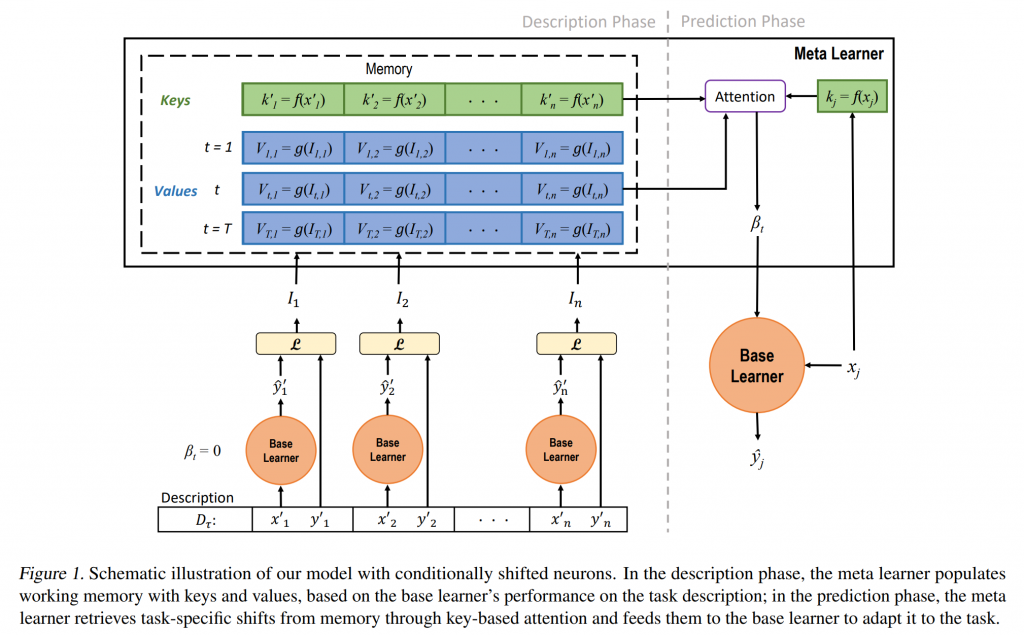

Deep Learning Research: Creating Adaptable Meta-Learning Models

Adaptability is one of the key cognitive abilities that define us as humans. Even as babies, we can intuitively shift between similar tasks even if we don’t have prior training on them. This contrasts with the traditional train-and-test approach of most artificial intelligence (AI) systems, which require an agent to go through massive amounts of training before it can master a specific task.

Read More

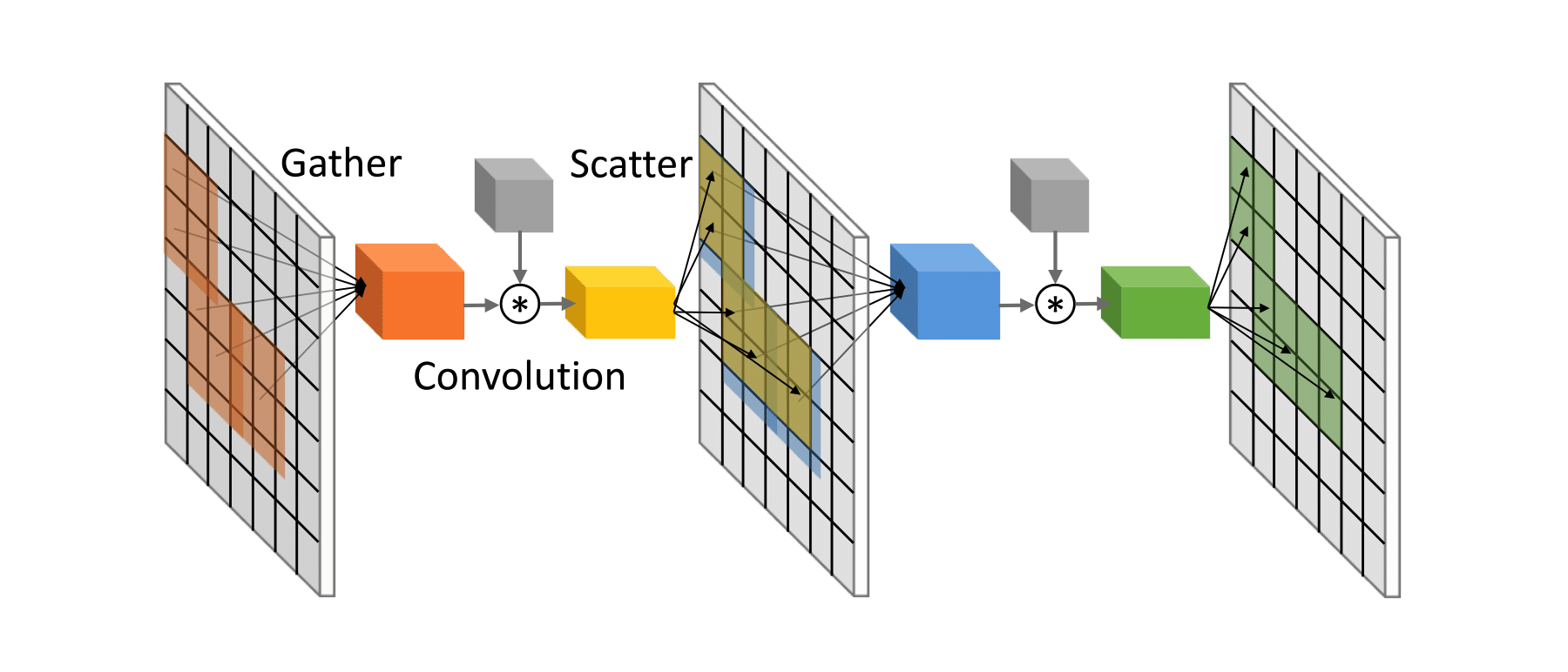

Scanner: Processing Terabytes of Video on Hundreds of Machines

There are now many state-of-the-art computer vision algorithms which are a git clone away. We found that existing systems for distributed data analysis were not well suited to dealing with the computational challenges of applying computer vision algorithms to terabyte or petabyte sized video collections, so we designed and built a system called Scanner to make efficient video analysis easier.

Read More

Tensor Compilers: Comparing PlaidML, Tensor Comprehensions, and TVM

One of the most complex and performance critical parts of any machine learning framework is its support for device specific acceleration. Indeed, without efficient GPU acceleration, much of modern ML research and deployment would not be possible. This acceleration support is also a critical bottleneck, both in terms of adding support for a wider range of hardware targets (including mobile) as well as for writing new research kernels.

Read More

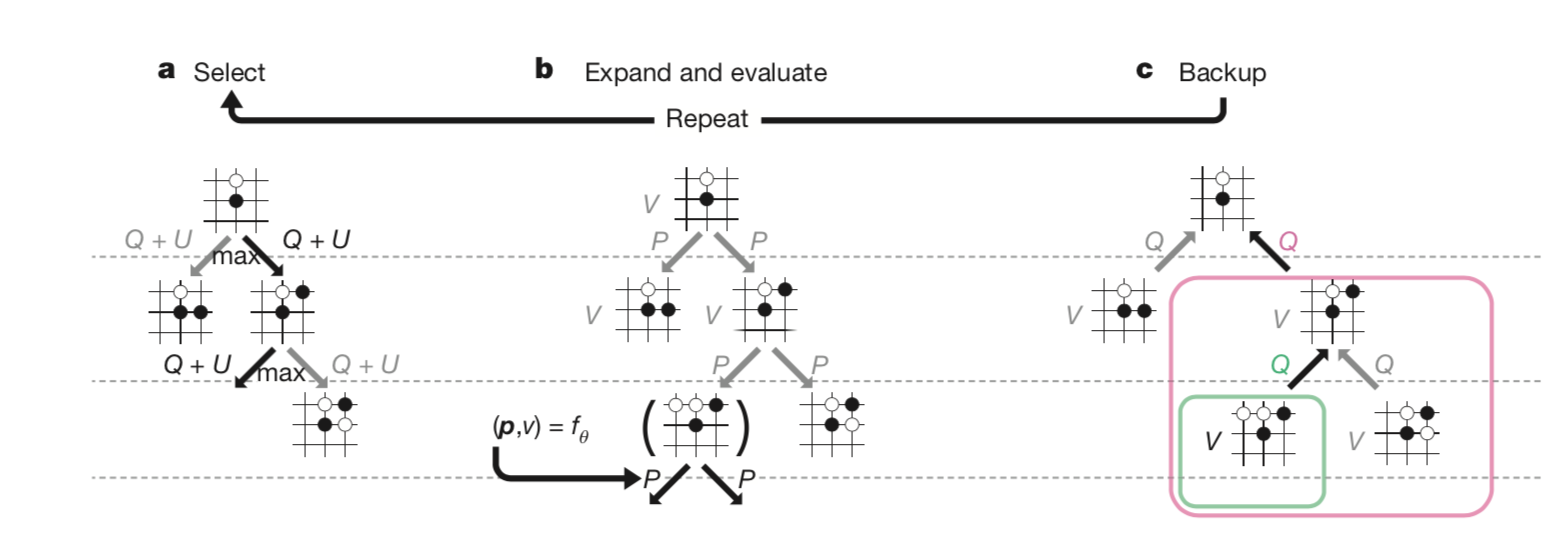

A Deep Dive into Monte Carlo Tree Search

The very first Go AIs used multiple modules to handle each aspect of playing Go – life and death, capturing races, opening theory, endgame theory, and so on. The idea was that by having experts program each module using heuristics, the AI would become an expert in all areas of the game. All that came to a grinding halt with the introduction of Monte Carlo Tree Search (MCTS) around 2008.
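
A compact sketch of MCTS with the UCB1 selection rule (UCT), applied to a hypothetical toy game rather than Go: Nim with moves of 1 to 3 stones, where taking the last stone wins. It shows the four phases of every iteration: selection, expansion, random simulation, and backpropagation. The game, the exploration constant `c=1.4`, and the iteration count are assumptions chosen for illustration; Go engines run the same loop on a vastly larger tree. In this game a position is lost for the player to move exactly when the pile is a multiple of 4, which gives an easy check on the chosen move.

```python
import math
import random
from typing import List, Optional

MOVES = (1, 2, 3)  # a player removes 1, 2 or 3 stones; taking the last stone wins


class Node:
    def __init__(self, stones: int, parent: Optional["Node"] = None, move: int = 0) -> None:
        self.stones = stones            # stones left for the player to move here
        self.parent = parent
        self.move = move                # move that led from parent to this node
        self.children: List["Node"] = []
        self.untried = [m for m in MOVES if m <= stones]
        self.visits = 0
        self.wins = 0.0                 # wins for the player who made `move`

    def ucb1(self, c: float) -> float:
        """UCT score: average win rate plus an exploration bonus."""
        return self.wins / self.visits + c * math.sqrt(math.log(self.parent.visits) / self.visits)


def rollout(stones: int, rng: random.Random) -> bool:
    """Random playout; True if the player to move at `stones` takes the last stone."""
    first_player_moving = True
    while stones > 0:
        stones -= rng.choice([m for m in MOVES if m <= stones])
        if stones == 0:
            return first_player_moving
        first_player_moving = not first_player_moving
    return False  # empty pile: the previous player already won


def mcts(stones: int, iterations: int = 2000, c: float = 1.4, seed: int = 0) -> int:
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes by UCT score.
        while not node.untried and node.children:
            node = max(node.children, key=lambda ch: ch.ucb1(c))
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: did the player who moved into `node` win the random playout?
        mover_won = not rollout(node.stones, rng)
        # 4. Backpropagation: flip perspective at every level on the way up.
        while node is not None:
            node.visits += 1
            node.wins += 1.0 if mover_won else 0.0
            mover_won = not mover_won
            node = node.parent
    # Returning the most visited root move is the usual final choice.
    return max(root.children, key=lambda ch: ch.visits).move


print(mcts(5), mcts(6))
```

With 5 or 6 stones the winning reply leaves a multiple of 4 (take 1 or take 2 respectively), and the search should settle on it without any Nim-specific knowledge, which is the property that let MCTS displace hand-written heuristic modules.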

Read More

Crossbar Pushes Resistive RAM into Embedded AI

Resistive RAM technology developer Crossbar says it has inked a deal with aerospace chip maker Microsemi allowing the latter to embed Crossbar’s nonvolatile memory on future chips. The move follows selection of Crossbar’s technology by a leading foundry for advanced manufacturing nodes. Crossbar is counting on resistive RAM (ReRAM) to enable artificial intelligence systems whose neural networks are housed within the device rather than in the cloud.

Read More

AI and Compute

We’re releasing an analysis showing that since 2012, the amount of compute used in the largest AI training runs has been increasing exponentially with a 3.5-month doubling time (by comparison, Moore’s Law had an 18-month doubling period). Since 2012, this metric has grown by more than 300,000x (an 18-month doubling period would yield only a 12x increase). Improvements in compute have been a key component of AI progress, so as long as this trend continues, it’s worth preparing for the implications of systems far outside today’s capabilities.
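
The two growth figures can be checked against each other. Assuming the 300,000x increase accrued at a steady 3.5-month doubling time, it implies roughly 64 months (a little over five years) of growth, and an 18-month doubling over that same span gives roughly 12x, in line with the quoted comparison:

```python
import math


def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth after `months` at a constant doubling period."""
    return 2.0 ** (months / doubling_months)


# Months needed to reach 300,000x at a 3.5-month doubling time: 3.5 * log2(300,000).
elapsed_months = 3.5 * math.log2(300_000)
# Growth over the same span if compute had doubled every 18 months instead.
moore_factor = growth_factor(elapsed_months, 18.0)

print(f"{elapsed_months:.1f} months, {moore_factor:.1f}x")  # 63.7 months, 11.6x
```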

Read More

The Nengo Neural Simulator

Nengo is a graphical and scripting-based Python package for simulating large-scale neural networks. Nengo can create sophisticated spiking or non-spiking neural simulations with sensible defaults in a few lines of code. Yet Nengo is highly extensible and flexible.

Read More

How an AI Startup Could Defeat Now Unbeatable Bugs

The need for new medications is higher than ever, but so is the cost and time to bring them to market. Developing a new drug can cost billions and take as long as 14 years, according to the U.S. Food and Drug Administration. Yet with all that effort, only 8 percent of drugs make it to market, the FDA said.

Read More

Prefrontal cortex as a meta-reinforcement learning system

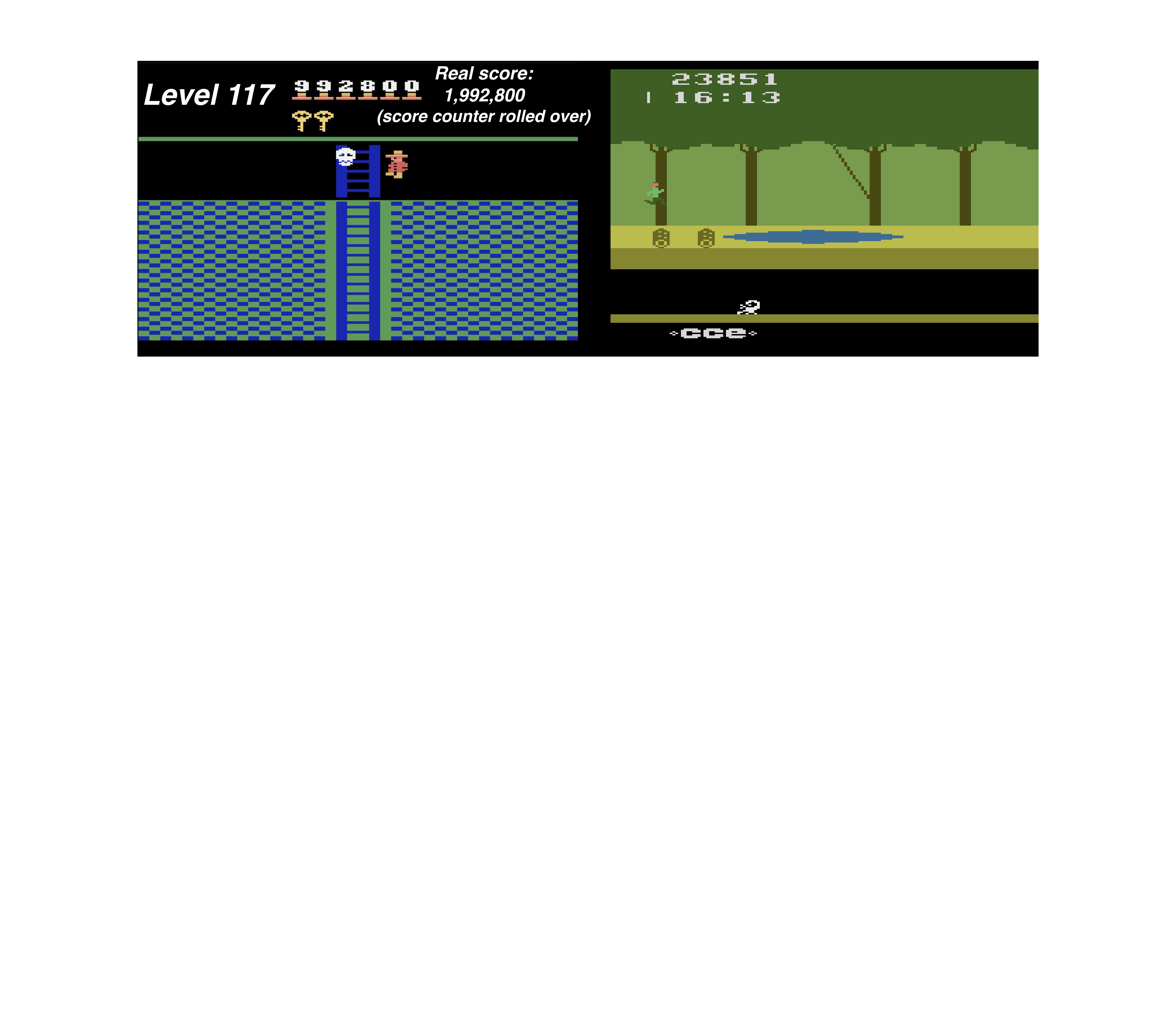

Recently, AI systems have mastered a range of video-games such as Atari classics Breakout and Pong. But as impressive as this performance is, AI still relies on the equivalent of thousands of hours of gameplay to reach and surpass the performance of human video game players. In contrast, we can usually grasp the basics of a video game we have never played before in a matter of minutes.

Read More

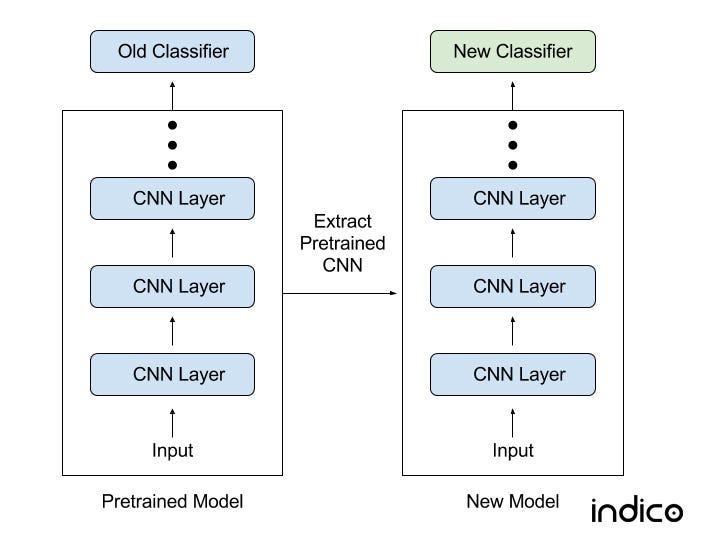

Transfer Learning

Transfer Learning is the reuse of a pre-trained model on a new problem. It is currently very popular in the field of Deep Learning because it enables you to train Deep Neural Networks with comparatively little data. This is very useful since most real-world problems typically do not have millions of labeled data points to train such complex models.

Read More

Machine Learning for Text Classification Using SpaCy in Python

spaCy is a popular and easy-to-use natural language processing library in Python. It provides current state-of-the-art accuracy and speed levels, and has an active open source community. However, spaCy is a relatively new NLP library and is not as widely adopted as NLTK.

Read More

The fall of RNN / LSTM

It is the year 2014 and LSTM and RNN make a great comeback from the dead. We all read Colah’s blog and Karpathy’s ode to RNN. But we were all young and inexperienced.

Read More

Germany adopts first ethics standards for autonomous driving systems

Federal transport minister Alexander Dobrindt presented a report to Germany’s cabinet seeking to establish guidelines for the future programming of ethical standards into automated driving software. The report was prepared by an automated driving ethics commission composed of scientists and legal experts, which produced 20 guidelines to be used by the automotive industry when creating automated driving systems. Shortly after its introduction, Dobrindt announced that the cabinet had ratified the guidelines, making Germany the first government in the world to put such measures in place.

Read More

Automatic Photography with Google Clips

How could we train an algorithm to recognize interesting moments? As with most machine learning problems, we started with a dataset. We created a dataset of thousands of videos in diverse scenarios where we imagined Clips being used.

Read More

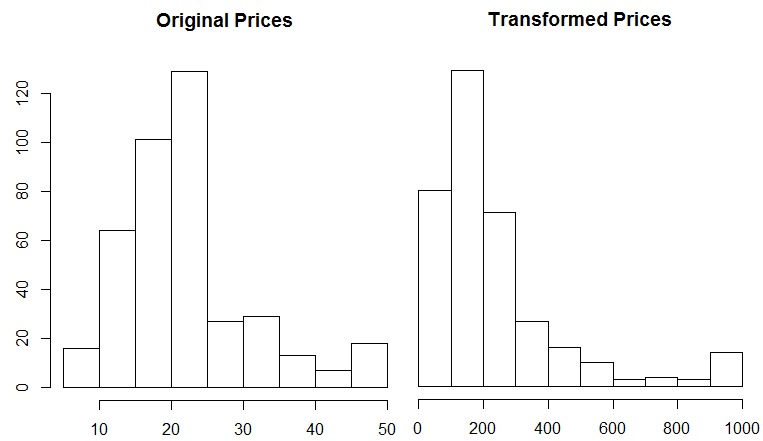

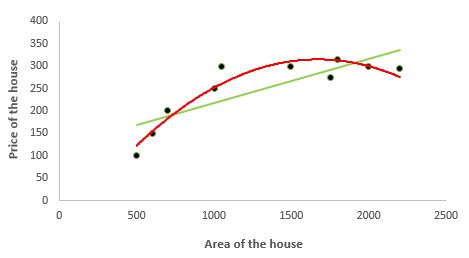

Custom deep learning loss functions with Keras for R

I recently started reading “Deep Learning with R”, and I’ve been really impressed with the support that R has for digging into deep learning. One of the use cases presented in the book is predicting prices for homes in Boston, which is an interesting problem because homes can have such wide variations in values. This is a machine learning problem that is probably best suited for classical approaches, such as XGBoost, because the data set is structured rather than perceptual data.
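
Because home prices span a wide range, a loss that penalizes relative rather than absolute error is a natural candidate. As one hedged example (the article's own custom losses may differ), here is mean squared logarithmic error in plain Python. Keras ships a built-in version of this loss, and a custom Keras loss would express the same formula with backend tensor operations instead of Python lists. The sketch shows that a 10% miss costs about the same on a cheap home as on an expensive one, while plain MSE punishes the expensive miss 100 times more.

```python
import math
from typing import Sequence


def mean_squared_log_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """MSLE: mean of (log(1 + pred) - log(1 + true))**2; sensitive to relative error."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    return sum((math.log1p(p) - math.log1p(t)) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Plain MSE, for comparison."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# A 10% overestimate on a cheap home and on one ten times as expensive (prices in $1,000s).
cheap, pricey = ([100.0], [110.0]), ([1000.0], [1100.0])
print(mean_squared_log_error(*cheap), mean_squared_log_error(*pricey))
print(mean_squared_error(*cheap), mean_squared_error(*pricey))
```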

Read More

AI Can Generate ‘Doom’ Levels Now

Researchers recently successfully trained neural networks to generate level maps for Doom that, they report in a paper published to the arXiv preprint server in April, “proved to be interesting” to play. The work was carried out by researchers from the Polytechnic University of Milan and used Generative Adversarial Networks, a recent innovation in the field of deep learning.

Read More

AI trained to navigate develops brain-like location tracking

Now that DeepMind has mastered Go, the company is turning its AI research to navigation. Navigation relies on knowing where you are in space relative to your surroundings and continually updating that knowledge as you move. DeepMind scientists trained neural networks to navigate like this in a square arena, mimicking the paths that foraging rats took as they explored the space.

Read More

Intel Starts R&D Effort in Probabilistic Computing for AI

Intel announced today that it is forming a strategic research alliance to take artificial intelligence to the next level. Autonomous systems don’t have good enough ways to respond to the uncertainties of the real world, and they don’t have a good enough way to understand how the uncertainties of their sensors should factor into the decisions they need to make. According to Intel CTO Mike Mayberry the answer is “probabilistic computing”, which he says could be AI’s next wave.

Read More

Artificial Neural Nets Grow Brainlike Navigation Cells

Having the sense to take a shortcut, the most direct route from point A to point B, doesn’t sound like a very impressive test of intelligence. Yet according to a new report appearing today in Nature, in which researchers describe the performance of their new navigational artificial intelligence, the system’s ability to explore complex simulated environments and find the shortest route to a goal put it in a class previously reserved for humans and other living things. The surprising key to the system’s performance was that while learning how to navigate, the neural net spontaneously developed the equivalent of “grid cells,” sets of brain cells that enable at least some mammals to track their location in space.

Read More

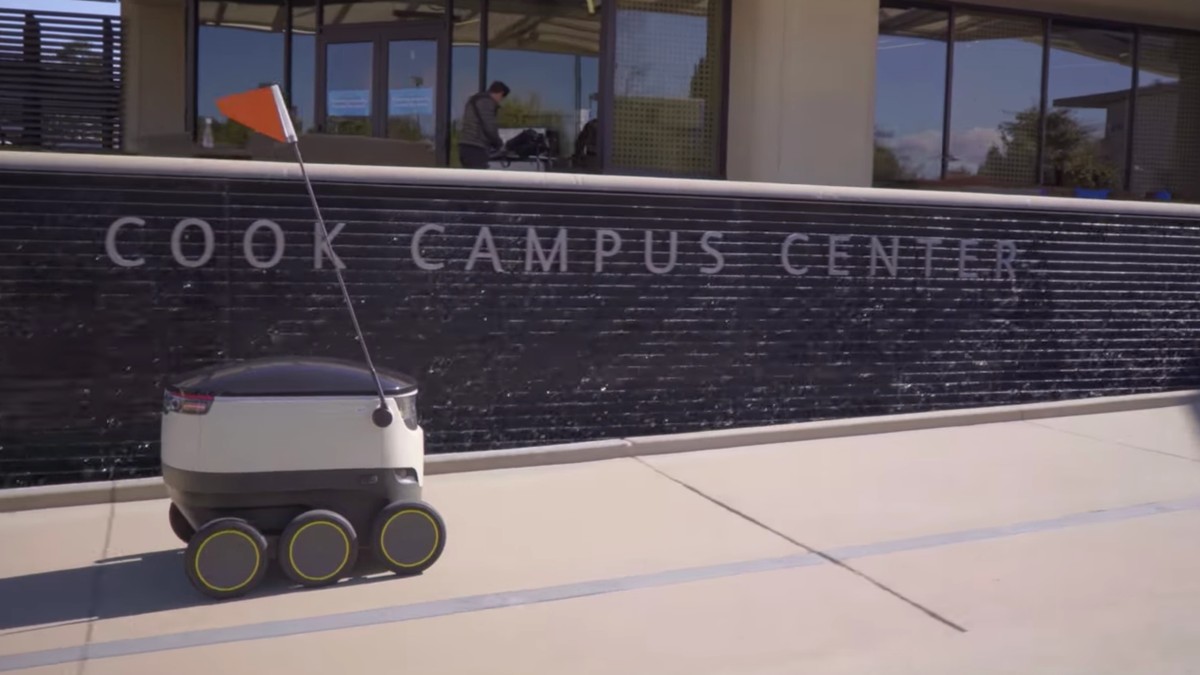

Delivery Robots Will Rely on Human Kindness and Labor

In April, Starship Technologies announced that it is going to launch “robot delivery services for campuses.” Its goal is to deploy at least 1,000 delivery robots to “corporate and academic campuses in Europe and the U.S.” within the next year. It’s the latest in a long list of automated delivery schemes from tech companies big and small.

Read More

Google: Deep Learning for Electronic Health Records

When patients get admitted to a hospital, they have many questions about what will happen next. When will I be able to go home? Will I get better?

Read More

Facebook’s Field Guide to Machine Learning video series

The Facebook Field Guide to Machine Learning is a six-part video series developed by the Facebook ads machine learning team. The series shares best real-world practices and provides practical tips about how to apply machine-learning capabilities to real-world problems. Machine learning and artificial intelligence are in the headlines everywhere today, and there are many resources to teach you about how the algorithms work and demonstrations of the latest cutting-edge research.

Read More

Cutting Edge Deep Learning for Coders, Part 2

Welcome to the new 2018 edition of fast.ai’s second 7-week course, Cutting Edge Deep Learning For Coders, Part 2, where you’ll learn the latest developments in deep learning, how to read and implement new academic papers, and how to solve challenging end-to-end problems such as natural language translation. You’ll develop a deep understanding of neural network foundations, the most important recent advances in the field, and how to implement them in the world’s fastest deep learning libraries, fastai and PyTorch. This course contains all new material, so if you’ve already completed the 2017 version, you’ll find plenty here to keep you busy too!

Read More

Real-Time AI: Microsoft Announces Preview of Project Brainwave

That’s where Microsoft’s Project Brainwave could come in. Project Brainwave is a hardware architecture designed to accelerate real-time AI calculations. The Project Brainwave architecture is deployed on a type of computer chip from Intel called a field programmable gate array, or FPGA, to make real-time AI calculations at competitive cost and with the industry’s lowest latency, or lag time.

Read More

The economics of artificial intelligence

When looking at artificial intelligence from the perspective of economics, we ask the same, single question that we ask with any technology: What does it reduce the cost of? Economists are good at taking the fun and wizardry out of technology and leaving us with this dry but illuminating question. The answer reveals why AI is so important relative to many other exciting technologies.

Read More

16-year-old on finding primes with neural networks

Inspired by the triumphs of the “AlphaGo” project by DeepMind, I focused my research on the extensions and optimisation techniques that are so common in neural network design. There are many useful packages in machine learning, such as TensorFlow, which can generate complex neural networks that work well very quickly, but for this project I really wanted to develop an understanding of the inner workings of modern neural networks. So I went through the arduous calculus myself, producing a much less efficient but more rewarding program.
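
To show the kind of hand-derived calculus involved, the sketch below trains a single sigmoid neuron with binary cross-entropy, where the chain rule collapses to the gradient `p - y` with respect to the pre-activation. Learning logical AND is a hypothetical stand-in task; the original project's network and its prime-finding setup are not reproduced here.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data: List[Example], lr: float = 1.0,
                 epochs: int = 2000) -> Tuple[List[float], float]:
    """Stochastic gradient descent for one sigmoid neuron with binary cross-entropy loss."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            # Chain rule by hand: dL/dp * dp/dz = (p - y) / (p(1 - p)) * p(1 - p) = p - y.
            grad_z = p - y
            w = [wi - lr * grad_z * xi for wi, xi in zip(w, x)]
            b -= lr * grad_z
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) > 0.5)


AND = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]
w, b = train_neuron(AND)
print([predict(w, b, x) for x, _ in AND])
```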

Read More

Announcing PyTorch 1.0 for both research and production

PyTorch 1.0 takes the modular, production-oriented capabilities from Caffe2 and ONNX and combines them with PyTorch’s existing flexible, research-focused design to provide a fast, seamless path from research prototyping to production deployment for a broad range of AI projects. With PyTorch 1.0, AI developers can both experiment rapidly and optimize performance through a hybrid front end that seamlessly transitions between imperative and declarative execution modes. The technology in PyTorch 1.0 has already powered many Facebook products and services at scale, including performing 6 billion text translations per day.

Read More

Advancing state-of-the-art image recognition with deep learning on hashtags

Image recognition is one of the pillars of AI research and an area of focus for Facebook. Our researchers and engineers aim to push the boundaries of computer vision and then apply that work to benefit people in the real world, for example by using AI to generate audio captions of photos for visually impaired users. In order to improve these computer vision systems and train them to consistently recognize and classify a wide range of objects, we need data sets with billions of images instead of just millions, as is common today.

Read More

Embodied Question Answering: A goal-driven approach to autonomous agents

Facebook AI Research (FAIR) has developed a collection of virtual environments for training and testing autonomous agents, as well as novel AI agents that learn to intelligently explore those environments. To test this goal-driven approach, FAIR is collaborating with Georgia Tech on a multistep AI task called Embodied Question Answering, or EmbodiedQA.

Read More

ONNX expansion speeds AI development

Facebook helped develop the Open Neural Network Exchange (ONNX) format to allow AI engineers to more easily move models between frameworks without having to do resource-intensive custom engineering. Today, we’re sharing that ONNX is adding support for additional AI tools, including Apple Core ML converter technology, Baidu’s PaddlePaddle platform, and Qualcomm SNPE.

Read More

We Need Bug Bounties for Bad Algorithms

Algorithmic auditing is a growing discipline whose researchers specialize in computer science and human-computer interaction. They employ a variety of methods to tinker with algorithms and uncover how they work, and their research has already sparked public discussions and regulatory investigations into the most dominant and powerful algorithms of the Information Age. From Uber and Booking.com to Google and Facebook, to name a few, these friendly auditors have already uncovered bias and deception in the algorithms that control our lives.

Read More

Facebook Open Sources ELF OpenGo

Inspired by DeepMind’s work, we kicked off an effort earlier this year to reproduce their recent AlphaGo Zero results using FAIR’s Extensible, Lightweight Framework (ELF) for reinforcement learning research. The goal was to create an open source implementation of a system that would teach itself how to play Go at the level of a professional human player or better. By releasing our code and models we hoped to inspire others to think about new applications and research directions for this technology.

Read More

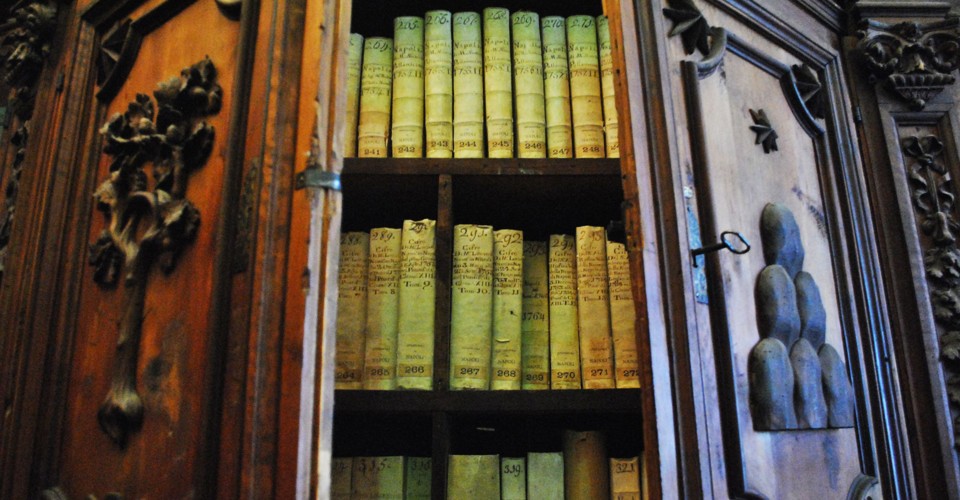

Artificial Intelligence Opens the Vatican Secret Archives

Known as In Codice Ratio, it uses a combination of artificial intelligence and optical-character-recognition (OCR) software to scour these neglected texts and make their transcripts available for the very first time. If successful, the technology could also open up untold numbers of other documents at historical archives around the world.

Read More

What tech calls “AI” isn’t really AI

First, the problem itself is poorly defined: what do you mean by intelligence? Nature, with all her blind hideous strength, endless experimentation and wild wastes of infinite time, has only managed the trick once (by our narrow definition), with one species of tree-ape on a rolling green world. Even if you believe there’s intelligent biological life elsewhere, the stats aren’t promising.

Read More

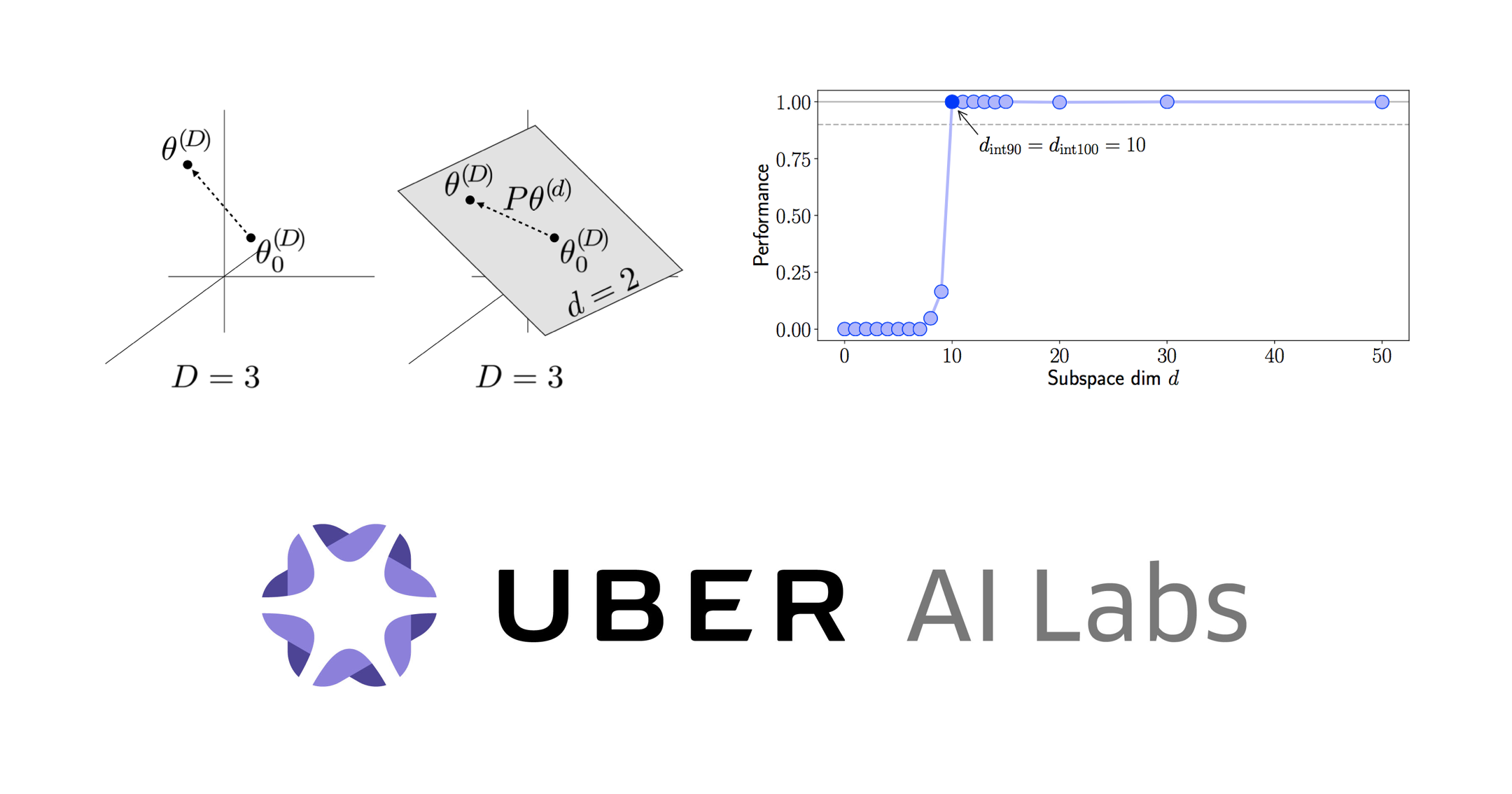

Measuring the Intrinsic Dimension of Objective Landscapes

In our paper, Measuring the Intrinsic Dimension of Objective Landscapes, to be presented at ICLR 2018, we contribute to this ongoing effort by developing a simple way of measuring a fundamental network property known as intrinsic dimension. In the paper, we develop intrinsic dimension as a quantification of the complexity of a model in a manner decoupled from its raw parameter count, and we provide a simple way of measuring this dimension using random projections. We find that many problems have smaller intrinsic dimension than one might suspect.
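The random-projection idea can be sketched in a few lines of NumPy. As we read the paper's setup, the full parameter vector is written as theta = theta_0 + P theta_d, where theta_0 is a frozen random initialization, P is a fixed random D x d matrix, and only the d entries of theta_d are trained. The function names, the unit-length column normalization, and the toy objective below are our own assumptions for illustration, not the authors' code.

```python
import numpy as np

def make_subspace(D, d, seed=0):
    """Draw a frozen random starting point theta0 (length D) and a fixed random projection P (D x d)."""
    rng = np.random.default_rng(seed)
    theta0 = rng.normal(size=D)
    P = rng.normal(size=(D, d))
    P /= np.linalg.norm(P, axis=0)  # assumption: unit-length columns
    return theta0, P

def full_params(theta0, P, theta_d):
    """Map the d trainable coordinates back to the full D-dimensional parameter vector."""
    return theta0 + P @ theta_d

def train_in_subspace(grad_fn, theta0, P, steps=2000, lr=0.1):
    """Plain gradient descent on theta_d only; theta0 and P are never updated."""
    theta_d = np.zeros(P.shape[1])
    for _ in range(steps):
        g_full = grad_fn(full_params(theta0, P, theta_d))
        theta_d -= lr * (P.T @ g_full)  # chain rule: dL/dtheta_d = P^T dL/dtheta
    return theta_d

# Hypothetical toy objective: only the first two of D = 50 parameters matter.
target = np.array([1.0, -2.0])

def loss(theta):
    return float(np.sum((theta[:2] - target) ** 2))

def grad(theta):
    g = np.zeros_like(theta)
    g[:2] = 2.0 * (theta[:2] - target)
    return g

theta0, P = make_subspace(D=50, d=5)
theta_d = train_in_subspace(grad, theta0, P)
print(loss(theta0), loss(full_params(theta0, P, theta_d)))
```

Only 5 numbers are optimized here even though the model has 50 parameters. Roughly speaking, the intrinsic dimension is then the smallest d for which training in such a subspace reaches a chosen fraction of the performance of ordinary full-space training.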

Read More

DeepMind papers at ICLR 2018

Between 30 April and 3 May, hundreds of researchers will gather in Vancouver, Canada, for the Sixth International Conference on Learning Representations. Here you will find details of all DeepMind’s accepted papers.

Read More

Pentagon-funded research aims to predict when crimes are gang-related

The paper attempts to predict whether crimes are gang-related using a neural network, a complex computational system modeled after a human brain that “learns” to classify or identify items based on ingesting a training dataset. The authors selected what they determined to be the four most important features (number of suspects, primary weapon used, the type of premises where the crime took place, and the narrative description of the crime) for identifying a gang-related crime from 2014–16 LAPD data and cross-referenced the crime incidents with a 2009 LAPD map of gang territory to create a training dataset for their neural network.

Read More

TDM: From Model-Free to Model-Based Deep Reinforcement Learning

While simple, this thought experiment highlights some important aspects of human intelligence. For some tasks, we use a trial-and-error approach, and for others we use a planning approach. A similar phenomenon seems to have emerged in reinforcement learning (RL).

Read More

Swift for TensorFlow Design Overview

Swift for TensorFlow provides a new programming model for TensorFlow, one that combines the performance of graphs with the flexibility and expressivity of eager execution, while keeping a strong focus on improved usability at every level of the stack. To achieve these goals, we allow for (carefully considered) compiler and language enhancements, which enable us to provide a great user experience.

Read More

Introduction to Decision Tree Learning

From Kaggle to classrooms, one of the first lessons in machine learning involves decision trees. The reason for the focus on decision trees is that they aren’t very mathematics-heavy compared to other ML approaches, and at the same time, they provide reasonable accuracy on classification problems.
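To show how little math a single tree split needs, here is a minimal sketch of choosing one threshold on one numeric feature. It assumes Gini impurity as the splitting criterion (the one used by CART; information gain is a common alternative), and the function names are illustrative.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 means the node is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(xs, ys):
    """Return (threshold, weighted_gini) for the best split 'x <= threshold' on one feature."""
    best = (None, float("inf"))
    n = len(xs)
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        if not left or not right:
            continue  # a split must put at least one sample on each side
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (t, score)
    return best

xs = [1, 2, 3, 10, 11, 12]
ys = ["a", "a", "a", "b", "b", "b"]
print(best_split(xs, ys))  # (3, 0.0): splitting at x <= 3 separates the classes perfectly
```

A full tree simply repeats this search over every feature, splits the data, and recurses on each side until the nodes are pure or a depth limit is reached.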

Read More

New A.I. application can write its own code

Designing applications that can program computers is a long-sought grail of the branch of computer science called artificial intelligence (AI). The new application, called Bayou, came out of an initiative aimed at extracting knowledge from online source code repositories like GitHub. Users can try it out at askbayou.com.

Read More

Why data scientists should start learning Swift

One week into my first-year physics course at the University of Michigan, a professor assigned a problem set that required simulating some many-body system. It was due Friday. That was the week I learned my first programming language, Matlab.

Read More

United Kingdom Plans $1.3 Billion Artificial Intelligence Push

The U.K. government said Thursday that part of its multi-year AI investment, about £300 million, or more than $400 million, would come from U.K.-based corporations and investment firms and from those located outside the country.

Read More

Lessons from My First Two Years of AI Research

A friend of mine who is about to start a career in artificial intelligence research recently asked what I wish I had known when I started two years ago. Below are some lessons I have learned so far. They range from general life lessons to relatively specific tricks of the AI trade.

Read More

CIA plans to replace spies with AI

Human spies will soon be relics of the past, and the CIA knows it. Dawn Meyerriecks, the Agency’s deputy director for technology development, recently told an audience at an intelligence conference in Florida the CIA was adapting to a new landscape where its primary adversary is a machine, not a foreign agent.

Read More

New AI Imaging Technique Reconstructs Photos with Realistic Results

To prepare to train their neural network, the team first generated 55,116 masks of random streaks and holes of arbitrary shapes and sizes for training. They also generated nearly 25,000 for testing. These were further sorted into six categories based on their size relative to the input image, in order to improve reconstruction accuracy.

Read More

A Face-Detection Library in 200 Lines of JavaScript

The pico.js library is a JavaScript implementation of the method described in 2013 by Markuš et al. in a technical report. The reference implementation is written in C and available on GitHub: https://github.com/nenadmarkus/pico.

Read More

The AI Revolution Hasn’t Happened Yet

Artificial Intelligence (AI) is the mantra of the current era. The phrase is intoned by technologists, academicians, journalists and venture capitalists alike. As with many phrases that cross over from technical academic fields into general circulation, there is significant misunderstanding accompanying the use of the phrase.

Read More

Machine Learning’s ‘Amazing’ Ability to Predict Chaos

Half a century ago, the pioneers of chaos theory discovered that the “butterfly effect” makes long-term prediction impossible. Even the smallest perturbation to a complex system (like the weather, the economy or just about anything else) can touch off a concatenation of events that leads to a dramatically divergent future. Unable to pin down the state of these systems precisely enough to predict how they’ll play out, we live under a veil of uncertainty.
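The sensitivity described here can be seen in the logistic map, a textbook chaotic system; it is our choice of illustration, not a system taken from the article. Two trajectories that start 1e-10 apart track each other for a while and then become unrelated. At r = 4 the gap roughly doubles each step on average, so a tiny initial error swamps the forecast after a few dozen steps.

```python
def logistic_map_trajectory(x0, r=4.0, steps=60):
    """Iterate x_{n+1} = r * x_n * (1 - x_n); r = 4 is a standard chaotic setting."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

a = logistic_map_trajectory(0.2)
b = logistic_map_trajectory(0.2 + 1e-10)  # a "butterfly-sized" perturbation

for n in range(0, 61, 10):
    print(f"step {n:2d}: |difference| = {abs(a[n] - b[n]):.2e}")
```

Predicting such a system far ahead therefore requires either impossibly precise knowledge of its current state or, as the article discusses, models that learn its dynamics and are continually corrected against new data.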

Read More

A step-by-step guide to the “World Models” AI paper

Teaching a machine to master car racing and fireball avoidance through “World Models”.

Read More

IBM Releases Open Source AI Security Tool

IBM releases the Adversarial Robustness Toolbox, an open source software library designed to help researchers and developers secure artificial intelligence (AI) systems.

Read More

China’s tech giants are venturing into autonomous driving

If there’s one thing China’s tech giants are known for, it’s their ability to venture into everything from social media, to online payments, to delivery services. The latest thing they’re all speeding towards? Autonomous driving.

Read More

This AI Will Turn Your Dog Into a Cat

As detailed in a paper published to arXiv, the neural net is actually a generative adversarial network (GAN), which is a way of training a machine learning algorithm without human supervision. In GANs, two neural nets are pitted against one another: One neural net generates new images and tries to trick the other neural net into thinking the images are real. If the other neural net is able to tell the generated images are false
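For reference, the adversarial game described here is usually written as the minimax objective from the original GAN paper (Goodfellow et al., 2014), where a generator G maps random noise z to images and a discriminator D outputs the probability that its input is a real image. Image-to-image systems such as the one in this article typically add further loss terms, so treat this as the basic formulation rather than the paper's exact loss.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

D is trained to push this value up by labeling real and generated images correctly, while G is trained to push it down by producing images that D mistakes for real ones.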

Read More

Robot cognition requires machines that both think and feel

In the quest to create intelligent robots, designers tend to focus on purely rational, cognitive capacities. It’s tempting to disregard emotion entirely, or to include only as much as is necessary. But without emotion to help determine the personal significance of objects and actions, I doubt that true intelligence can exist: not the kind that beats human opponents at chess or the game of Go, but the sort of smarts that we humans recognise as such.

Read More

15 Types of Regression you should know

Regression is one of the most popular statistical techniques used for predictive modeling and data mining tasks. On average, analytics professionals know only two or three types of regression, usually the ones most commonly used in the real world: linear and logistic regression.
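As a minimal sketch of the two commonly used types named above, the NumPy code below fits ordinary least-squares linear regression and a logistic regression trained by plain gradient descent. The function names, learning rate, step count, and toy data are illustrative assumptions; production code would normally use a library such as scikit-learn or statsmodels.

```python
import numpy as np

def add_intercept(X):
    """Prepend a column of ones so the first coefficient acts as the intercept."""
    X = np.asarray(X, dtype=float).reshape(len(X), -1)
    return np.column_stack([np.ones(len(X)), X])

def fit_linear(X, y):
    """Ordinary least squares via NumPy's least-squares solver."""
    coef, *_ = np.linalg.lstsq(add_intercept(X), np.asarray(y, dtype=float), rcond=None)
    return coef

def fit_logistic(X, y, lr=0.1, steps=5000):
    """Logistic regression: minimize mean cross-entropy with batch gradient descent."""
    Xb = add_intercept(X)
    y = np.asarray(y, dtype=float)
    w = np.zeros(Xb.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))  # predicted probabilities
        w -= lr * Xb.T @ (p - y) / len(y)  # gradient of the mean cross-entropy
    return w

def predict_proba(w, X):
    """Probability of class 1 under a fitted logistic model."""
    return 1.0 / (1.0 + np.exp(-add_intercept(X) @ w))

x = np.array([0, 1, 2, 6, 7, 8])
print(fit_linear(x, 2.0 + 3.0 * x))  # approximately [2, 3]: intercept 2, slope 3
w = fit_logistic(x, [0, 0, 0, 1, 1, 1])
print(predict_proba(w, x).round(3))
```

Linear regression predicts a continuous value directly, while logistic regression passes the same kind of linear combination through a sigmoid to predict a class probability.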

Read More

Depthwise separable convolutions for machine learning

Convolutions are an important tool in modern deep neural networks (DNNs). This post is going to discuss some common types of convolutions, specifically regular and depthwise separable convolutions. My focus will be on the implementation of these operations, showing from-scratch Numpy-based code to compute them and diagrams that explain how things work.
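In the same from-scratch NumPy spirit, here is a minimal sketch of both operations. It assumes channels-first arrays, stride 1, no padding, and cross-correlation (which is what deep learning frameworks compute as "convolution"); the function names are our own. A depthwise separable convolution applies one small filter per input channel, then mixes channels with a 1x1 (pointwise) convolution.

```python
import numpy as np

def conv2d_single(x, k):
    """Valid, stride-1 2D cross-correlation of one channel x (H, W) with kernel k (kh, kw)."""
    H, W = x.shape
    kh, kw = k.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def regular_conv(x, kernels):
    """x: (C, H, W); kernels: (C_out, C, kh, kw). Every output channel sees every input channel."""
    return np.stack([
        sum(conv2d_single(x[c], kernels[o, c]) for c in range(x.shape[0]))
        for o in range(kernels.shape[0])
    ])

def depthwise_conv(x, dw_kernels):
    """x: (C, H, W); dw_kernels: (C, kh, kw). One filter per input channel, no channel mixing."""
    return np.stack([conv2d_single(x[c], dw_kernels[c]) for c in range(x.shape[0])])

def pointwise_conv(x, pw_weights):
    """x: (C, H, W); pw_weights: (C_out, C). A 1x1 convolution that only mixes channels."""
    return np.einsum("oc,chw->ohw", pw_weights, x)

def depthwise_separable_conv(x, dw_kernels, pw_weights):
    return pointwise_conv(depthwise_conv(x, dw_kernels), pw_weights)

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 6, 6))   # 3 input channels, 6x6 image
dw = rng.normal(size=(3, 3, 3))  # one 3x3 filter per input channel
pw = rng.normal(size=(4, 3))     # 4 output channels
y_sep = depthwise_separable_conv(x, dw, pw)
print(y_sep.shape)               # (4, 4, 4)

# Parameter counts: regular = C_out*C*kh*kw, separable = C*kh*kw + C_out*C
print(4 * 3 * 3 * 3, dw.size + pw.size)  # 108 39
```

A depthwise separable convolution gives the same result as a regular convolution whose kernel is constrained to factor as `pw[o, c] * dw[c]`; that constraint is where the parameter and compute savings come from.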

Read More

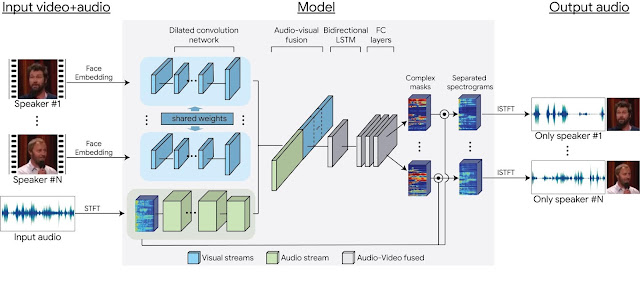

Looking to Listen: Audio-Visual Speech Separation

People are remarkably good at focusing their attention on a particular person in a noisy environment, mentally “muting” all other voices and sounds. Known as the cocktail party effect, this capability comes naturally to us humans. However, automatic speech separation (separating an audio signal into its individual speech sources), while a well-studied problem, remains a significant challenge for computers.

Read More

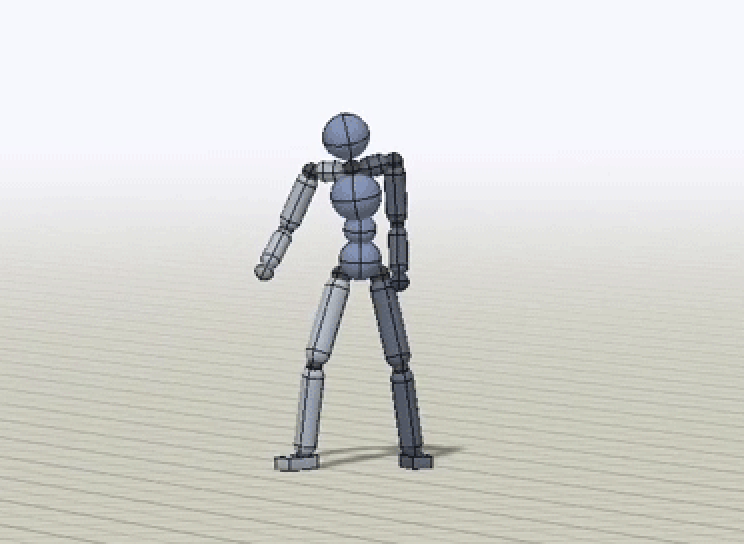

Towards a Virtual Stuntman

Motion control problems have become standard benchmarks for reinforcement learning, and deep RL methods have been shown to be effective for a diverse suite of tasks ranging from manipulation to locomotion. However, characters trained with deep RL often exhibit unnatural behaviours, bearing artifacts such as jittering, asymmetric gaits, and excessive movement of limbs. Can we train our characters to produce more natural behaviours?

Read More

Differentiable Plasticity: A New Method for Learning to Learn

Neural networks, which underlie many of Uber’s machine learning systems, have proven highly successful in solving complex problems, including image recognition, language understanding, and game-playing. However, these networks are usually trained to a stopping point through gradient descent, which incrementally adjusts the connections of the network based on its performance over many trials. Once the training is complete, the network is fixed and the connections can no longer change; as a result, barring any later re-training (again requiring many examples), the network in effect stops learning at the moment training ends.
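The excerpt stops at the problem. As a rough guide to the fix the title refers to, the differentiable plasticity paper (Miconi, Clune and Stanley, 2018), as we recall it, gives each connection a fixed weight plus a plastic component driven by a Hebbian trace, and learns how plastic each connection should be by gradient descent. The notation below follows our reading of that paper and should be checked against it; σ is a nonlinearity such as tanh.

$$
x_j(t) = \sigma\Big(\sum_{i \in \text{inputs}} \big[w_{i,j} + \alpha_{i,j}\,\mathrm{Hebb}_{i,j}(t)\big]\, x_i(t-1)\Big)
$$

$$
\mathrm{Hebb}_{i,j}(t+1) = \eta\, x_i(t-1)\, x_j(t) + (1-\eta)\,\mathrm{Hebb}_{i,j}(t)
$$

Here the weights w_{i,j}, the plasticity coefficients α_{i,j}, and the rate η are set by training, while the trace Hebb_{i,j} keeps changing as the network runs, which is what lets the network continue adapting after training ends.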

Read More

The Case Against an Autonomous Military